Transposed ConvNets Tutorial (Deconvolution)

In the previous tutorials we discussed convolutional neural networks, with examples in TensorFlow. In this one, I will introduce the transposed convolution, also called deconvolution (although it is not a true inverse of convolution), along with the experiments I did in TensorFlow.

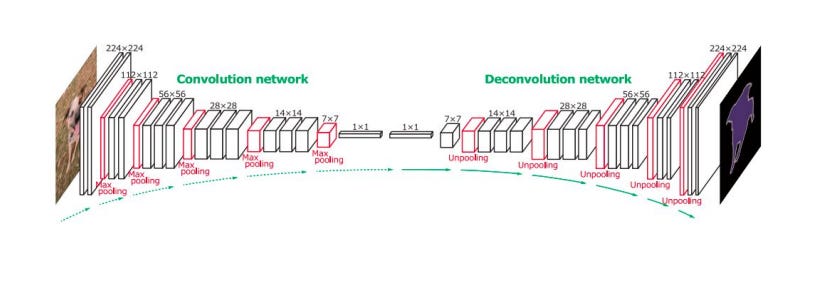

Transposed convolution reverses the shape transformation of a convolution. It is used when we need to generate an output that has the shape of the input. In convolution, we saw that the feature maps form a pyramid, shrinking spatially from layer to layer. A transposed convolution grows them back to a desired shape, usually the size of the input.
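A minimal sketch in pure Python (no TensorFlow) of this shape behaviour in one dimension, assuming 'VALID' padding and no bias. The helpers `conv1d` and `conv1d_transpose` are hypothetical names for illustration. The transposed convolution is written as a scatter-add: each input value adds a scaled copy of the kernel to the output.

```python
def conv1d(x, w, stride):
    """1D cross-correlation with 'VALID' padding (what tf.nn.conv2d computes, in 1D)."""
    k = len(w)
    n_out = (len(x) - k) // stride + 1
    return [sum(x[i * stride + j] * w[j] for j in range(k)) for i in range(n_out)]

def conv1d_transpose(y, w, stride):
    """1D transposed convolution with 'VALID' padding, as a scatter-add:
    every input value y[i] adds y[i] * w into the output at offset i * stride."""
    k = len(w)
    n_out = (len(y) - 1) * stride + k
    out = [0.0] * n_out
    for i, v in enumerate(y):
        for j in range(k):
            out[i * stride + j] += v * w[j]
    return out

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
w = [1.0, 0.0, -1.0]
y = conv1d(x, w, stride=2)                 # length 7 -> 3
x_back = conv1d_transpose(y, w, stride=2)  # length 3 -> 7

print(len(y), len(x_back))  # shapes: the original length comes back
print(x_back == x)          # values: the original signal does not
```

Running it prints `3 7` and then `False`: the transposed convolution restores the length of the input but not its values, so it is only an "inverse" with respect to shape.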

Previously, I linked an ultimate guide to the mathematical modeling and shape equations of convnets. That guide also covers transposed convolution in detail.

The image below gives a clear view of how the transposed convolution works.

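TensorFlow provides this operation as `tf.nn.conv2d_transpose`. Its documentation notes that, despite the name "deconvolution", it is really the transpose (gradient) of `conv2d` rather than an actual deconvolution.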
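To see where the name comes from, a strided 1D convolution can be written as a matrix C acting on the flattened input, and the transposed convolution is then multiplication by the transpose of C. The pure-Python sketch below assumes 'VALID' padding; `conv_matrix`, `matvec` and `transpose` are hypothetical helpers. It checks the identity <Cx, g> = <x, C^T g>, which is why the gradient of a convolution with respect to its input is a transposed convolution.

```python
def conv_matrix(n_in, w, stride):
    """Matrix C such that C @ x is the 'VALID', strided 1D cross-correlation of x with w."""
    k = len(w)
    n_out = (n_in - k) // stride + 1
    C = [[0.0] * n_in for _ in range(n_out)]
    for i in range(n_out):
        for j in range(k):
            C[i][i * stride + j] = w[j]
    return C

def matvec(M, v):
    return [sum(m * a for m, a in zip(row, v)) for row in M]

def transpose(M):
    return [list(col) for col in zip(*M)]

w = [1.0, 2.0, 3.0]
C = conv_matrix(7, w, stride=2)   # 3 x 7: maps length 7 -> 3 (convolution)
Ct = transpose(C)                 # 7 x 3: maps length 3 -> 7 (transposed convolution)

x = [1.0, 0.0, 2.0, 1.0, 0.0, 3.0, 1.0]
g = [1.0, -1.0, 2.0]              # e.g. an upstream gradient for the 3 outputs

# Adjoint identity: <C x, g> == <x, C^T g>.
lhs = sum(a * b for a, b in zip(matvec(C, x), g))
rhs = sum(a * b for a, b in zip(x, matvec(Ct, g)))
print(len(C), len(C[0]), lhs, rhs)
```

This prints `3 7 21.0 21.0`. `tf.nn.conv2d_transpose` applies the same idea in 2D with channels, without building the matrix explicitly.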

I experimented with the simple CNN from the previous tutorial, changing only a little: replace its model function with the following modified function, which uses a transposed convolution.

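```python
import tensorflow as tf  # TF 1.x graph API, as in the previous tutorial

# layer1_weights ... layer4_biases are the variables defined in the previous
# tutorial's graph; only layer2 and layer3 change shape in this version.
def model(data):
    # get_shape() returns the static shape. The batch dimension differs between
    # calls (16 for training, 500 for validation/test), so read it from the input.
    dyn_input_shape = data.get_shape().as_list()
    batch_size = dyn_input_shape[0]
    # conv2d_transpose needs the full output shape, including the batch size.
    # (tf.pack was renamed tf.stack in TensorFlow 1.0.)
    output_shape = tf.stack([batch_size, 28, 28, 16])

    # Stride-2 convolution: 28x28 -> 14x14.
    conv = tf.nn.conv2d(data, layer1_weights, [1, 2, 2, 1], padding='SAME')
    hidden = tf.nn.relu(conv + layer1_biases)

    # Stride-2 transposed convolution: 14x14 -> 28x28x16.
    # Filter layout here is [height, width, output_channels, input_channels].
    conv = tf.nn.conv2d_transpose(hidden, layer2_weights, output_shape, [1, 2, 2, 1], padding='SAME')
    hidden = tf.nn.relu(conv + layer2_biases)

    # Flatten to [batch, 28 * 28 * 16] for the fully connected layers.
    shape = hidden.get_shape().as_list()
    reshape = tf.reshape(hidden, [shape[0], shape[1] * shape[2] * shape[3]])
    hidden = tf.nn.relu(tf.matmul(reshape, layer3_weights) + layer3_biases)
    return tf.matmul(hidden, layer4_weights) + layer4_biases
```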

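Notice how the stride-2 `conv2d` halves the spatial size from 28 × 28 to 14 × 14, and the stride-2 `conv2d_transpose` brings it back to the input size of 28 × 28 with a depth of 16. The first fully connected layer therefore receives 28 × 28 × 16 features, so `layer3_weights` must have that many rows.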
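A small pure-Python sketch that traces these shapes and the weight shapes they imply. The hyperparameters (`depth`, `patch_size`, `num_hidden`) are assumptions for illustration; only the 28 × 28 input, the stride of 2 and the output depth of 16 come from the model function.

```python
import math

# Hypothetical hyperparameters (assumptions): adjust to match your script.
batch_size = 16     # training batch; validation/test use 500
image_size = 28
num_channels = 1    # grayscale input
depth = 16          # output channels of layer1 (assumed)
out_depth = 16      # channels in output_shape of the model function
patch_size = 5
num_hidden = 64

def same_conv_size(n, stride):
    # With padding='SAME', tf.nn.conv2d yields ceil(n / stride) per spatial dimension.
    return math.ceil(n / stride)

h = same_conv_size(image_size, 2)
shape_conv = [batch_size, h, h, depth]                           # after tf.nn.conv2d
shape_deconv = [batch_size, image_size, image_size, out_depth]   # after tf.nn.conv2d_transpose
flat = shape_deconv[1] * shape_deconv[2] * shape_deconv[3]

# Weight shapes implied by these layer sizes.
layer1_shape = [patch_size, patch_size, num_channels, depth]  # conv2d: [h, w, in, out]
layer2_shape = [patch_size, patch_size, out_depth, depth]     # conv2d_transpose: [h, w, out, in]
layer3_shape = [flat, num_hidden]

print(shape_conv, shape_deconv, flat)
print(layer1_shape, layer2_shape, layer3_shape)
```

The order of the last two filter dimensions is the usual pitfall: `conv2d` expects `[h, w, in, out]`, while `conv2d_transpose` expects `[h, w, out, in]`.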

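In `conv2d`, the stride and the input shape determine the output shape. In `conv2d_transpose` the direction is reversed: you pass the output shape explicitly, and together with the stride it must reproduce the input shape when run through the forward convolution. TensorFlow cannot infer it, because a strided convolution rounds, so more than one output size can shrink to the same input size.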
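A pure-Python sketch of that ambiguity (the helper names are hypothetical). It lists every spatial size that a forward `conv2d` would shrink to a given size, using the standard one-dimensional output-size rules for 'SAME' and 'VALID' padding, here with a 5 × 5 filter and stride 2.

```python
import math

def conv_out_size(n, k, stride, padding):
    """Output size of a conv2d along one spatial dimension."""
    if padding == 'SAME':
        return math.ceil(n / stride)
    if padding == 'VALID':
        return (n - k) // stride + 1
    raise ValueError('padding must be SAME or VALID')

def transpose_output_sizes(m, k, stride, padding, max_n=256):
    """Every size n that the forward conv maps to m. Each one is a consistent
    spatial size for output_shape in conv2d_transpose with the same settings."""
    return [n for n in range(1, max_n) if conv_out_size(n, k, stride, padding) == m]

print(transpose_output_sizes(14, 5, 2, 'SAME'))
print(transpose_output_sizes(14, 5, 2, 'VALID'))
```

This prints `[27, 28]` and `[31, 32]`. For the model above, 28 is chosen so the output matches the input image.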

After this modification I got 92.5% accuracy, a slight improvement over the plain convnet.

One more thing to note: the batch size in `output_shape` must match the batch size of the data passed to the model, but we use a batch size of 16 for training and 500 for validation and test. That is why the function reads the batch size from the shape of its input each time it is called instead of hard-coding it. Also, the transposed convolution adds more multiplications, so training takes longer; be patient.
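To get a feel for the extra cost, the sketch below counts multiply-adds per example for each layer of the modified model. The filter size, channel counts, hidden units and class count are assumptions, and the transposed-convolution count uses the scatter view, which is an upper bound.

```python
# Hypothetical sizes (assumptions): 5x5 filters, 16 channels in both
# convolutional layers, 64 hidden units and 10 output classes.
patch, depth, out_depth, num_hidden, num_labels = 5, 16, 16, 64, 10

def conv_macs(out_h, out_w, k, c_in, c_out):
    # Each output value is a k*k*c_in dot product.
    return out_h * out_w * c_out * k * k * c_in

def conv_transpose_macs(in_h, in_w, k, c_in, c_out):
    # Scatter view: every input value is multiplied by a k*k*c_out slice of
    # the filter. This is an upper bound, because products that land in the
    # border cropped away by 'SAME' padding are counted too.
    return in_h * in_w * c_in * k * k * c_out

conv1 = conv_macs(14, 14, patch, 1, depth)                     # 28x28x1 -> 14x14x16
deconv = conv_transpose_macs(14, 14, patch, depth, out_depth)  # 14x14x16 -> 28x28x16
fc1 = (28 * 28 * out_depth) * num_hidden                       # 12544 -> 64
fc2 = num_hidden * num_labels                                  # 64 -> 10

for name, n in [('conv2d', conv1), ('conv2d_transpose', deconv),
                ('fully connected 1', fc1), ('fully connected 2', fc2)]:
    print(f'{name:>18}: {n:>9,} multiply-adds per example')
```

Under these assumptions the transposed layer does 16 times the work of the first convolution, and the first fully connected layer is also large because it now receives 28 × 28 × 16 features.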

If anything is unclear or does not work, get the full code from the GitHub repository.

This code is only meant as a test of how the transposed convolution works with a single CNN layer. I recommend trying to come up with something new: add more transposed layers, try different sizes, and include regularization. Don't hesitate to share your results with the community.