Transition: Software Engineer to Data Engineer

Interested in working in the data engineering landscape? Check out this natural transition for software engineers (SWEs).

Last month, I shared an article on Types of Data Engineers, with the goal of starting a series that covers the transition from various roles to Data Engineering. Today I am kicking off the series with the Software Engineer to Data Engineer transition.

Key Points:

This article covers a natural way to transition from Software Engineer to Data Engineer.

The focus is on folks who already have some experience.

This may not apply to all types of Software Engineers. The transition is quickest for SWEs working in infra.

It targets the area where Data Engineering and Software Engineering overlap, giving you the option to work on both while retaining your title.

The same path can be used in the reverse direction: Data Engineer to Software Engineer.

Why this Transition?

With the rise of AI in the last few years, data has become more important, and folks are looking to work closely with the new AI trend. Since data is one of its most important ingredients, this article is worth a read.

Let’s dive in:

In the last article, I shared the following six areas of expertise from a Data Engineering perspective:

Data Infrastructure Expert

Data Tooling Expert

Data Pipeline Expert

Data Modeling Expert

Data Visualization Expert

Data Domain Expert

To make the transition as natural and simple as possible, the best ones to consider are the first two: Data Infrastructure and Data Tooling. These two areas are where Data Engineering and Software Engineering most commonly overlap.

⭐ I call this transition very natural because it gives you the chance to work closer to the Data Engineering landscape while applying your Software Engineering skills. Depending on the company, you may be able to keep the Software Engineer title.

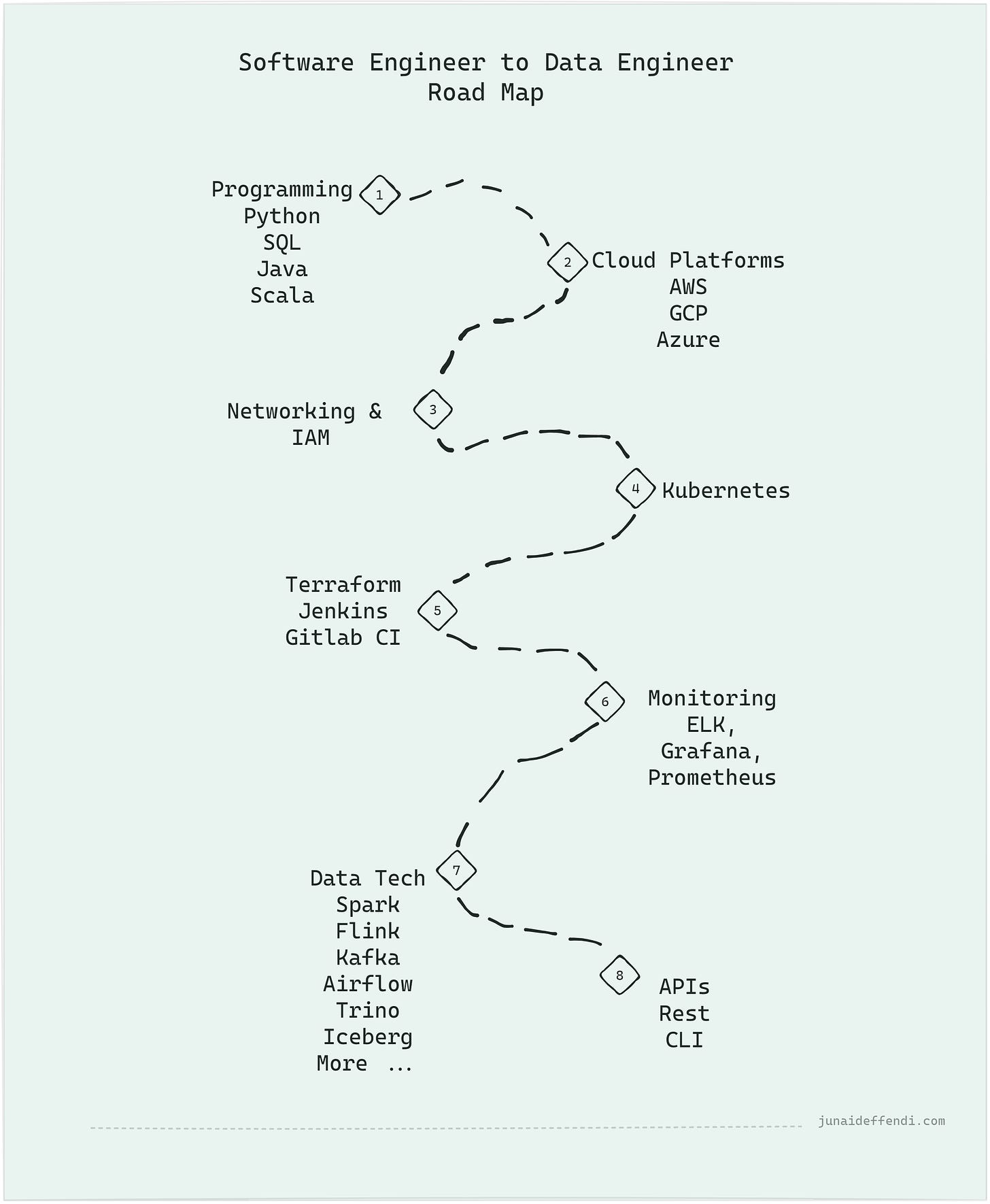

Roadmap

It is pretty challenging to cover everything, so I will focus on the most important tech and tooling based on industry standards and my personal experience.

💡You don’t need to know everything in the roadmap.

To be successful at this, you need to be great at DevOps, Infra, and Data Engineering fundamentals, and definitely at Software Engineering.

💡Based on your experience, you can easily plan around the most useful missing components and go from there. E.g., if you are a Software Engineer with Infra experience, you can start directly at `7`.

Programming

Solid OOP skills are great for building a reusable and scalable data platform.

Python: Super relevant in today’s data world; most recent data tooling is written in Python. It is also the easiest path to entry.

Java: Less visible these days, but still widely used in big tech.

Scala: Still relevant for Spark due to its performance at scale.

SQL: The all-time data language.
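To make the OOP point concrete, here is a minimal sketch of how a shared interface lets platform users plug in their own steps. The names `PipelineStep`, `DropNulls`, `Rename`, and `Pipeline` are hypothetical and only for illustration; a real platform would add configuration, logging, and error handling.

```python
from abc import ABC, abstractmethod
from typing import Iterable

Record = dict  # one row of data, kept as a plain dict for simplicity


class PipelineStep(ABC):
    """Common interface that every step of this hypothetical platform implements."""

    @abstractmethod
    def run(self, records: Iterable[Record]) -> list:
        ...


class DropNulls(PipelineStep):
    """Removes records where the given field is missing or None."""

    def __init__(self, field: str) -> None:
        self.field = field

    def run(self, records):
        return [r for r in records if r.get(self.field) is not None]


class Rename(PipelineStep):
    """Renames one field in every record, leaving other fields untouched."""

    def __init__(self, old: str, new: str) -> None:
        self.old = old
        self.new = new

    def run(self, records):
        return [
            {(self.new if key == self.old else key): value for key, value in r.items()}
            for r in records
        ]


class Pipeline:
    """Runs steps in order; it only relies on the PipelineStep interface."""

    def __init__(self, steps) -> None:
        self.steps = list(steps)

    def run(self, records):
        out = list(records)
        for step in self.steps:
            out = step.run(out)
        return out


rows = [{"id": 1, "amt": 10}, {"id": 2, "amt": None}]
pipeline = Pipeline([DropNulls("amt"), Rename("amt", "amount")])
result = pipeline.run(rows)
print(result)  # [{'id': 1, 'amount': 10}]
```

Because `Pipeline` depends only on the base class, a new step can be added without touching existing code, which is the kind of reuse a data platform team usually wants.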

Cloud Platform

Cloud Platforms are the backbone of most tech companies today.

AWS: Understand core services like EC2, S3, RDS, and Lambda, then move on to data-related services such as Redshift, EMR, and Glue.

GCP: Understand core services like Compute Engine, Cloud Storage, and Cloud SQL, then move on to data-related services such as BigQuery, Dataflow, and Dataproc.

Azure: Understand core services like Virtual Machines, Blob Storage, and SQL Database, then move on to data-related services such as Synapse Analytics, Data Factory, and HDInsight.

Infra & DevOps

IaC (Infrastructure as Code): Terraform is a very popular choice; it's a skill worth having.

CI/CD: Learn to build scalable deployment pipelines using tools like GitLab CI and Jenkins.

Kubernetes: K8s is the backbone of many companies’ infrastructure today. Most data technologies work well with K8s.

Networking: Deploying in the cloud requires an understanding of networking concepts such as VPCs, subnets, and network traffic management.

IAM (Identity and Access Management): User roles, service roles, and permissions are required for scalable and secure software.

Observability: For monitoring, logging, and alerting, ELK, Grafana, and Prometheus are good choices.

Data Technologies (Open Source)

You are most likely to use the tech below or a pretty close alternative. The goal is to provide this tech to end users; in smaller companies you may also use it to build pipelines yourself (Data Pipeline Expert).

Apache Spark: Popular for batch processing.

Apache Flink: Popular for real-time processing.

Apache Kafka: Popular message broker.

Apache Airflow: Popular for orchestrating data pipelines.

Trino: Popular for providing a SQL layer on top of a data lake. (Trino is open source, but it is not an Apache project.)

Apache Iceberg: Popular open table format, works well with Trino.
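If orchestration is new to you, the core idea is that a pipeline is a graph of tasks that must run in dependency order. The sketch below illustrates that idea using only Python's standard library; it is not Airflow's API, and the task names are made up for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: each task maps to the set of tasks it depends on.
dependencies = {
    "extract_orders": set(),
    "clean_orders": {"extract_orders"},
    "aggregate_daily": {"clean_orders"},
    "publish_report": {"aggregate_daily"},
}


def execution_order(deps):
    """Return the tasks in an order that respects every dependency."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)
# ['extract_orders', 'clean_orders', 'aggregate_daily', 'publish_report']
```

A real orchestrator adds scheduling, retries, and monitoring on top of this ordering, which is a large part of what a Data Infra or Tooling team ends up operating.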

💡There is a lot more; check out Data Quality, Data Catalogue, and more here.

Notable mention of popular commercial data tech: Databricks and Snowflake, which are also widely used across large non-tech enterprises and small to midsize tech companies.

APIs

APIs are needed for interacting with internal or external data tooling. That could be the data tech above, or in-house libraries and services such as a Data Quality Library or a Config Driven Orchestration Web Service.

REST APIs: Understand HTTP methods, status codes, and designing endpoints.

CLI Packages: Command-line tools and packages that help with data processing and management, such as cloud platform CLIs and custom CLI packages and libraries.
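As an illustration of a custom CLI package, here is a minimal sketch of a data quality command built with Python's `argparse`. The `dq` program name, the `--max-nulls` flag, and the check itself are assumptions made for this example; to stay self-contained it takes CSV text directly, whereas a real tool would read from a file or table.

```python
import argparse
import csv
import io


def count_nulls(rows, column):
    """Count rows where the column is empty or missing."""
    return sum(1 for row in rows if not (row.get(column) or "").strip())


def build_parser():
    parser = argparse.ArgumentParser(
        prog="dq", description="Hypothetical data quality CLI"
    )
    parser.add_argument("column", help="column to check for empty values")
    parser.add_argument(
        "--max-nulls", type=int, default=0, help="allowed number of empty values"
    )
    return parser


def main(argv, csv_text):
    """Parse arguments, run the check, and return a shell-style exit code."""
    args = build_parser().parse_args(argv)
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    nulls = count_nulls(rows, args.column)
    print(f"{args.column}: {nulls} empty value(s)")
    return 0 if nulls <= args.max_nulls else 1


sample = "id,email\n1,a@example.com\n2,\n"
exit_code = main(["email"], sample)  # one empty email, none allowed
print(exit_code)  # 1
```

Returning a non-zero exit code on failure is what lets a CI/CD job or an orchestrator task stop the pipeline when the check does not pass.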


💬 Since this roadmap could cover much more, I have surely missed something important. Please let me know in the comments.
