Transition: Data Scientist to Data Engineer

Interested in working in the data engineering landscape? Check out this natural transition for Data Scientists.

This is the second article in the Transition into Data Engineering series, this time for my Data Scientist friends. Last time I shared the natural transition for SWEs.

Key Points:

The article will cover the natural way to transition from Data Scientist to Data Engineer.

The focus is on folks who already have some experience.

This may not apply to all types of Data Scientists. The transition is quickest for DS who are already doing informal Data Engineering.

It targets the place where Data Engineering and Data Science overlap.

Why this Transition?

Data scientists are already great with data (Domain Experts), but gaining data engineering skills enhances their impact. Data science was once on top; data engineering is now in demand, with growing opportunities driven by AI.

Let’s dive:

In the initial article here, I shared the following six areas of expertise from a Data Engineering perspective:

Data Infrastructure Expert

Data Tooling Expert

Data Pipeline Expert

Data Modeling Expert

Data Visualization Expert

Data Domain Expert

To make the transition as natural and impactful as possible, the best ones to consider are Data Pipeline, Data Modeling and Data Domain expertise. These three areas are where Data Engineering and Data Science overlap. Many DS folks are already doing the first two, but in an informal way.

⭐ I call this transition very impactful because it gives you a chance to take your data skills to the next level.

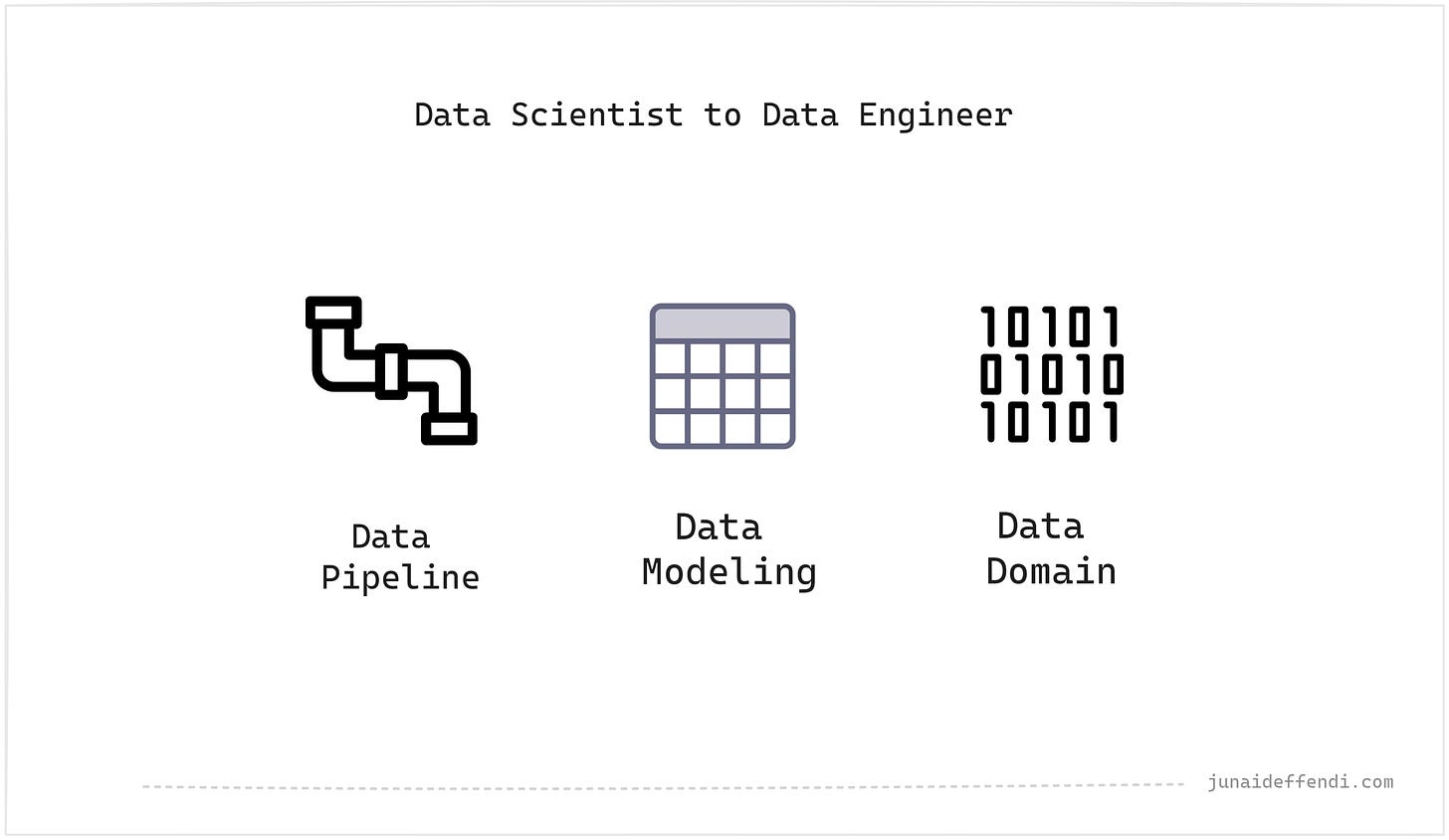

Roadmap

It is pretty challenging to cover everything, so I will focus on the most important parts based on industry standards and my personal experience.

💡You don’t need to know everything in the roadmap.

To be an effective Data Engineer, you need to formalize your Data Engineering knowledge with efficiency and scalability in mind.

💡Based on your experience you can easily plan and learn the most useful and missing components and go from there.

Programming

Having solid programming skills is great for building scalable data services.

Python: Super relevant in today’s data world; most recent data tooling is written in Python. It is also the easiest path to entry.

SQL: The all-time language of data.

Scala: Still relevant for Spark due to its performance at scale.

Cloud Platform

Cloud Platforms are the backbone of most tech companies today.

AWS: Understand the core services like S3, then move on to data-related services such as Redshift, EMR, etc.

GCP: Understand the core services like Cloud Storage and Cloud SQL, then move on to data-related services such as BigQuery, Dataproc, etc.

Azure: Understand the core services like Blob Storage, then move on to data-related services such as Synapse Analytics, Data Factory, etc.

DevOps

Git: Used everywhere as a version control system, learn the best practices.

CI/CD: Learn to build scalable deployment pipelines using tools like GitLab CI and Jenkins.

Data Modeling

Data Scientists already do data modeling of some kind. Formalize it for long-term impact with the following common techniques.

Star: Very common dimensional data model.

Snowflake: Similar to Star but further normalized.

One Big Table: Modern approach with heavy denormalization for performance benefits.
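To make the difference between these models concrete, here is a minimal sketch using Python's built-in sqlite3 module. The table and column names (fact_sales, dim_customer, dim_product, obt_sales) are hypothetical and chosen only for illustration; a real warehouse would have many more dimensions and measures.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension tables hold descriptive attributes.
    CREATE TABLE dim_customer (customer_id INTEGER PRIMARY KEY, name TEXT, country TEXT);
    CREATE TABLE dim_product  (product_id  INTEGER PRIMARY KEY, name TEXT, category TEXT);
    -- The fact table holds measures plus a foreign key to each dimension.
    CREATE TABLE fact_sales (
        sale_id     INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES dim_customer(customer_id),
        product_id  INTEGER REFERENCES dim_product(product_id),
        amount      REAL
    );
    INSERT INTO dim_customer VALUES (1, 'Ada', 'UK'), (2, 'Linus', 'FI');
    INSERT INTO dim_product  VALUES (10, 'Laptop', 'Hardware'), (11, 'IDE license', 'Software');
    INSERT INTO fact_sales   VALUES (100, 1, 10, 1200.0), (101, 2, 11, 200.0), (102, 1, 11, 200.0);
""")

# A typical star-schema query: join the fact to a dimension, then aggregate.
revenue_by_country = conn.execute("""
    SELECT c.country, SUM(f.amount) AS revenue
    FROM fact_sales f
    JOIN dim_customer c ON c.customer_id = f.customer_id
    GROUP BY c.country
    ORDER BY c.country
""").fetchall()

# One Big Table: the same data pre-joined into a single wide, denormalized table.
conn.execute("""
    CREATE TABLE obt_sales AS
    SELECT f.sale_id, f.amount, c.name AS customer, c.country,
           p.name AS product, p.category
    FROM fact_sales f
    JOIN dim_customer c ON c.customer_id = f.customer_id
    JOIN dim_product  p ON p.product_id  = f.product_id
""")
obt_columns = [row[1] for row in conn.execute("PRAGMA table_info(obt_sales)")]
```

A Snowflake model would go one step further than the star above by splitting a dimension into its own lookup tables, for example moving category out of dim_product.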

Data Patterns

Data Scientists write pipelines every day on top of the core dataset. To make them scalable and efficient, learn the best practices for the following patterns.

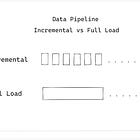

Batch: A pipeline that runs in batches, e.g. per file or per scheduled interval, as either an incremental or a full load.

Streaming: A pipeline that runs continuously in real time, e.g. event-driven systems.
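The two patterns can be sketched in plain Python. This is only an illustration under simple assumptions: the SOURCE rows, the updated_at column, and the function names are all hypothetical, and the incremental load uses a high-water mark, which is one common way to track what has already been loaded.

```python
from datetime import datetime

# Hypothetical source rows; in practice these would come from a file or a database.
SOURCE = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 2)},
    {"id": 3, "updated_at": datetime(2024, 1, 3)},
]

def full_load(source):
    """Full load: rebuild the target from scratch on every run."""
    return list(source)

def incremental_load(source, target, watermark):
    """Incremental load: append only rows newer than the last watermark."""
    new_rows = [r for r in source if watermark is None or r["updated_at"] > watermark]
    target.extend(new_rows)
    # Advance the watermark to the newest row loaded in this run.
    if new_rows:
        watermark = max(r["updated_at"] for r in new_rows)
    return watermark

def stream(events):
    """Streaming: handle each event as it arrives instead of waiting for a batch."""
    for event in events:
        yield {**event, "processed": True}

target = []
watermark = incremental_load(SOURCE[:2], target, None)   # first scheduled run
watermark = incremental_load(SOURCE, target, watermark)  # next run only picks up id 3
```

The full load is simpler and self-healing but reprocesses everything; the incremental load is cheaper per run but depends on a trustworthy watermark column.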

Data Quality

Make sure to validate the data you are producing.

Write-Audit-Publish: This lets you catch data issues early in the pipeline, before publishing to end users.

Invalid Table: This lets you make good data available to end users even when some of the data is bad; the bad data lands in a separate table.
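Here is a minimal sketch that combines both patterns with Python's built-in sqlite3 module. The table names (orders_staging, orders, orders_invalid) and the audit rule are hypothetical. Note that a strict Write-Audit-Publish setup might block the whole batch when the audit fails; this variant instead routes failing rows to the invalid table so the good rows can still be published.

```python
import sqlite3

def is_valid(row):
    """Hypothetical audit rule: an order needs an id and a non-negative amount."""
    order_id, amount = row
    return order_id is not None and amount is not None and amount >= 0

def write_audit_publish(conn, rows):
    # Write: land the new batch in a staging table that end users never query.
    conn.execute("DROP TABLE IF EXISTS orders_staging")
    conn.execute("CREATE TABLE orders_staging (order_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders_staging VALUES (?, ?)", rows)

    # Audit: check every staged row against the rule.
    staged = conn.execute("SELECT order_id, amount FROM orders_staging").fetchall()
    good = [r for r in staged if is_valid(r)]
    bad = [r for r in staged if not is_valid(r)]

    # Publish: good rows go to the user-facing table, bad rows to the invalid table.
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT INTO orders VALUES (?, ?)", good)
        conn.executemany("INSERT INTO orders_invalid VALUES (?, ?)", bad)
    return len(good), len(bad)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL)")
conn.execute("CREATE TABLE orders_invalid (order_id INTEGER, amount REAL)")
published, rejected = write_audit_publish(
    conn, [(1, 9.99), (2, -5.0), (None, 3.0), (3, 0.0)]
)
```

In this run, orders 1 and 3 pass the audit and are published, while the negative amount and the missing id land in orders_invalid for later inspection.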

Data Technologies (Open Source)

As a data scientist, you may have worked with the tools below or a close alternative. The goal is to take this to the next level by diving deep into each from an engineering perspective.

Apache Spark: Popular for Batch Processing.

Apache Airflow: Popular for orchestrating data pipelines.

Trino: Popular for providing a SQL layer on top of a data lake.

Apache Iceberg: Popular open table format, works well with Trino.

Great Expectations: Popular open source data quality tool.

💡There is a lot more; check it out here.

Domain Experts

Data Scientists are already experts on data. Continuing to understand the data, its use cases, and how it helps the business keeps you effective as a Data Engineer.

The following articles will help you with the transition:

💬 This roadmap could cover much more, so I have surely missed something important. Please let me know in the comments.