Text Mining Tutorial using Word2Vec (Continuous Bag of Words)

Continuous Bag of Words, also known as CBOW, is another Word2Vec technique used to find relationships among keywords. It is essentially the reverse of the skip-gram model covered previously. We will see how it differs and how that affects performance on the same dataset.

In Continuous Bag of Words, the context predicts the target word; in other words, more than one word predicts a single label.

For example: I love tensorflow

The context words I and tensorflow together predict the center word love.

We will use the same dataset as discussed in the previous model, and the code will be similar to the previous one as well. It will be easy to follow if you know how the skip-gram model works.
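To make the contrast with skip-gram concrete, here is a minimal sketch in plain Python that builds the training pairs for the sentence above, using a window of one word on each side. The helper names cbow_pairs and skipgram_pairs are hypothetical and are not part of the tutorial's code; only positions with a complete window are considered.

```python
def cbow_pairs(tokens, window=1):
    """Return (context_words, target) pairs: many words predict one."""
    pairs = []
    for i in range(window, len(tokens) - window):
        context = tokens[i - window:i] + tokens[i + 1:i + window + 1]
        pairs.append((context, tokens[i]))
    return pairs


def skipgram_pairs(tokens, window=1):
    """Return (target, context_word) pairs: one word predicts each neighbor."""
    pairs = []
    for i in range(window, len(tokens) - window):
        for j in range(i - window, i + window + 1):
            if j != i:
                pairs.append((tokens[i], tokens[j]))
    return pairs


sentence = "I love tensorflow".split()
print(cbow_pairs(sentence))      # [(['I', 'tensorflow'], 'love')]
print(skipgram_pairs(sentence))  # [('love', 'I'), ('love', 'tensorflow')]
```

CBOW produces one training example with two inputs, while skip-gram produces two examples with one input each.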

Since several words are used to predict a single word, we need the compress function from the itertools library. Import it with the following line:

    from itertools import compress

The generate_batch method, as mentioned, is the main part, and we need to modify it for the CBOW model. First, because more than one word now makes the prediction, the batch becomes a 2D array of shape (batch_size, num_skips), where num_skips is the number of context words used to predict.
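As a small illustration of what compress does in this setting, the sketch below applies a mask to a single window of word ids. The ids are made up for the example; in the tutorial they come from the data list built earlier. Each row of the batch is filled in this way.

```python
import collections
from itertools import compress

skip_window = 1
span = 2 * skip_window + 1             # [ skip_window target skip_window ]

# Hypothetical word ids standing in for one window of the real dataset.
buffer = collections.deque([12, 7, 42], maxlen=span)

mask = [1] * span                       # keep every position ...
mask[skip_window] = 0                   # ... except the center word

context = list(compress(buffer, mask))  # surrounding words -> one row of batch
target = buffer[skip_window]            # center word -> one label

print(context, target)                  # [12, 42] 7
```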

Replace the generate_batch method with the following:

    def generate_batch(batch_size, num_skips, skip_window):
        global data_index
        assert batch_size % num_skips == 0
        # every context word in the window fills one column, so the widths must match
        assert num_skips == 2 * skip_window
        batch = np.ndarray(shape=(batch_size, num_skips), dtype=np.int32)
        labels = np.ndarray(shape=(batch_size, 1), dtype=np.int32)
        span = 2 * skip_window + 1  # [ skip_window target skip_window ]
        buffer = collections.deque(maxlen=span)
        for _ in range(span):
            buffer.append(data[data_index])
            data_index = (data_index + 1) % len(data)
        for i in range(batch_size):
            mask = [1] * span  # setting 1 w.r.t. window size
            mask[skip_window] = 0  # setting 0 for the word to be predicted
            batch[i, :] = list(compress(buffer, mask))  # gives all surrounding words
            labels[i, 0] = buffer[skip_window]  # the word at the center
            buffer.append(data[data_index])
            data_index = (data_index + 1) % len(data)
        return batch, labels

Also change the shape of train_dataset:

    train_dataset = tf.placeholder(tf.int32, shape=[batch_size, num_skips])

Since train_dataset is now 2D, we also need to modify the lookup:

    embed = 0
    for j in range(num_skips):
        # accumulate, so every context word contributes and not only the last column
        embed += tf.nn.embedding_lookup(embeddings, train_dataset[:, j])

The rest remains the same. Get the full code of the CBOW model from the GitHub repo.
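The column-wise lookup can be sketched with NumPy, independent of TensorFlow. This is only an illustration under assumed toy sizes (a vocabulary of 5 words and 3-dimensional embeddings), and it assumes the context vectors are combined by summing; the full model may combine them differently, for example by averaging.

```python
import numpy as np

vocabulary_size, embedding_size = 5, 3   # toy sizes, assumed for this sketch
batch_size, num_skips = 2, 2

# Toy embedding matrix: row k holds the vector for word id k.
embeddings = np.arange(vocabulary_size * embedding_size, dtype=np.float32)
embeddings = embeddings.reshape(vocabulary_size, embedding_size)

# Each row holds the context word ids for one target word.
train_dataset = np.array([[0, 2],
                          [1, 4]], dtype=np.int32)

# Look up one context column at a time and accumulate the vectors,
# so every context word contributes to the combined input.
embed = np.zeros((batch_size, embedding_size), dtype=np.float32)
for j in range(num_skips):
    embed += embeddings[train_dataset[:, j]]

print(embed.shape)  # (2, 3)
print(embed)
```

The result has one combined vector per target word, which is the same shape the skip-gram model feeds into its loss, so the rest of the graph does not need to change.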

One more thing: the plot method simply draws the graphs shown below for both versions. You will notice that the skip-gram and CBOW graphs differ.

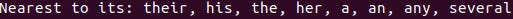

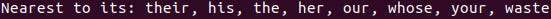

The following is the output obtained:

CBOW

Skip-Gram

As you can see, CBOW did not work as well on this small dataset: it learns relatively slowly here compared to the skip-gram model. Even after 100001 iterations, the nearest-word lists still contain some irrelevant words.