Testing Data in Apache Spark

How to efficiently test large-scale Spark jobs using open source libraries.

We all know the benefits of testing software components through different types of tests, such as unit, regression, and integration tests. However, testing datasets at scale can be challenging. Apache Spark is a distributed engine that can process petabytes of data, but depending on the type of test we are running, Spark tests can end up very time-consuming and especially hard to manage if they are not planned beforehand.

Testing has always been an important part of the data lifecycle. Data Engineers test in different ways, which we will discuss later, but one of the biggest challenges is that there are far fewer standard practices for testing data than in software engineering, which has a lot to offer in this space. In this article, I will share some of the techniques and practices I have used to test Spark jobs more efficiently.


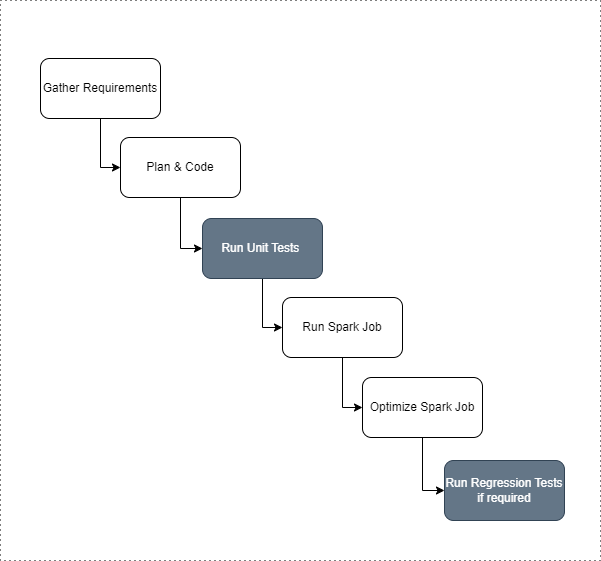

Workflow

A typical high-level workflow showcasing the tests looks something like this for a Data Engineer:

The workflow does not take into account a few important items, such as the feedback loops with stakeholders.

This cycle repeats, and within it we see two common types of tests we need to run:

Unit Tests

Unit tests, as we know them from software engineering, test individual functions in an isolated environment. They can run locally or in a remote pipeline. In data engineering, the unit can be a single function or a whole Spark job, and the tests can become time-consuming depending on the size of the input data and the Spark configuration.
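To make the idea of an isolated unit concrete, here is a minimal sketch in Python. It assumes the transformation logic is factored into a plain function over rows so it can be tested without starting Spark. `dedupe_latest` and its column names are hypothetical; in a real Spark job the same logic would typically be a window function (`row_number` over a window partitioned by the key). The tests are written pytest-style.

```python
def dedupe_latest(rows, key, order_by):
    """Keep only the latest row per key (hypothetical transformation)."""
    latest = {}
    for row in rows:
        k = row[key]
        # Keep this row if it is the first for its key or strictly newer.
        if k not in latest or row[order_by] > latest[k][order_by]:
            latest[k] = row
    # Sort by key so the result order is deterministic for assertions.
    return sorted(latest.values(), key=lambda r: r[key])


def test_keeps_latest_row_per_key():
    rows = [
        {"id": 1, "ts": 1, "status": "new"},
        {"id": 1, "ts": 3, "status": "shipped"},
        {"id": 2, "ts": 2, "status": "new"},
    ]
    assert dedupe_latest(rows, "id", "ts") == [
        {"id": 1, "ts": 3, "status": "shipped"},
        {"id": 2, "ts": 2, "status": "new"},
    ]


def test_empty_input():
    assert dedupe_latest([], "id", "ts") == []
```

Because the function has no Spark dependency, these tests run in milliseconds; the trade-off is that the Spark wiring around it still needs a job-level test.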

Typical challenges with unit tests are:

Sample Data

Preparing sample data for unit tests can be quite challenging, because the data has to be representative enough to exercise the logic under test. It is worth investing in an internal package that builds sample datasets from defined configurations, or using a third-party library like Faker. Using production data to create fake datasets is likely to give you the closest possible representation.
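A minimal sketch of such an internal sample-data builder, using only Python's standard library instead of Faker. The function name, columns, and `null_rate` parameter are hypothetical; the point is that a fixed seed keeps the data reproducible across test runs and a configurable null rate deliberately exercises edge cases.

```python
import random
from datetime import date, timedelta


def make_sample_orders(n, seed=42, null_rate=0.1):
    """Build n synthetic order rows for a hypothetical orders schema.

    A fixed seed makes the data reproducible across test runs, and
    null_rate injects missing countries so null handling gets exercised.
    """
    rng = random.Random(seed)  # local RNG: does not touch global random state
    countries = ["US", "DE", "IN", "BR"]
    start = date(2023, 1, 1)
    rows = []
    for i in range(n):
        rows.append({
            "order_id": i,
            "country": None if rng.random() < null_rate else rng.choice(countries),
            "amount": round(rng.uniform(1, 500), 2),
            "order_date": start + timedelta(days=rng.randrange(365)),
        })
    return rows
```

The resulting rows can be passed to `spark.createDataFrame`, ideally together with an explicit schema, since Spark cannot infer the type of a column whose sampled values happen to be all null.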

Test Cases

Covering all cases and behaviors is hard to accomplish. It depends heavily on test data preparation, and writing many test cases can become cumbersome. One way to overcome this is to write tests at the Spark job level rather than the function level. This is likely to be easier and more efficient, with the downside that failures become harder to debug.
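The job-level approach can be sketched as follows, again in plain Python and assuming the job is a composition of small steps. `clean`, `revenue_by_country`, and `run_job` are hypothetical; a single test on `run_job` covers both steps at once, which is exactly the efficiency and the debugging cost described above.

```python
from collections import defaultdict


def clean(rows):
    # Step 1: drop rows without a country.
    return [r for r in rows if r["country"] is not None]


def revenue_by_country(rows):
    # Step 2: total amount per country.
    totals = defaultdict(float)
    for r in rows:
        totals[r["country"]] += r["amount"]
    return dict(totals)


def run_job(rows):
    """Hypothetical job: the unit under test is the whole pipeline."""
    return revenue_by_country(clean(rows))


def test_run_job_end_to_end():
    rows = [
        {"country": "US", "amount": 10.0},
        {"country": "US", "amount": 5.0},
        {"country": None, "amount": 99.0},  # should be dropped by clean()
        {"country": "DE", "amount": 7.5},
    ]
    # One assertion covers both steps; if it fails, you still have to
    # work out which step broke.
    assert run_job(rows) == {"US": 15.0, "DE": 7.5}
```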

Unit Testing Library

At scale, investing time in an internal tool that avoids duplicated test code is ideal; without it, you could end up with unmaintainable code.

Tests are still better with some repeated code, as it makes them easy to understand; however, there should be a limit to it.

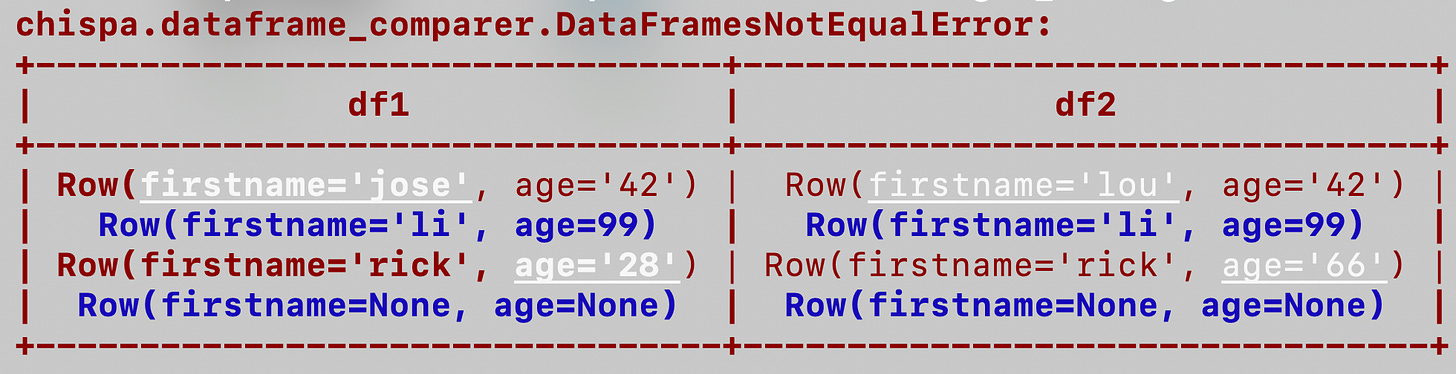

Some common functionality should be shared. Leveraging an existing Spark testing library, e.g. Chispa, is another great option.

Chispa is a PySpark library that comes with a lot of boilerplate testing code along with beautiful error messages.
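As an illustration of the kind of shared assertion such a library gives you, here is a minimal stdlib sketch of an order-insensitive row comparison whose failure message lists missing and unexpected rows. `assert_rows_equal` is hypothetical and is not chispa's implementation; it treats rows as tuples and compares them as multisets, so duplicates count.

```python
from collections import Counter


def assert_rows_equal(actual, expected):
    """Order-insensitive row comparison with a readable failure message.

    Hypothetical helper, not chispa's API. Rows are tuples.
    """
    missing = Counter(expected) - Counter(actual)     # expected but not produced
    unexpected = Counter(actual) - Counter(expected)  # produced but not expected
    if missing or unexpected:
        lines = ["Rows differ:"]
        # Sort by repr so rows mixing None and other values still sort.
        lines += [f"  missing    x{c}: {row}" for row, c in sorted(missing.items(), key=repr)]
        lines += [f"  unexpected x{c}: {row}" for row, c in sorted(unexpected.items(), key=repr)]
        raise AssertionError("\n".join(lines))
```

With chispa itself, the comparable check on two DataFrames is `assert_df_equality(df1, df2, ignore_row_order=True)`.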

Regression Test

Regression tests are typically run end to end to make sure no bugs are introduced into the data. For example, after a migration we compare the old and new generated datasets, which should match exactly if no logic was changed. These tests can be run locally depending on data size, but at scale they require clusters with automated scheduling.
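For datasets with a primary key, a regression comparison is most useful when it reports which keys disappeared, which appeared, and which columns changed. The sketch below does that on plain Python rows; `compare_by_key` is hypothetical and assumes the key is unique in both versions, roughly what a full outer join on the key would give you in Spark.

```python
def compare_by_key(old, new, key):
    """Key-based regression diff between two versions of a dataset.

    Hypothetical helper; assumes `key` is unique within each dataset.
    """
    old_by_key = {r[key]: r for r in old}
    new_by_key = {r[key]: r for r in new}
    only_old = sorted(old_by_key.keys() - new_by_key.keys())
    only_new = sorted(new_by_key.keys() - old_by_key.keys())
    changed = {}
    for k in sorted(old_by_key.keys() & new_by_key.keys()):
        o, n = old_by_key[k], new_by_key[k]
        # Record every column whose value differs, as (old, new).
        diffs = {
            c: (o.get(c), n.get(c))
            for c in o.keys() | n.keys()
            if o.get(c) != n.get(c)
        }
        if diffs:
            changed[k] = diffs
    return {"only_old": only_old, "only_new": only_new, "changed": changed}
```

When a key-level report is not needed, a simpler check in PySpark is `old_df.exceptAll(new_df)` and `new_df.exceptAll(old_df)`: both should be empty when nothing changed.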

Typical challenges with regression tests are:

Local Regression Test

Running regression tests locally on big datasets is practically impossible. Local runs only work if your dataset is small enough to fit in the available memory of your machine, so it is better to use cloud computing such as EMR clusters for regressions. That costs money, but it is convenient and close to the production environment. Automating the runs through an orchestrator also helps with wait times, for example by running comparison jobs overnight.

Runtime

Regression tests are known to be slow: they run across the whole dataset and compare two datasets, which can take a lot of time. This is where Spark optimization comes into the picture. The Spark job may already be optimized, but the test needs to be optimized as well, considering that tests run back to back. Learn how to optimize Spark jobs.
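One way to keep regression runs cheap is to compare a small summary of each dataset first and run the full row-level diff only when the summaries disagree. The sketch below, with hypothetical names, uses a row count plus an order-independent sum of per-row hashes. It uses `hashlib` rather than Python's built-in `hash`, because the latter is randomized per process for strings.

```python
import hashlib


def fingerprint(rows):
    """Order-independent summary: (row count, sum of row hashes mod 2**64)."""
    total = 0
    count = 0
    for row in rows:
        # Stable per-row hash; repr() of a tuple of basic values is deterministic.
        digest = hashlib.blake2b(repr(row).encode("utf-8"), digest_size=8).digest()
        total = (total + int.from_bytes(digest, "big")) % 2**64
        count += 1
    return count, total


def needs_full_diff(old_rows, new_rows):
    # Only pay for the expensive row-level comparison when summaries disagree.
    return fingerprint(old_rows) != fingerprint(new_rows)
```

Different fingerprints always mean the data differs, while equal fingerprints are strong but not absolute evidence, since hash collisions are possible. In Spark, the per-row hash could come from `pyspark.sql.functions.xxhash64` applied to all columns, aggregated alongside the row count.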

Regression Testing Library

As with unit tests, a robust testing library helps avoid many of the pitfalls that come with testing. If you need to run regressions on multiple datasets, a library is better than duplicated scripts for each test. Similar to the PySpark-based Chispa, there is a Scala Spark library, spark-fast-tests, by the same author, Matthew Powers.

The library is similar to Chispa but written for Scala, supporting both the DataFrame and Dataset APIs. Some of its useful functions, which are already optimized for Spark, are:

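Dataset:

assertSmallDatasetEquality

assertLargeDatasetEquality

DataFrame:

assertSmallDataFrameEquality

assertLargeDataFrameEquality

The Small variants are faster for test suites that run on a local machine, while the Large variants are meant for data split across nodes in a cluster. Further, custom logic can be built on top, such as removing or adding columns.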
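A minimal sketch of one such custom extension: ignoring columns that are expected to differ between runs, such as a load timestamp, before comparing. It is written in Python to match the other examples here rather than in Scala, and `without_columns` and `same_ignoring` are hypothetical helpers, not part of the spark-fast-tests API.

```python
def without_columns(rows, ignore):
    """Project dict rows onto the columns that should be compared.

    Hypothetical helper: drops columns expected to differ between runs.
    """
    ignore = set(ignore)
    # Sorted (column, value) pairs give a hashable, order-stable row.
    return [tuple(sorted((k, v) for k, v in r.items() if k not in ignore)) for r in rows]


def same_ignoring(old, new, ignore):
    # Order-insensitive comparison of the remaining columns.
    left = sorted(without_columns(old, ignore), key=repr)
    right = sorted(without_columns(new, ignore), key=repr)
    return left == right
```

With DataFrames, the same effect comes from calling `df.drop(*ignore)` on both sides before passing them to the comparison.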

Both chispa and spark-fast-tests serve a similar purpose in different languages; however, the former has more beautiful error messages, and I hope to see something similar in Scala soon. :)

Conclusion

As you can see, testing Spark the proper way brings real benefits. Whether you use the existing open source libraries by MrPowers or build your own, a shared testing library is the best way forward, along with making sure the data we produce for unit tests is good enough to cover all test cases.

Share which approaches and libraries you use and what other tests you run for your Spark jobs. If you are interested in more Spark content, check out this page: Spark.