Terraform vs Asset Bundles for Databricks Workflows

Sharing our experience of moving from Terraform to Asset Bundles for Databricks workflow deployment, covering the challenges and the benefits.

Today, I'll share why we transitioned from Terraform to Databricks Asset Bundles (DAB) for workflow management.

I will cover:

Challenges and pain points with Terraform

Benefits of using Asset Bundles

In short, Asset Bundles provide a user-friendly approach to deploying Databricks resources, while Terraform requires more technical knowledge, setup, and maintenance.

Terraform

When we migrated to Databricks, we deployed all resources using Terraform, which worked well for the infrastructure but didn't provide the best workflow experience.

At the time of the migration, the team was already comfortable with Terraform, and DAB was still very new.

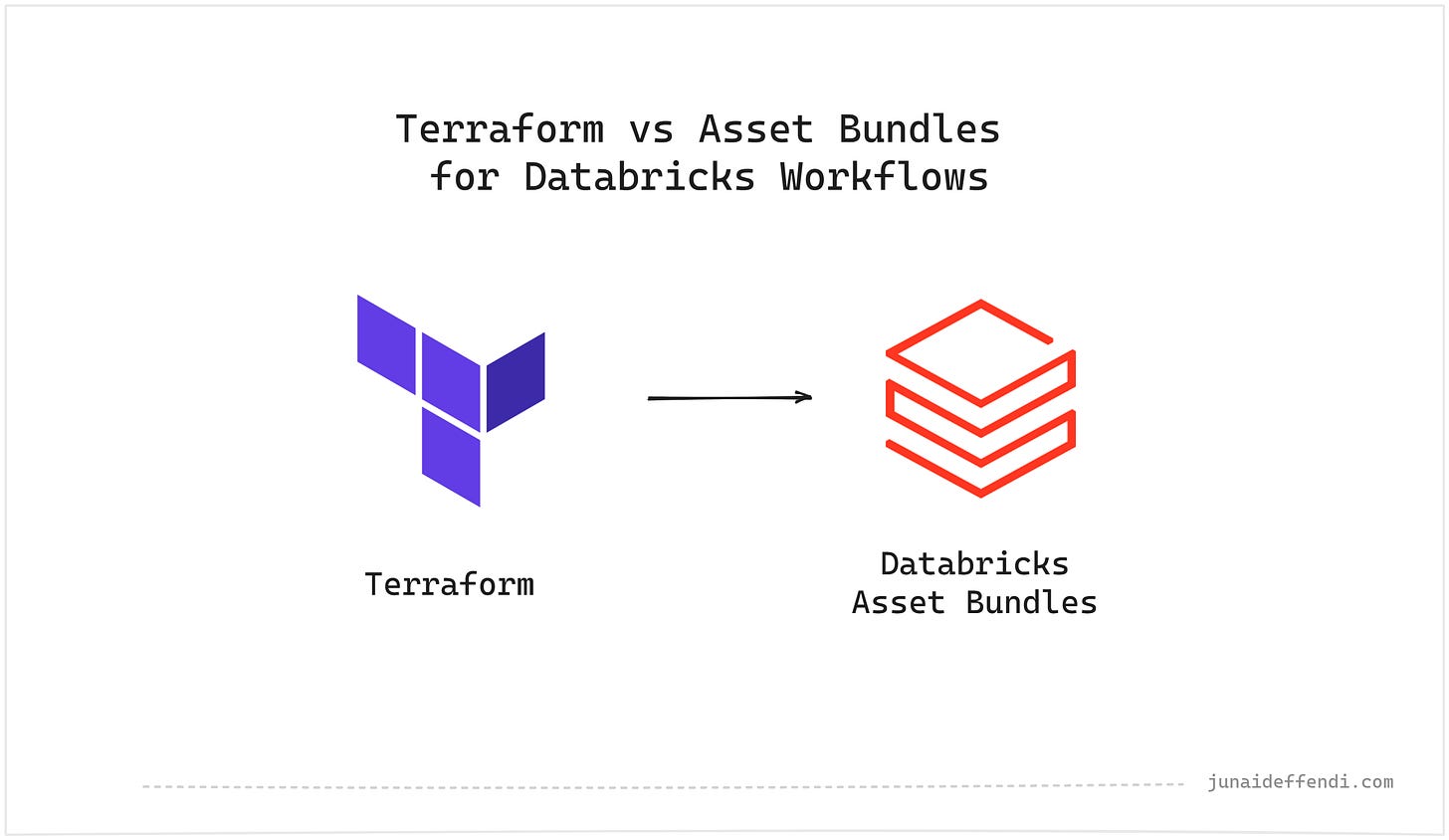

The diagram shows the development cycle we had with Terraform: three repositories, each with its own approval process.

This design does not scale well when you have multiple environments.

Problems:

Keeping the two Terraform repositories in sync was challenging and led to duplicated work (a redesign of the Terraform repositories could have reduced this).

Deploying to dev for testing caused disruptions due to Terraform state issues, such as restarting the general-purpose cluster that other teams were using.

Multiple codebases and review processes slowed down the development cycle and hurt developer productivity.

Secure environments such as GovCloud required even more duplicated work and maintenance.

💡 Decoupling makes the deployment process painful, but workflow changes are independent of the source code, so you don't need to worry about the application code when changing a workflow.

Asset Bundles

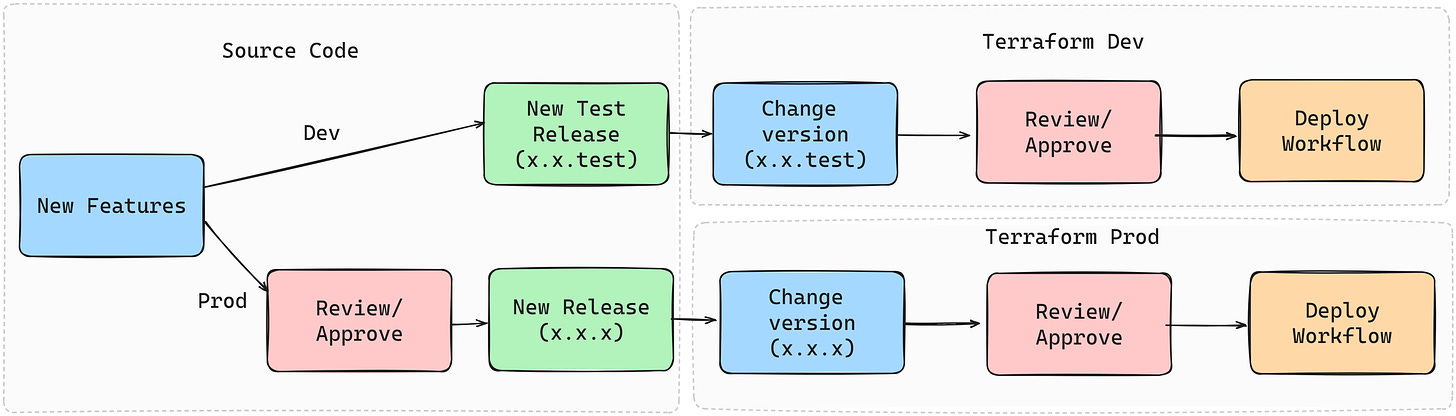

The diagram shows the development cycle after the DAB migration.

Compared with the Terraform approach, it eliminates the Code Change (blue) and Approval (red) steps.

A single end-to-end repository simplifies maintenance.

Developers can work independently and conduct end-to-end testing in development before requesting reviews.

Workflow definitions are shared across environments, with environment-specific details parameterized.

Onboarding is easier because no Terraform knowledge is required; data scientists can also work independently.

Moving a workflow from the console to DAB is as simple as copying its YAML/JSON definition, with no conversion to Terraform needed.
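To make "shared definitions with environment-specific details" concrete: in a real bundle, the shared job lives in `databricks.yml` (or an included resource file) and per-environment differences go under `targets`, with the Databricks CLI doing the merge. The Python sketch below only mimics that idea with a generic recursive dictionary merge. The job name, schedule, and override values are hypothetical, and DAB's own merge rules differ in details (for example, it can match job tasks by `task_key` rather than replacing the whole list).

```python
from copy import deepcopy
from typing import Any


def deep_merge(base: dict[str, Any], override: dict[str, Any]) -> dict[str, Any]:
    """Return a new dict where override's keys win; nested dicts merge recursively."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            # Scalars and lists in the override replace the base value outright.
            merged[key] = deepcopy(value)
    return merged


# Hypothetical shared job definition: one copy for every environment.
BASE_JOB = {
    "name": "daily_ingest",
    "schedule": {
        "quartz_cron_expression": "0 0 6 * * ?",
        "timezone_id": "UTC",
        "pause_status": "UNPAUSED",
    },
    "tasks": [{"task_key": "ingest", "notebook_task": {"notebook_path": "./src/ingest"}}],
}

# Hypothetical per-environment overrides, similar in spirit to bundle `targets`.
TARGET_OVERRIDES = {
    "dev": {"schedule": {"pause_status": "PAUSED"}},
    "prod": {"email_notifications": {"on_failure": ["oncall@example.com"]}},
}


def resolve_job(target: str) -> dict[str, Any]:
    """Shared definition plus the overrides for one environment."""
    return deep_merge(BASE_JOB, TARGET_OVERRIDES.get(target, {}))


if __name__ == "__main__":
    import json

    print(json.dumps(resolve_job("dev"), indent=2))
```

Only the dev schedule changes and only prod gets failure alerts, while everything else comes from the single shared definition.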

We could have achieved the same coupled approach with Terraform, but it would have required additional setup and Terraform expertise.
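The console-to-DAB copy step from the benefits list mostly amounts to wrapping the job's settings under a bundle resource key. The sketch below assumes the copied JSON either is a bare settings object or follows the Jobs API "get" shape (definition nested under `settings`). The set of fields it strips is an assumption for illustration, not an exhaustive rule, and some fields may still need manual adjustment to match the bundle schema.

```python
import json
from typing import Any

# Assumed identity/metadata fields that appear in console or Jobs API JSON
# but do not belong in a bundle definition (illustrative, not exhaustive).
READ_ONLY_FIELDS = {"job_id", "created_time", "creator_user_name", "run_as_user_name"}


def to_bundle_resource(job_json: dict[str, Any], resource_key: str) -> dict[str, Any]:
    """Wrap a job's settings under resources.jobs.<resource_key>."""
    # Jobs API "get" responses nest the definition under "settings";
    # a bare settings object is used as-is.
    settings = job_json.get("settings", job_json)
    job_def = {k: v for k, v in settings.items() if k not in READ_ONLY_FIELDS}
    return {"resources": {"jobs": {resource_key: job_def}}}


if __name__ == "__main__":
    # Hypothetical JSON copied from the console for an existing job.
    copied = {
        "job_id": 123,
        "creator_user_name": "someone@example.com",
        "settings": {
            "name": "daily_ingest",
            "max_concurrent_runs": 1,
            "tasks": [
                {"task_key": "ingest", "notebook_task": {"notebook_path": "/Workspace/ingest"}}
            ],
        },
    }
    # JSON is valid YAML flow syntax, so this output can be pasted into a
    # bundle file or converted to block-style YAML with any YAML tool.
    print(json.dumps(to_bundle_resource(copied, "daily_ingest"), indent=2))
```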

💡 Coupling simplifies the deployment process, but workflow-only changes require additional setup in the CI/CD pipeline to skip source-code steps such as testing and releasing.
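One way to handle workflow-only changes is to inspect the changed files and pick a shorter pipeline when only bundle definitions changed. The sketch below is tool-agnostic and assumes a hypothetical layout where job definitions live in `databricks.yml` and `resources/`. The step names are placeholders: "validate" and "deploy" would map to `databricks bundle validate` and `databricks bundle deploy`, while "test", "build", and "release" stand for your source-code steps. Many CI systems can express the same rule natively, for example with path filters in GitHub Actions.

```python
import subprocess
from fnmatch import fnmatch

# Hypothetical layout: bundle/job definitions in databricks.yml and resources/,
# application code elsewhere (e.g. src/). fnmatch's "*" also matches "/",
# so "resources/*" covers nested files too.
WORKFLOW_ONLY_PATTERNS = ["databricks.yml", "resources/*"]

FULL_PIPELINE = ["test", "build", "release", "validate", "deploy"]
WORKFLOW_PIPELINE = ["validate", "deploy"]


def is_workflow_only(changed_files: list[str]) -> bool:
    """True if there are changes and every one is a workflow/bundle definition."""
    return bool(changed_files) and all(
        any(fnmatch(path, pattern) for pattern in WORKFLOW_ONLY_PATTERNS)
        for path in changed_files
    )


def pipeline_steps(changed_files: list[str]) -> list[str]:
    """Choose CI steps; an empty diff falls back to the full pipeline to be safe."""
    if is_workflow_only(changed_files):
        return list(WORKFLOW_PIPELINE)
    return list(FULL_PIPELINE)


def changed_files_from_git(base_ref: str = "origin/main") -> list[str]:
    """List files changed on the current branch relative to base_ref."""
    result = subprocess.run(
        ["git", "diff", "--name-only", f"{base_ref}...HEAD"],
        capture_output=True,
        text=True,
        check=True,
    )
    return [line for line in result.stdout.splitlines() if line]


if __name__ == "__main__":
    print(pipeline_steps(changed_files_from_git()))
```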

In our case, we simply created new workflows with DAB and removed the old ones from Terraform. If you want to keep the existing workflows to preserve their job IDs and run history, you can use a two-step process:

Manually bind the existing workflow to the bundle (see the bind command docs).

Manually remove the workflow from the Terraform state before removing it from the Terraform repository, so that Terraform does not delete the job.
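The two steps correspond to two CLI calls: `databricks bundle deployment bind` (resource key from the bundle, then the existing job ID) and `terraform state rm`, which makes Terraform forget a resource without destroying it. The Python sketch below only builds and prints these commands by default. The resource key, job ID, Terraform address, and target name are hypothetical, and `bind` may prompt for confirmation when run interactively.

```python
import shlex
import subprocess


def migration_commands(resource_key: str, job_id: int, tf_address: str, target: str) -> list[list[str]]:
    """Build the two CLI calls for moving one existing job from Terraform to DAB."""
    return [
        # Step 1: attach the existing job (by ID) to the bundle resource key, so the
        # next `databricks bundle deploy` updates it instead of creating a new job.
        # Run from the bundle root.
        ["databricks", "bundle", "deployment", "bind", resource_key, str(job_id), "--target", target],
        # Step 2: make Terraform forget the job without destroying it.
        # Run from the Terraform working directory, then delete the resource block.
        ["terraform", "state", "rm", tf_address],
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False.

    Note: this sketch runs everything in the current directory, so adjust the
    working directory for each step before disabling dry_run.
    """
    for cmd in commands:
        print("$", shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical values: bundle resource key, job ID, Terraform resource address.
    cmds = migration_commands("daily_ingest", 123456789, "databricks_job.daily_ingest", target="prod")
    run(cmds, dry_run=True)
```

Only after both steps succeed is it safe to delete the job's resource block from the Terraform repository; otherwise the next `terraform apply` would try to destroy the job.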

I would definitely recommend considering DAB over Terraform for workflows: it is easier to deploy and maintain, and it requires less specialized technical knowledge.

Asset Bundles are not limited to workflows; they also support general-purpose clusters, notebooks, Delta Live Tables pipelines, and much more (read the article here).

📖 For recent releases, see the Databricks Asset Bundles feature release notes.

If you'd like to share your own experience, please leave a comment.