Self Serve Data Engineering Pipelines

How to build self serve pipelines for data scientists and analysts.

The engineering landscape is always changing, and recently it has been moving towards enabling others through self serve tooling. Looking at software engineering more broadly, numerous tools already follow this approach. One recent successful example is Platform Engineering, where the goal is to build a platform that lets teams self serve their needs through Infrastructure as Code (e.g. Terraform). This has proved to be a big unblocker for companies in the long run. Without it, the process depends heavily on DevOps, and a lean DevOps team will struggle to accommodate every request.

A similar approach can be adopted for data pipelines. As someone once said, Data Engineers should be writing frameworks: a pipeline can be treated as a framework, and its operation can be handed over to the domain specific data teams. This is debatable, and the goal is to find the right balance. Not every pipeline can follow this model; it depends heavily on the design and requirements.

The main idea is to enable teams to take full ownership and control with the help of tutorials, workshops and documentation. Once this is unlocked, it can have a compounding impact across the company.

In this article, we will cover a self serve pipeline architecture and will dive deep into what functionality should be considered to make it easy to use for the end users. Typical end users of such pipelines are Data Scientists, Data Analysts or sometimes even Data Engineers.

Pipeline Design & Requirements

A self serve pipeline design should meet some basic criteria; otherwise it could end up as an over-engineered solution.

Building one pipeline per source is very common. This works well when standardizing transformations is hard and the logic is heavily customized per source. However, consider the following scenarios:

You have multiple sources but the same datasets

Your sources have a common or similar schema

Your sources have somewhat similar business logic

Your sources increase rapidly (a per-DAG approach will be too hard to maintain)

In these cases, a single-pipeline approach is ideal, as it is easier to maintain and scale. It can be driven by a config and turned into a self serve pipeline using the concurrent runs and dynamic override abilities provided by orchestrators like Airflow. We will cover this in detail in the next sections.

Concurrency plays a vital role: without it, runs may end up waiting in a queue, depending on how long each one takes.

Architecture

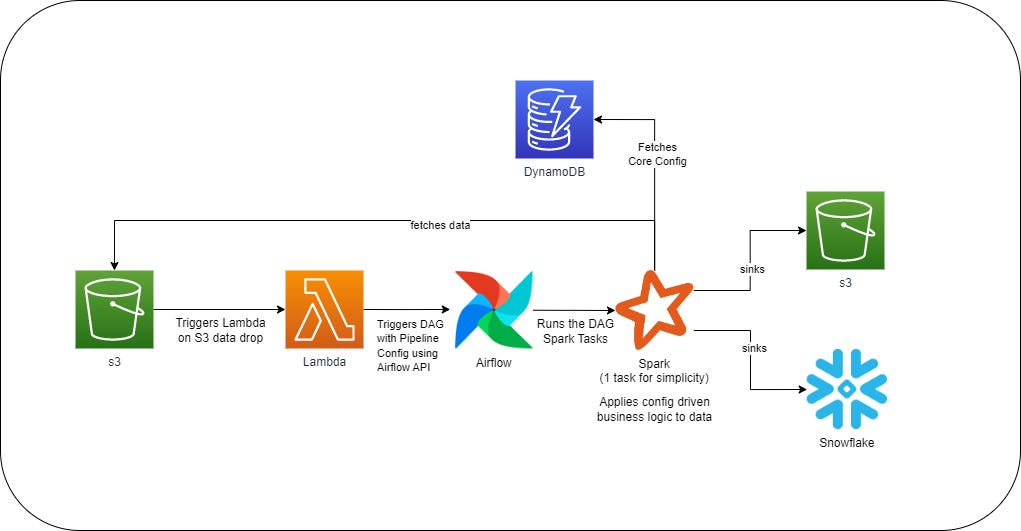

The diagram above shows a very high level self serve pipeline architecture built with modern data tools:

S3: For raw and processed data storage

AWS Lambda: For pipeline trigger

Airflow: For orchestration

Spark: For data processing

DynamoDB: For storing core config (Business Logic)

Snowflake: For processed data storage

Functionality

Instead of looking at it from the components' perspective, I will dive in from the functionality needed for self serve pipelines.

Pipeline Triggers

Triggering a pipeline externally requires some kind of endpoint. Airflow provides a REST API endpoint that can be used to trigger a DAG.

Triggering in different environments:

For production use cases, AWS Lambda can play an important role in automating workflows, such as triggering a pipeline on a file drop. (Airflow Sensors are challenging to use with dynamic pipelines.)

For Data Science experimentation, the pipeline can be triggered directly from Jupyter Notebooks.

For Dev, the pipeline can be triggered from the UI or from a local machine via the REST API.

Airflow can be triggered like this:

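    # AIRFLOW_USER, AIRFLOW_PASSWORD, ENDPOINT_URL and DAG_ID are expected to be set in the environment
    curl -H "Content-Type: application/json" \
      -H "Accept: application/json" \
      -X POST \
      --user "${AIRFLOW_USER}:${AIRFLOW_PASSWORD}" \
      "${ENDPOINT_URL}/api/v1/dags/${DAG_ID}/dagRuns" \
      -d '{"conf": {}}'

Here, conf is the pipeline config, which we will discuss in the next section.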
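For triggering from a Jupyter Notebook or a script, the same request can be sketched in Python using only the standard library. This is a minimal sketch that assumes basic authentication is enabled on the Airflow API, as in the curl call; the function names `build_dag_run_request` and `trigger_dag` are hypothetical helpers, not part of Airflow.

```python
import base64
import json
import urllib.request


def build_dag_run_request(endpoint_url, dag_id, user, password, conf):
    """Build (but do not send) the POST request that creates a DAG run."""
    url = f"{endpoint_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    # Basic auth header, equivalent to curl's --user flag.
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        data=json.dumps({"conf": conf}).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


def trigger_dag(request, timeout=30):
    """Send the request and return Airflow's JSON response as a dict."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Splitting request construction from sending keeps the payload easy to inspect in a notebook before anything is submitted.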

Read more: Airflow REST API Docs

Pipeline Configs

Having a single, dynamic pipeline means we need the ability to override certain aspects of the pipeline at runtime (upon trigger). This is possible only if the orchestrator supports it. In our case, Airflow supports passing in DAG run configs, which can be seen per run in the Airflow UI (DAG Runs page).

Typical parameters that need to be overridden per pipeline run are:

Source, e.g. DataLake (S3) or DataWarehouse (Snowflake)

Extra Arguments, e.g. passing trigger date, source identifiers, variables needed in pipeline

Core Configs, with multiple options (discussed later)

Destination, e.g. DataLake (S3) or DataWarehouse (Snowflake)

This could be further simplified and abstracted with an additional layer on top, for example by providing the ability to replace a complete task's code for complex processing, or even the whole task Docker image if possible.
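As an illustration, the run-level parameters listed above could be modeled and validated before being sent as the `conf` payload. This is only a sketch: the `RunConfig` class, its field names and the allowed values `"s3"` and `"snowflake"` are assumptions made for the example, not a fixed contract.

```python
from dataclasses import asdict, dataclass, field

# Assumption: the pipeline only reads from and writes to these two systems.
ALLOWED_LOCATIONS = {"s3", "snowflake"}


@dataclass
class RunConfig:
    source: str        # where the run reads from
    destination: str   # where the run writes to
    core_config: str   # reference to the core config, e.g. a path or source identifier
    extra_args: dict = field(default_factory=dict)  # trigger date, variables, etc.

    def __post_init__(self):
        # Fail early, before anything is submitted to the orchestrator.
        for name in ("source", "destination"):
            value = getattr(self, name)
            if value not in ALLOWED_LOCATIONS:
                raise ValueError(
                    f"{name} must be one of {sorted(ALLOWED_LOCATIONS)}, got {value!r}"
                )

    def to_conf(self):
        """Return a plain dict suitable for the "conf" field of a DAG run."""
        return asdict(self)
```

Validating on the client side gives pipeline users an immediate error instead of a failed run.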

Core Configs

A self serve pipeline has to be config driven, because the pipeline users will be making changes to business logic involving schemas, transformations, mappings, aggregations and data quality. The pipeline operators are in most cases Data Scientists, who are experts in the data and domain and are able to decide on business logic.

In the initial phase, Data Engineers should take this requirement and build a robust, config driven solution and data models that are extensible and easy to operate. One part of this solution is the config format and storage. These configs can be kept as YAML or JSON files in a separate repository, decoupled from the pipeline code. A few options for storing configs are:

S3 as files

Key-Value Store such as DynamoDB

There are a few ways to handle a config:

Pass the config as part of the Airflow pipeline (this can become cluttered and hard to maintain).

Pass the config path and read it in the Spark jobs.

Pass a source identifier and load the config within the Spark jobs.
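The three options above could be resolved by a single helper inside the job. The sketch below is an assumption-heavy illustration: the keys `core_config`, `core_config_path` and `source_id` are hypothetical names, the path is read as a local JSON file for simplicity, and `lookup_by_source_id` stands in for whatever store (for example DynamoDB) actually holds the configs.

```python
import json
from pathlib import Path


def resolve_core_config(conf, lookup_by_source_id=None):
    """Return the core config dict for a run.

    Checks, in order: an inline "core_config" dict, a "core_config_path"
    pointing to a JSON file, and a "source_id" resolved via a lookup callable.
    """
    if isinstance(conf.get("core_config"), dict):
        return conf["core_config"]
    if "core_config_path" in conf:
        text = Path(conf["core_config_path"]).read_text(encoding="utf-8")
        return json.loads(text)
    if "source_id" in conf:
        if lookup_by_source_id is None:
            raise ValueError("source_id given but no lookup function provided")
        return lookup_by_source_id(conf["source_id"])
    raise KeyError("conf has no core_config, core_config_path or source_id")
```

Passing the lookup as a callable keeps the helper independent of the storage backend and easy to test with an in-memory dict.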

Let's dive into some standard config types with examples:

Schema Config

Config to handle the destination schema and types, making it easy to add or remove columns.

    columns:
      - name: <column_name>
        type: <data_type>

Transformation Config

Config to handle core logic, transformation and mapping, or even aggregations and conditional column generations.

    column_mapping:
      <given_column>: <mapped_column>
    value_mapping:
      <column_name>:
        <given_value>: <mapped_value>
    column_generations:
      <column_name>: <column_name_A> + <column_name_B>
      <column_name>: <if/else> ...

The level of detail depends on how much control you want to give via the config. Aggregations and conditional column generations are quite challenging to standardize.
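To make the idea concrete, here is a small sketch of how such a transformation config could be applied. It uses plain Python rows instead of Spark to stay self-contained, and it assumes that a generated column is expressed as a list of columns to sum, which is a simplification of the `A + B` form. The function name `apply_transformations` is hypothetical.

```python
def apply_transformations(rows, config):
    """Apply column renames, value mappings and generated columns to rows.

    `rows` is a list of dicts; the input rows are not modified.
    """
    column_mapping = config.get("column_mapping", {})
    value_mapping = config.get("value_mapping", {})
    column_generations = config.get("column_generations", {})

    result = []
    for row in rows:
        # 1. Rename columns; unmapped columns keep their original name.
        new_row = {column_mapping.get(name, name): value for name, value in row.items()}
        # 2. Replace values; keys refer to column names after renaming.
        for column, mapping in value_mapping.items():
            if column in new_row:
                new_row[column] = mapping.get(new_row[column], new_row[column])
        # 3. Generate columns as the sum of the listed operand columns.
        for target, operands in column_generations.items():
            new_row[target] = sum(new_row[name] for name in operands)
        result.append(new_row)
    return result


example_config = {
    "column_mapping": {"cust_id": "customer_id"},
    "value_mapping": {"country": {"UK": "GB"}},
    "column_generations": {"total": ["net", "tax"]},
}
```

In a real pipeline the same three steps would map onto the processing engine's own rename, replace and derived-column operations.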

Data Quality Config

Configs to handle column level checks, row level filtering, conditional checks etc.

    columns:
      - name: <column_name>
        validations:
          - <validation_name>  # e.g. should_not_be_null
          - <if/else>
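A config like this can be evaluated with a small registry that maps validation names to check functions. The sketch below assumes each column entry is a mapping with `name` and `validations` keys; `should_not_be_null` comes from the example above, while `should_be_positive` and the function name `validate_rows` are hypothetical additions for illustration.

```python
# Registry of named checks; each takes a single value and returns True if it passes.
VALIDATIONS = {
    "should_not_be_null": lambda value: value is not None,
    "should_be_positive": lambda value: value is not None and value > 0,
}


def validate_rows(rows, config):
    """Return a list of (row_index, column_name, validation_name) failures."""
    failures = []
    for index, row in enumerate(rows):
        for column in config["columns"]:
            for validation_name in column.get("validations", []):
                check = VALIDATIONS[validation_name]
                # A missing column is treated the same as a null value.
                if not check(row.get(column["name"])):
                    failures.append((index, column["name"], validation_name))
    return failures
```

Returning failures instead of raising lets the pipeline decide whether to filter bad rows, quarantine them or fail the run.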

I will be writing a blog post on a config driven Data Quality Framework on top of Great Expectations in the near future. Stay Tuned!

Depending on the design of the pipeline and tables, these configs can be per source, per table, or combined into one per pipeline.

Furthermore, it is very important to have a robust system underneath the configs so that changes can be accommodated easily.

Data & Config Processing

For data and config processing, the choice of framework and language is very important. Picking the wrong language can make things challenging or even impossible; SQL, for example, is great for transformations but very hard to use with dynamic pipelines. A general-purpose programming language is a good fit for a dynamic, robust, extensible solution that is driven through a config and integrates with external systems like DynamoDB.

Python and Scala are common choices among Data Engineers.

Depending on scale, a proper distributed compute engine may be required as well. Apache Spark is a good candidate for processing massive amounts of data, especially when performing aggregations, while Apache Beam and Flink are great for row level transformations. At small scale, however, distributed computing would be overkill.

Read More: Spark Best Practices

Similarly, on the Lambda side, a lightweight solution that prepares a pipeline config and submits it to Airflow should be good enough.
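A lightweight Lambda along these lines might look like the sketch below. It assumes the function is invoked by an S3 "object created" notification and that the first path segment of the object key identifies the source; both the key layout and the names `build_conf_from_s3_event` and `make_handler` are hypothetical. The `submit` callable stands in for whatever actually calls the Airflow REST API.

```python
import urllib.parse


def build_conf_from_s3_event(event):
    """Turn an S3 object-created event into a pipeline conf dict."""
    record = event["Records"][0]
    bucket = record["s3"]["bucket"]["name"]
    # S3 URL-encodes object keys in event notifications (spaces arrive as "+").
    key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
    # Assumption: keys look like "<source_id>/...", so the first segment is the source.
    source_id = key.split("/", 1)[0]
    return {
        "source": "s3",
        "source_id": source_id,
        "input_path": f"s3://{bucket}/{key}",
        "trigger_time": record["eventTime"],
    }


def make_handler(submit):
    """Create a Lambda handler that submits the prepared conf via `submit`."""
    def handler(event, context):
        conf = build_conf_from_s3_event(event)
        submit(conf)
        return {"submitted": conf}
    return handler
```

Injecting `submit` keeps the handler free of networking code, so the conf preparation can be tested locally with a sample event.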

Conclusion

This self serve approach frees up Data Engineering time and lets engineers focus on more impactful projects and pipelines (depending on the company's definition of a Data Engineer). It works for use cases that are easy to abstract through configs.

The goal of Data Engineers shifts from handling pipeline operations to providing technical support and pipeline enhancements.

It still makes sense for a Data Engineer to own the operation of a pipeline that involves custom, advanced logic specific to each dataset and requires technical expertise.

I hope this was interesting. Share your pipeline experiences in the comments, especially if you are using a different orchestrator like Mage.