Securely Share and Automate File Transfers with AWS Transfer Family & Terraform

Learn to deploy a secure, automated SFTP server with AWS Transfer Family and Terraform. Set up prefix-restricted users, authenticate with SSH keys (with an MFA option), and use workflows to automate post-upload processing.

At some point in their career, most data engineers will work with SFTP servers, either fetching files from a vendor-provided SFTP server or hosting one for vendors. Managing and securing these transfers can be challenging, especially at scale. AWS Transfer Family simplifies this by providing a fully managed, scalable SFTP service.

Today, we’ll explore how to set up and secure an SFTP server using AWS Transfer Family. We’ll cover:

Deploying an SFTP server

Setting up users with read and write access restricted to their own prefix

Securing onboarding with SSH keys (with a pointer to an MFA approach)

Leveraging Transfer Family Workflows for automation

By the end, you’ll have a secure, scalable, and automated solution for managing file transfers efficiently.

⭐ Transfer Family can store SFTP files in either Amazon S3 or Amazon EFS; this guide uses S3.

Prerequisites

Before we dive into AWS Transfer Family, create the following:

Create an S3 bucket for storing the files

Create a Lambda function for downstream processing

Create an IAM role with access to S3 and Lambda for the workflow

Create an IAM role with restricted S3 access for the SFTP user

Restrict access at the prefix level, e.g. <bucket>/<username>/
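A minimal Terraform sketch of the S3 bucket and the prefix-restricted SFTP user role might look like the following. The bucket name `example-sftp-landing-bucket`, the user name `vendor_a`, and the role and policy names are hypothetical placeholders, and the policy is one way to express prefix-level restriction, not the only one. The role's ARN is what the user resource later expects as `<sftp_user_iam_role_arn>`.

```hcl
# Hypothetical user name; replace with the real SFTP user.
locals {
  sftp_username = "vendor_a"
}

# Hypothetical bucket name for the SFTP landing bucket.
resource "aws_s3_bucket" "sftp_bucket" {
  bucket = "example-sftp-landing-bucket"
}

# Trust policy: only AWS Transfer Family may assume this role.
data "aws_iam_policy_document" "transfer_assume" {
  statement {
    actions = ["sts:AssumeRole"]
    principals {
      type        = "Service"
      identifiers = ["transfer.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "sftp_user_role" {
  name               = "sftp-user-${local.sftp_username}"
  assume_role_policy = data.aws_iam_policy_document.transfer_assume.json
}

data "aws_iam_policy_document" "sftp_user_access" {
  # Listing is allowed only under the user's own prefix.
  statement {
    actions   = ["s3:ListBucket"]
    resources = [aws_s3_bucket.sftp_bucket.arn]
    condition {
      test     = "StringLike"
      variable = "s3:prefix"
      values   = ["${local.sftp_username}/", "${local.sftp_username}/*"]
    }
  }

  # Object-level read/write is confined to <bucket>/<username>/.
  statement {
    actions   = ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"]
    resources = ["${aws_s3_bucket.sftp_bucket.arn}/${local.sftp_username}/*"]
  }
}

resource "aws_iam_role_policy" "sftp_user_access" {
  name   = "sftp-user-${local.sftp_username}-s3"
  role   = aws_iam_role.sftp_user_role.id
  policy = data.aws_iam_policy_document.sftp_user_access.json
}
```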

Creating SFTP Server

Create a service-managed server with a public endpoint and an upload workflow attached.

```hcl
resource "aws_transfer_server" "sftp_server" {
  domain                 = "S3"
  identity_provider_type = "SERVICE_MANAGED"
  endpoint_type          = "PUBLIC"
  protocols              = ["SFTP"]

  # Run the workflow on every completed upload.
  workflow_details {
    on_upload {
      execution_role = "<workflow_iam_role_arn>"
      workflow_id    = aws_transfer_workflow.sftp_workflow.id
    }
  }
}
```

`<workflow_iam_role_arn>`: Provide the ARN of the role that has S3 and Lambda access.
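The workflow execution role could be sketched as below, assuming the three-step workflow used later in this article (copy, tag, then a custom Lambda step). The bucket ARNs, account ID, and function ARN in `locals` are hypothetical placeholders; `aws_iam_role.workflow_role.arn` is what would replace `<workflow_iam_role_arn>`.

```hcl
# Hypothetical ARNs; replace with your own buckets and function.
locals {
  sftp_bucket_arn     = "arn:aws:s3:::example-sftp-landing-bucket"
  internal_bucket_arn = "arn:aws:s3:::example-internal-bucket"
  lambda_function_arn = "arn:aws:lambda:us-east-1:123456789012:function:example-downstream"
}

resource "aws_iam_role" "workflow_role" {
  name = "sftp-workflow-execution"
  # Transfer Family assumes this role when it runs the workflow.
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Action    = "sts:AssumeRole"
      Principal = { Service = "transfer.amazonaws.com" }
    }]
  })
}

resource "aws_iam_role_policy" "workflow_access" {
  name = "sftp-workflow-access"
  role = aws_iam_role.workflow_role.id
  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        # COPY step: read the uploaded file and its tags from the SFTP bucket.
        Effect   = "Allow"
        Action   = ["s3:GetObject", "s3:GetObjectTagging", "s3:ListBucket"]
        Resource = [local.sftp_bucket_arn, "${local.sftp_bucket_arn}/*"]
      },
      {
        # COPY and TAG steps: write and tag objects in the internal bucket.
        Effect   = "Allow"
        Action   = ["s3:PutObject", "s3:PutObjectTagging", "s3:ListBucket"]
        Resource = [local.internal_bucket_arn, "${local.internal_bucket_arn}/*"]
      },
      {
        # CUSTOM step: invoke the downstream Lambda function.
        Effect   = "Allow"
        Action   = "lambda:InvokeFunction"
        Resource = local.lambda_function_arn
      }
    ]
  })
}
```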

Creating SFTP User with SSH Access

Create a user with a logical home directory mapping. The mapping confines what the user sees to their own S3 prefix, while the IAM role limits what they can do there.

```hcl
resource "aws_transfer_user" "sftp_user" {
  server_id           = aws_transfer_server.sftp_server.id
  user_name           = "<username>"
  role                = "<sftp_user_iam_role_arn>"
  home_directory_type = "LOGICAL"

  # The user sees "/test", which maps to their own prefix in the bucket.
  home_directory_mappings {
    entry  = "/test"
    target = "/<bucket_name>/<username>/"
  }
}

resource "aws_transfer_ssh_key" "sftp_ssh" {
  server_id = aws_transfer_server.sftp_server.id
  user_name = aws_transfer_user.sftp_user.user_name
  body      = "<ssh_public_key>"
}
```

`<username>`: Provide the user name.

`<sftp_user_iam_role_arn>`: Provide the ARN of the role with restricted S3 access.

`<bucket_name>`: Provide the bucket name.

`<ssh_public_key>`: Provide the public key (generate one using this guide). In the real world, the vendor generates the key pair on their end and sends over only the public key.
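For a quick test before a vendor sends their own key, one option is to let Terraform generate a throwaway key pair with the `hashicorp/tls` and `hashicorp/local` providers, as sketched below. This is an assumption for testing only: the private key ends up in Terraform state, so it is not suitable for real vendor onboarding. The resource names and the `sftp_test_key.pem` file name are hypothetical; the public key is registered as an additional key on the same user.

```hcl
# Testing only: the generated private key is stored in Terraform state.
resource "tls_private_key" "sftp_test" {
  algorithm = "RSA"
  rsa_bits  = 4096
}

# Write the private key locally so an SFTP client can use it with -i.
resource "local_sensitive_file" "sftp_test_private_key" {
  content         = tls_private_key.sftp_test.private_key_pem
  filename        = "${path.module}/sftp_test_key.pem"
  file_permission = "0600"
}

# Register the OpenSSH-formatted public key for the existing user.
resource "aws_transfer_ssh_key" "sftp_ssh_test" {
  server_id = aws_transfer_server.sftp_server.id
  user_name = aws_transfer_user.sftp_user.user_name
  body      = trimspace(tls_private_key.sftp_test.public_key_openssh)
}
```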

For advanced authentication mechanisms such as multi-factor authentication (MFA), AWS recommends a custom identity provider backed by a Lambda function.

📖 Recommended Reading: Implement multi-factor authentication based managed file transfer using AWS Transfer Family and AWS Secrets Manager
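On the Terraform side, switching to a Lambda-based identity provider is roughly a matter of changing `identity_provider_type` and granting Transfer Family permission to invoke the function, as in this sketch. It assumes the authentication Lambda (including the MFA logic from the reading above) already exists and is passed in as the hypothetical variable `auth_lambda_arn`. With this provider type, users are resolved by the function rather than created with `aws_transfer_user`.

```hcl
# Hypothetical input: ARN of an existing authentication Lambda function.
variable "auth_lambda_arn" {
  type        = string
  description = "ARN of the custom identity provider Lambda function"
}

resource "aws_transfer_server" "sftp_server_mfa" {
  domain        = "S3"
  endpoint_type = "PUBLIC"
  protocols     = ["SFTP"]

  # Transfer Family calls this function to authenticate each login attempt.
  identity_provider_type = "AWS_LAMBDA"
  function               = var.auth_lambda_arn
}

# Allow Transfer Family, scoped to this server, to invoke the function.
resource "aws_lambda_permission" "allow_transfer" {
  statement_id  = "AllowTransferFamilyInvoke"
  action        = "lambda:InvokeFunction"
  function_name = var.auth_lambda_arn
  principal     = "transfer.amazonaws.com"
  source_arn    = aws_transfer_server.sftp_server_mfa.arn
}
```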

Test connection

With key-based authentication, logging in to the SFTP server is straightforward. Transfer Family does not provide shell access, so connect with an SFTP client such as `sftp` rather than `ssh`:

```shell
sftp -i <ssh_private_key> <username>@<host_address>
```

`<ssh_private_key>`: Provide the path to the private key generated in the previous section.

`<username>`: Provide the user name from the previous section.

`<host_address>`: Provide the server endpoint, which you can find in the AWS Transfer Family console.
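Instead of copying the host address from the console, you could expose it as a Terraform output. This small sketch uses the server's exported `endpoint` attribute; the output name `sftp_host_address` is hypothetical.

```hcl
# Hostname to use as <host_address>, printed after `terraform apply`.
output "sftp_host_address" {
  value = aws_transfer_server.sftp_server.endpoint
}
```

After applying, `terraform output -raw sftp_host_address` prints just the hostname, which can be pasted into the `sftp` command.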

For non-technical users, Transfer Family offers a managed, web-based interface for file transfers. Explore more here.

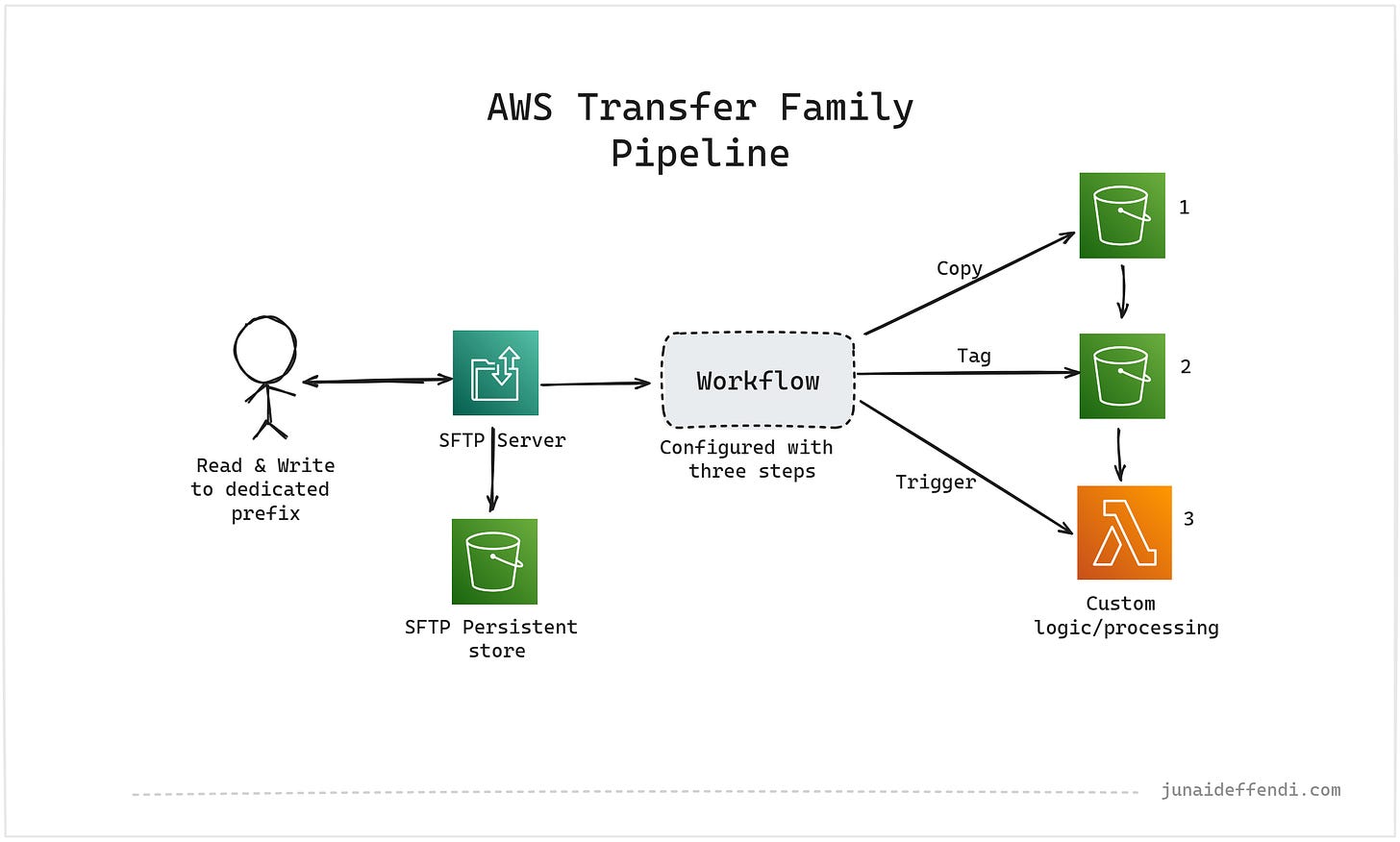

Setting up Workflows to trigger downstream jobs

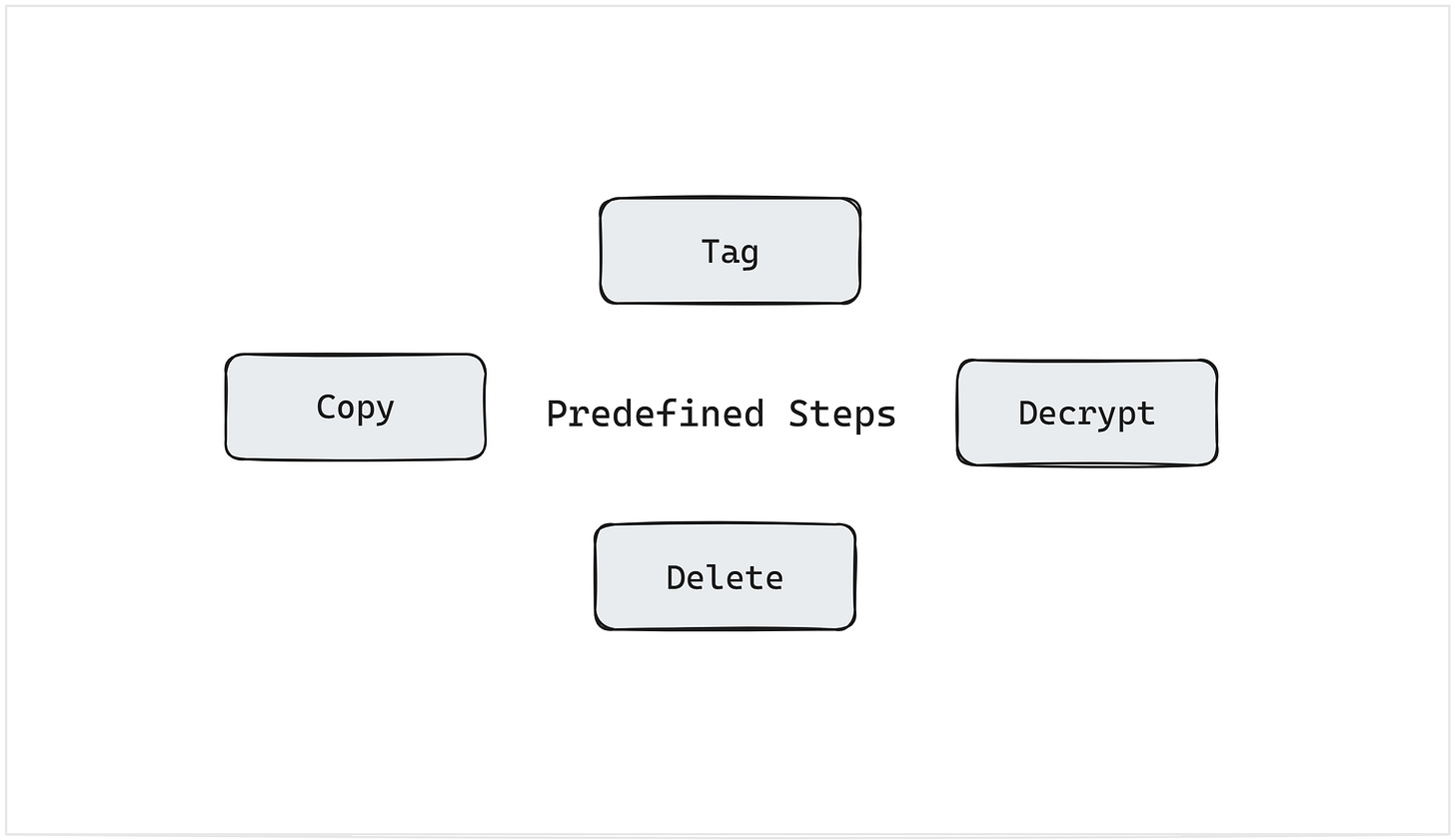

Workflows are preconfigured series of steps that are part of Transfer Family. They let you run fully managed processing, such as copying, tagging, decrypting, or deleting files, or invoking a custom Lambda step, on files as soon as they arrive over SFTP.

In our case, the workflow runs three steps:

Copy the file to an internal S3 bucket with limited access. This is recommended because the SFTP-backed bucket is exposed externally through SFTP.

Tag the S3 object according to your use case.

Trigger a Lambda function for downstream processing and custom logic, e.g. sending an email notification.

📖 Recommended Reading: How to return the response to workflow from Lambda Function
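Because the custom step waits for the Lambda function to report back via `SendWorkflowStepState`, the function's own execution role needs permission to call that API. A minimal sketch, assuming the role's name is passed in as the hypothetical variable `downstream_lambda_role_name`:

```hcl
# Hypothetical input: name of the downstream Lambda's execution role.
variable "downstream_lambda_role_name" {
  type = string
}

# Lets the function report SUCCESS or FAILURE back to the workflow.
# Resource is broad ("*") for simplicity in this sketch.
resource "aws_iam_role_policy" "lambda_workflow_callback" {
  name = "send-workflow-step-state"
  role = var.downstream_lambda_role_name
  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect   = "Allow"
      Action   = "transfer:SendWorkflowStepState"
      Resource = "*"
    }]
  })
}
```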

```hcl
resource "aws_transfer_workflow" "sftp_workflow" {
  # Step 1: copy the uploaded file to the internal bucket.
  steps {
    type = "COPY"
    copy_step_details {
      destination_file_location {
        s3_file_location {
          bucket = "<bucket_name>"
          key    = "<prefix_path>"
        }
      }
      overwrite_existing = "TRUE"
    }
  }

  # Step 2: tag the copied object.
  steps {
    type = "TAG"
    tag_step_details {
      tags {
        key   = "<key>"
        value = "<value>"
      }
    }
  }

  # Step 3: invoke the downstream Lambda function (a CUSTOM step).
  steps {
    type = "CUSTOM"
    custom_step_details {
      target = "<lambda_function_arn>"
    }
  }
}
```

`<bucket_name>`: Provide the destination bucket name.

`<prefix_path>`: Provide the destination prefix path for the object (end it with `/` to copy the file into that folder under its original name).

`<key>` & `<value>`: Provide the key and value for tagging.

`<lambda_function_arn>`: Provide the ARN of the Lambda function.

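The first step references the originally uploaded file, while each subsequent step automatically references the output of the previous step. You can override this per step with `source_file_location`, e.g. `${original.file}` to act on the uploaded file instead of the copy.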
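As a sketch, a TAG step that tags the originally uploaded file rather than the copy could look like this fragment, placed inside the `aws_transfer_workflow` resource. The step name and the `status`/`received` tag are hypothetical. Note the `$${` escape: without it, Terraform would try to interpolate `${original.file}` itself instead of passing it through to Transfer Family. Tagging the original file also means the workflow role needs `s3:PutObjectTagging` on the SFTP bucket.

```hcl
# Fragment: goes inside resource "aws_transfer_workflow" "sftp_workflow".
steps {
  type = "TAG"
  tag_step_details {
    name = "tag-original-upload"
    # "$${" escapes Terraform interpolation; the service receives "${original.file}".
    source_file_location = "$${original.file}"
    tags {
      key   = "status"
      value = "received"
    }
  }
}
```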

Alternatively, you can skip the workflow setup and handle copying and tagging directly in your Lambda function, triggered via SNS when an object lands in S3. However, this approach runs outside Transfer Family.
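For comparison, the S3 to SNS to Lambda wiring for that alternative could be sketched as follows. The variables and the topic name are hypothetical, and the Lambda function and bucket are assumed to exist already; the copy and tagging logic would live inside the function.

```hcl
# Hypothetical inputs for existing resources.
variable "sftp_bucket_name" { type = string }
variable "sftp_bucket_arn" { type = string }
variable "lambda_function_arn" { type = string }

resource "aws_sns_topic" "sftp_uploads" {
  name = "sftp-upload-events"
}

# Let S3 publish to the topic, but only on behalf of the SFTP bucket.
resource "aws_sns_topic_policy" "allow_s3" {
  arn = aws_sns_topic.sftp_uploads.arn
  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Principal = { Service = "s3.amazonaws.com" }
      Action    = "SNS:Publish"
      Resource  = aws_sns_topic.sftp_uploads.arn
      Condition = { ArnLike = { "aws:SourceArn" = var.sftp_bucket_arn } }
    }]
  })
}

# Publish an event whenever a new object lands in the bucket.
resource "aws_s3_bucket_notification" "sftp_uploads" {
  bucket = var.sftp_bucket_name
  topic {
    topic_arn = aws_sns_topic.sftp_uploads.arn
    events    = ["s3:ObjectCreated:*"]
  }
  depends_on = [aws_sns_topic_policy.allow_s3]
}

# Deliver SNS messages to the Lambda function.
resource "aws_sns_topic_subscription" "lambda" {
  topic_arn = aws_sns_topic.sftp_uploads.arn
  protocol  = "lambda"
  endpoint  = var.lambda_function_arn
}

# Allow SNS (from this topic only) to invoke the function.
resource "aws_lambda_permission" "allow_sns" {
  statement_id  = "AllowSNSInvoke"
  action        = "lambda:InvokeFunction"
  function_name = var.lambda_function_arn
  principal     = "sns.amazonaws.com"
  source_arn    = aws_sns_topic.sftp_uploads.arn
}
```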

I hope you found this article useful. You've now seen how to build a secure, scalable, and automated solution for managing file transfers in the AWS cloud.