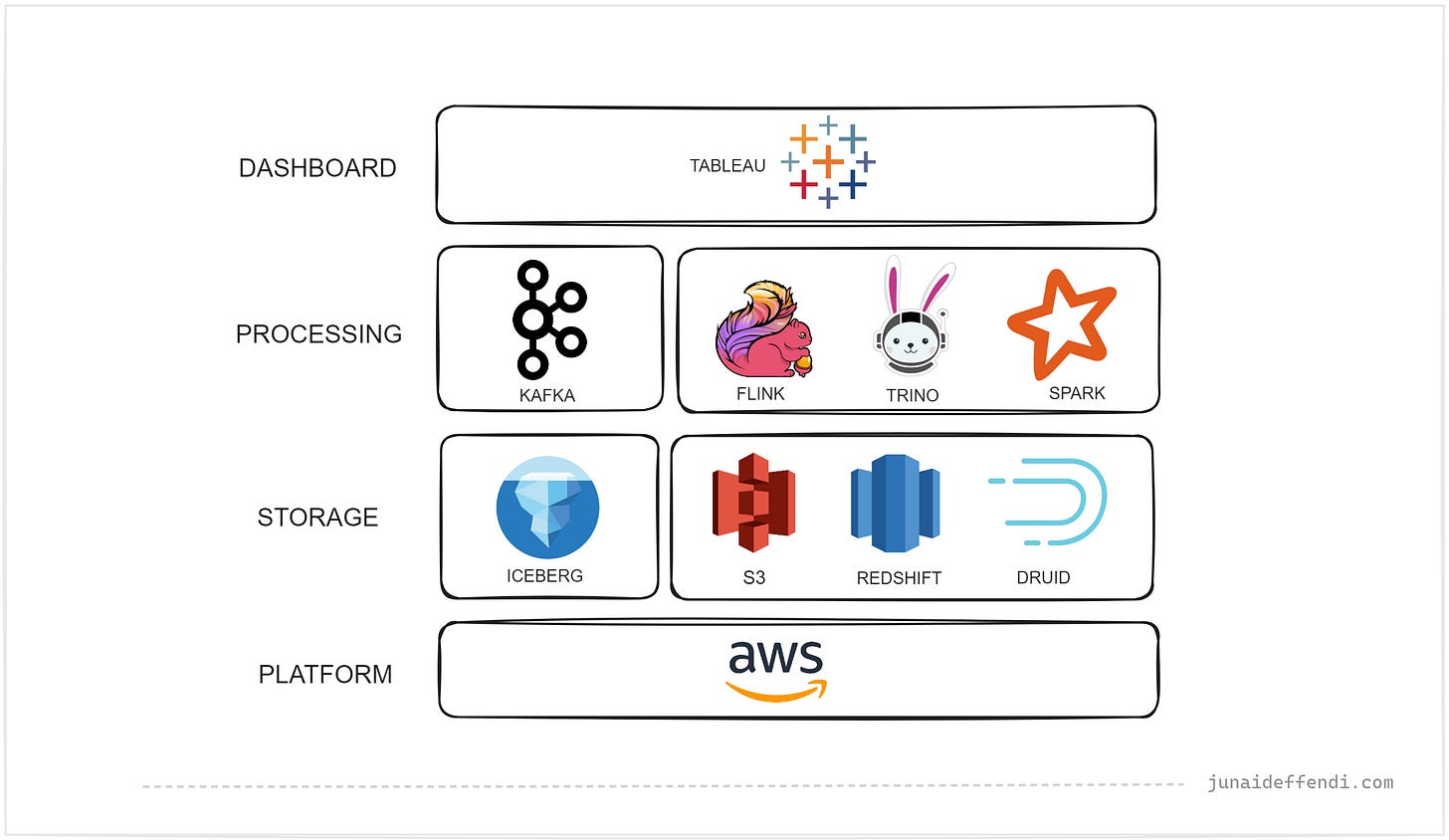

Netflix Data Tech Stack

Learn about the Data Tech Stack used by Netflix to process trillions of events every day.

Netflix handles data at massive scale, from event data in streams to data at rest in the warehouse. Netflix's data stack is pretty solid and mostly built on top of open-source solutions. According to the NetflixTechBlog, the stack processes trillions of data points every day, while data at rest is in the hundreds of petabytes. Today, let's dive into the tech stack from a Data Engineering perspective.

Content is based on the NetflixTechBlog and my conversations with Netflix Engineering Teams.

Let's take a high-level look at each component:

Platform

AWS

Netflix is one of the biggest customers of AWS. It uses AWS as its cloud platform and relies on many AWS services, from front end to back end and from online to offline systems.

Storage

Iceberg

Netflix uses the Iceberg open table format to bring ACID capabilities to the data lake, along with many other benefits such as time travel and data compaction.
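Time travel and safe compaction both come from the same idea: every commit produces a new, immutable snapshot of the table, and readers pick a snapshot instead of reading files in place. The sketch below is a toy model of that idea only. It is not Iceberg's API or metadata layout (real Iceberg tracks snapshots through metadata files, manifest lists and manifests), and `ToyTable`, `commit` and `files_as_of` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    timestamp_ms: int
    files: tuple  # data files visible in this snapshot; never mutated


@dataclass
class ToyTable:
    """Hypothetical snapshot-based table for illustration (not the Iceberg API)."""
    snapshots: list = field(default_factory=list)

    def commit(self, timestamp_ms, added=(), removed=()):
        # A commit derives a new file list from the current snapshot.
        # Older snapshots stay untouched, which is what enables time travel.
        current = set(self.snapshots[-1].files) if self.snapshots else set()
        new_files = tuple(sorted((current - set(removed)) | set(added)))
        snap = Snapshot(len(self.snapshots) + 1, timestamp_ms, new_files)
        self.snapshots.append(snap)
        return snap

    def files_as_of(self, timestamp_ms):
        # Time travel: read the latest snapshot committed at or before the timestamp.
        visible = [s for s in self.snapshots if s.timestamp_ms <= timestamp_ms]
        if not visible:
            raise ValueError("no snapshot at or before that time")
        return visible[-1].files


table = ToyTable()
table.commit(1000, added=["a.parquet"])
table.commit(2000, added=["b.parquet"])
# Compaction: replace two small files with one merged file in a single commit.
table.commit(3000, added=["ab.parquet"], removed=["a.parquet", "b.parquet"])
print(table.files_as_of(1500))  # ('a.parquet',)
print(table.files_as_of(3500))  # ('ab.parquet',)
```

Because compaction is just another commit, a reader pinned to an older snapshot keeps seeing a consistent set of files while the rewrite happens.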

S3

S3 is a core part of the Netflix tech stack; it is where most data ends up. S3 and Iceberg work together to provide a seamless Lakehouse architecture.

Redshift

For traditional data warehousing, Netflix relies on AWS Redshift, which is used not only for storage but also for querying data.

Druid

Netflix uses Druid for real-time analytical use cases. It connects seamlessly with Kafka to provide real-time insights. Read more about how Netflix leverages Druid.
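One reason Druid can answer real-time analytical queries quickly is ingestion-time rollup: rows that share a truncated timestamp and the same dimension values can be pre-aggregated as they arrive. The plain-Python sketch below illustrates that idea only; it is not a Druid ingestion spec, and the event fields (`ts`, `country`, `device`, `bytes`) and the one-minute granularity are hypothetical.

```python
from collections import defaultdict


def rollup(events, granularity_ms=60_000):
    """Toy rollup: group by (truncated time, dimensions) and aggregate metrics."""
    buckets = defaultdict(lambda: {"count": 0, "bytes": 0})
    for e in events:
        # Truncate the event time to the start of its time bucket.
        bucket_start = e["ts"] - e["ts"] % granularity_ms
        key = (bucket_start, e["country"], e["device"])
        buckets[key]["count"] += 1
        buckets[key]["bytes"] += e["bytes"]
    return dict(buckets)


events = [
    {"ts": 5_000, "country": "US", "device": "tv", "bytes": 100},
    {"ts": 20_000, "country": "BR", "device": "mobile", "bytes": 70},
    {"ts": 30_000, "country": "US", "device": "tv", "bytes": 50},
    {"ts": 65_000, "country": "US", "device": "tv", "bytes": 10},
]
rolled = rollup(events)
# Four raw events collapse into three pre-aggregated rows.
print(rolled)
```

Queries then scan the smaller, pre-aggregated rows instead of every raw event, at the cost of losing per-event detail below the chosen granularity.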

Processing

Kafka

Netflix's upstream architecture is event-driven, and to handle millions of events per second, Netflix uses open-source Kafka.
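Kafka scales to that volume by splitting each topic into partitions: append-only logs that producers write to and consumers read from by offset. Records with the same key land on the same partition, which keeps per-key ordering. The in-memory sketch below models only that idea and is not the Kafka client API. `ToyTopic` and the `member-42` key are hypothetical, and `zlib.crc32` stands in for Kafka's default murmur2-based key hashing.

```python
import zlib


class ToyTopic:
    """Hypothetical in-memory stand-in for a partitioned Kafka topic."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def produce(self, key, value):
        # Same key -> same partition, so events for one key stay ordered.
        # crc32 is a deterministic stand-in for Kafka's murmur2 partitioner.
        p = zlib.crc32(key.encode()) % len(self.partitions)
        self.partitions[p].append((key, value))
        offset = len(self.partitions[p]) - 1
        return p, offset

    def consume(self, partition, offset):
        # Consumers track their own position and read forward from an offset.
        return self.partitions[partition][offset:]


topic = ToyTopic(num_partitions=3)
p1, o1 = topic.produce("member-42", "play")
p2, o2 = topic.produce("member-42", "pause")
print(p1 == p2, o2 == o1 + 1)  # True True
print(topic.consume(p1, 0))  # [('member-42', 'play'), ('member-42', 'pause')]
```

Adding partitions spreads load across more brokers and consumers, which is how throughput grows, while ordering is only guaranteed within a single partition.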

Flink

Processing data from Kafka in real time calls for a stream processing engine such as Flink. Netflix uses open-source Flink for real-time data manipulation. Learn more: Data Movement at Netflix
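A typical real-time manipulation is counting events per key in fixed (tumbling) event-time windows, even when events arrive out of order. The sketch below is a plain-Python illustration of those semantics, loosely modeled on a bounded-out-of-orderness watermark; it is not the Flink API, and the function name, event shape `(timestamp_ms, key)` and parameters are hypothetical.

```python
from collections import defaultdict


def tumbling_windows(events, window_ms, max_out_of_orderness_ms=0):
    """Toy event-time tumbling windows with a simple watermark.

    Yields (window_start, key, count) once the watermark passes the window end.
    """
    open_windows = defaultdict(int)  # (window_start, key) -> count
    watermark = float("-inf")
    for ts, key in events:
        window_start = ts - ts % window_ms
        if window_start + window_ms <= watermark:
            continue  # late event: its window already fired, so this sketch drops it
        open_windows[(window_start, key)] += 1
        # The watermark trails the largest timestamp seen by the allowed disorder.
        watermark = max(watermark, ts - max_out_of_orderness_ms)
        # Fire every window whose end is at or before the watermark.
        for wk in sorted(k for k in open_windows if k[0] + window_ms <= watermark):
            yield wk[0], wk[1], open_windows.pop(wk)
    # End of input: flush windows that are still open.
    for wk in sorted(open_windows):
        yield wk[0], wk[1], open_windows[wk]


events = [(1_000, "play"), (4_000, "play"), (2_500, "pause"),
          (6_000, "play"), (12_000, "play"), (3_000, "play")]
results = list(tumbling_windows(events, window_ms=5_000, max_out_of_orderness_ms=1_000))
print(results)  # [(0, 'pause', 1), (0, 'play', 2), (5000, 'play', 1), (10000, 'play', 1)]
```

The out-of-order `pause` event is still counted because it arrives before the watermark closes its window, while the final `(3_000, "play")` event is too late and is dropped.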

Trino

Trino is used as the SQL layer on top of the Lakehouse. It gives data consumers the ability to write SQL that reads large-scale data directly from S3.

Spark

Netflix uses Spark for batch processing workloads; Spark batch jobs typically read from and write to the Lakehouse.

Dashboard

Tableau

Netflix is probably one of Tableau's biggest customers. The main use cases are dashboards and visualizations.

Wondering why there is no data orchestration tool? Netflix has built its own in-house data and ML workflow orchestrator, called Maestro.
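Maestro's own workflow definitions are not covered here. As a generic illustration of what any workflow orchestrator does at its core, the sketch below runs the steps of a dependency graph in order using Python's standard `graphlib` (Python 3.9+). The step names are hypothetical, and real orchestrators add scheduling, retries, parameters and distributed execution on top.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
workflow = {
    "ingest_events": set(),
    "build_sessions": {"ingest_events"},
    "daily_metrics": {"build_sessions"},
    "train_model": {"build_sessions"},
    "publish_dashboard": {"daily_metrics"},
}


def run_workflow(dag, run_step):
    """Run steps in dependency order; steps in one ready batch could run in parallel."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # also raises CycleError if the graph has a cycle
    order = []
    while sorter.is_active():
        for step in sorted(sorter.get_ready()):
            run_step(step)
            order.append(step)
            sorter.done(step)  # unlocks steps that depended on this one
    return order


order = run_workflow(workflow, run_step=lambda step: print("running", step))
```

Here `daily_metrics` and `train_model` become ready in the same batch, which is the point where an orchestrator could fan work out in parallel.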

Let me know in the comments if I missed anything.

Related Content:

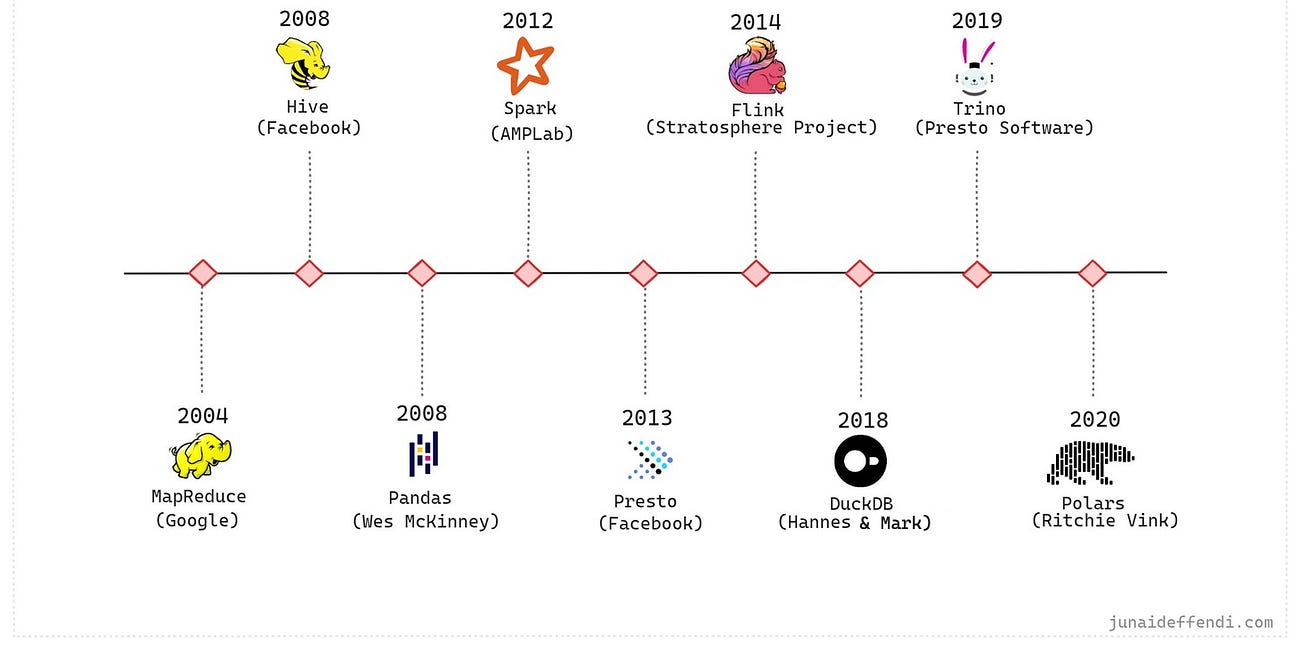

Data Processing in 21st Century

Lets talk about the open source data processing technology from the 21st century, covering distributed frameworks to single node libraries, mature and recent development. Before these technologies, the common technique to process data was to glue together custom solutions, e.g. scripting and piping was one approach as menti…
