I finally got time to try out Mage after hearing and seeing a lot of hype that it is going to replace Apache Airflow. I have been keeping an eye on this tool for quite a while, reading about it and following its contributors on platforms like LinkedIn.

In this article I am going to talk about my experience with Mage. I am not going to get into the weeds of the tool, but I will share my high-level experience as a Data Engineer who has used many tools in the past, and I will try to shed some light on the scalability aspect.

This was purely a personal project, so I could not test some of the advanced features; my opinion on those is based on reading.

We will be discussing:

What is Mage

Mage Features

Mage Scalability

Experience & Opinion

Conclusion & Feedback

Mage

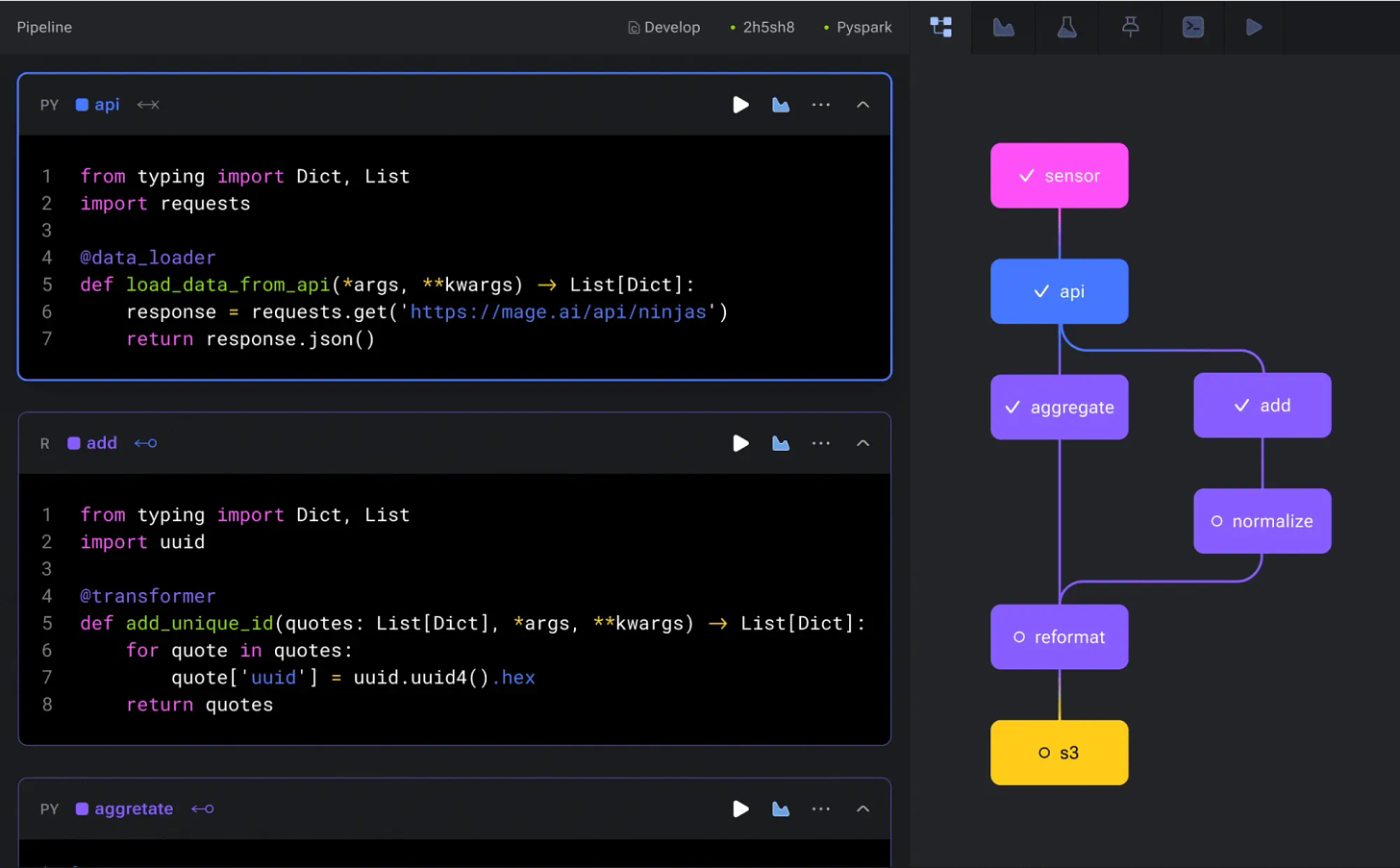

Mage is an all-in-one tool that lets you perform ETL, integrate multiple data sources, and orchestrate and monitor pipelines, with built-in native notebooks for updating code on the fly in an intuitive user interface.

Mage claims to be a modern replacement for Apache Airflow.

This article uses Mage terminology; for reference, please go through this document.

Mage Features

Let's look at some of the unique Mage features that I observed and consider worth mentioning. As mentioned, I am not going to dive deep into each component (how to set it up, etc.); the Mage docs are pretty good for that.

Setup

Mage is very easy to set up and get running. With just a few commands you can start working with it, either as a Python package or through Docker. Even the cloud and multi-cloud setups are not that hard; they just require some initial investment on the infrastructure side, e.g. Kubernetes.

A multi-cloud deployment setup can be vital for companies where working with data locally is restricted for security and compliance reasons.

Usability

The Mage UI is intuitive from the start: it is very easy to navigate, use and understand. I did not really have to search or go through the documentation to find my way around, though part of the reason could be my general experience with such tools.

Config Driven

In a development environment Mage can be used through the UI, but for production it is better suited to being used through its YAML-based configs. This depends on many factors such as use cases and team size, discussed in detail in a later section. These configs are super neat: components can be reused and made part of a new pipeline.
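To make the config-driven idea a bit more concrete, here is a minimal Python sketch of how a pipeline definition that lists blocks and their upstream dependencies can be turned into a run order, and how a second pipeline can reuse a block. The dictionaries only loosely mirror the shape of a YAML pipeline config; the pipeline names, block names and the `execution_order` helper are all hypothetical, and this is not Mage's own scheduler.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline definition, loosely modelled on a YAML pipeline config.
# Each block lists the blocks it depends on.
pipeline = {
    "name": "orders_daily",
    "blocks": [
        {"name": "load_orders", "type": "data_loader", "upstream_blocks": []},
        {"name": "clean_orders", "type": "transformer", "upstream_blocks": ["load_orders"]},
        {"name": "export_orders", "type": "data_exporter", "upstream_blocks": ["clean_orders"]},
    ],
}

# A second (hypothetical) pipeline reuses the same loader block definition.
reporting = {
    "name": "orders_report",
    "blocks": [
        pipeline["blocks"][0],
        {"name": "summarise_orders", "type": "transformer", "upstream_blocks": ["load_orders"]},
    ],
}


def execution_order(config):
    """Return block names in an order that respects upstream dependencies."""
    graph = {block["name"]: set(block["upstream_blocks"]) for block in config["blocks"]}
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(execution_order(pipeline))
    print(execution_order(reporting))
```

The point of the sketch is only that the pipeline is data: adding, removing or reusing a block is a change to the config, not to the code of the block itself.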

Modularity

Mage is super modular, meaning each and every block you use is portable and can be reused elsewhere. Some common block types are loader, transformer and exporter.
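As a rough illustration of the loader, transformer and exporter split, the sketch below chains three small functions. The decorators here are local stand-ins so the example runs without Mage installed; in a real project Mage supplies them (its generated templates import them from `mage_ai.data_preparation.decorators`). The function names, the sample rows and the list used as a sink are assumptions made up for this example.

```python
# Local stand-ins so the sketch runs without Mage installed.
def data_loader(func):
    return func


def transformer(func):
    return func


def data_exporter(func):
    return func


@data_loader
def load_orders():
    """Loader block: return raw rows (hard-coded here instead of a real source)."""
    return [
        {"order_id": 1, "amount": "10.50"},
        {"order_id": 2, "amount": "4.25"},
        {"order_id": 3, "amount": None},
    ]


@transformer
def clean_orders(rows):
    """Transformer block: drop rows without an amount and cast the rest to float."""
    return [
        {**row, "amount": float(row["amount"])}
        for row in rows
        if row["amount"] is not None
    ]


@data_exporter
def export_orders(rows, sink):
    """Exporter block: append rows to a sink (a list standing in for a table)."""
    sink.extend(rows)
    return len(rows)


if __name__ == "__main__":
    warehouse = []
    written = export_orders(clean_orders(load_orders()), warehouse)
    print(written, warehouse)
```

Because each block only depends on its input and output, `clean_orders` could be dropped into another pipeline with a different loader without any change.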

Templated

Mage uses a templated approach that hides away a lot of complexity and gives the end user easy-to-use templated code, e.g. for loading data from S3.

Testing

Built-in testing makes life easy: your tests sit alongside the logic, meaning you can run them right away. This gives confidence, and no separate test framework or manual testing is required.

Visualization

Think of Mage as a Jupyter Notebook where you can run the code piece by piece and get the results, meaning you can see the data and also visualize it in the form of graphs and charts.

Integrations

Mage supports seamless integrations with many tools like DBT, Great Expectations, etc. This is very powerful, and no manual or custom setup is required. You may also like: orchestrate DBT with Airflow.

There is a lot more that Mage has to offer, such as authentication, logging and monitoring, but I will not cover those topics in this article.

Mage's modular, portable and templated blocks are the real deal.

Mage Scalability

As a Data Engineer, one of my biggest concerns was how to use Mage in a scalable way. I had some questions and concerns, so I pinged Tommy (Mage CEO) to understand more about how Mage handles scalability.

The following points relate to scalability in one way or another:

Mage supports multiple ways of running DBT: a single model, or multiple models with upstream or downstream dependencies, using CLI commands.

Mage has multiple ways to set up the deployment; a simple way is to just connect the git `main` branch with the production instance, and it automatically syncs up.

Mage supports making the production instance read-only using an environment variable.

Mage runs everything in the same container by default; they support running each block in its own pod using the `k8 executor` to achieve scalability.

Mage uses local disk by default for storing intermediate results; they support external storage systems like S3 for scalability.

Mage does not support Python dependencies at the pipeline level; having dependencies per data block would also be a big plus. The current limitation could cause dependency issues at scale.

Mage does not seem to support running a dockerized application as one of the data blocks at the moment.

Mage's Spark setup seems a bit disconnected and requires some expertise, especially for production Spark operations like spinning up EMR or running Spark on K8.

Mage does not support Scala Spark 😞.

The Mage PySpark executor runs the whole pipeline in a single EMR cluster rather than running each data block in its own Spark cluster; this could cause problems for very compute-intensive parallel workflows.
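On the first point in the list above, running a DBT model together with its upstream or downstream dependencies: in DBT's own CLI this is expressed with the `+` graph operator in `--select`. The sketch below only builds such a command as an argument list; `orders` is a hypothetical model name, the two helper functions are made up for this example, and how Mage itself passes these arguments through is not shown here.

```python
def dbt_selector(model, upstream=False, downstream=False):
    """Build a dbt node selector.

    A leading '+' also selects the model's ancestors (upstream models),
    a trailing '+' also selects its descendants (downstream models).
    """
    prefix = "+" if upstream else ""
    suffix = "+" if downstream else ""
    return f"{prefix}{model}{suffix}"


def dbt_run_command(model, upstream=False, downstream=False):
    """Return the argument list for a `dbt run` limited to the selected models."""
    return ["dbt", "run", "--select", dbt_selector(model, upstream, downstream)]


if __name__ == "__main__":
    # "orders" is a hypothetical model name.
    print(" ".join(dbt_run_command("orders")))
    print(" ".join(dbt_run_command("orders", upstream=True)))
    print(" ".join(dbt_run_command("orders", downstream=True)))
    print(" ".join(dbt_run_command("orders", upstream=True, downstream=True)))
```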

Some of the above items can be found on their roadmap. The Mage team can also provide technical support if needed.

These scalability issues may not be a concern for data teams that mostly follow the ELT (extract -> load -> transform) approach, which leans on the power of data warehouses like Snowflake.

My Opinion on Mage

As a Data Engineer, I have used quite a wide range of tools to solve the problems that Mage is trying to solve. I have experience with Fivetran, AWS Data Pipeline, AWS Step Functions, and open source tools like Luigi and Airflow to orchestrate pipelines. Most of my recent and solid experience is with Airflow, and so far it is going well.

Let's talk about Mage. I tested Mage locally and also used their demo to try out a few of the functionalities I was interested in, and at first look it is great. The components and features I was interested in, as discussed in the earlier section, were: orchestrator, integrations (Spark, DBT, Great Expectations), testing, setup, scalability, etc.

At a very high level, Mage has all the functionality that other tools out there provide, but in a much easier and more intuitive way. However, the usage depends mostly on the use case.

I think of Mage as a combo of Airbyte + Airflow + Jupyter Notebooks.

I see Mage being used in the following ways:

Companies using Mage to enhance and automate experimental workflows, giving data teams the power to accomplish their tasks efficiently.

Companies relying completely on Mage, including in production, at a smaller scale, as it is easy to set up and learn.

Companies using Mage for seamless integration with the modern data stack (DBT, Snowflake, Great Expectations).

I think Mage is a perfect fit for new or smaller data teams who are looking to spin things up super quickly with a lower technical burden. It also blends nicely into the modern data stack with its powerful integrations. However, for large-scale, data-centric companies it might not be mature enough at the moment.

Let me share some thoughts based on my experience:

At my previous company, KHealth, I would have opted for Mage over Airflow. I had to set up Airflow and a custom DBT operator to run jobs on top of BigQuery (GCP), which was quite some work, especially as the lone engineer on the team. The hardest part was onboarding the Data Scientists and Analysts onto those tools, because operating Airflow efficiently requires a technical understanding of how it works. With Mage it would have been much easier in terms of learning curve and usability, as it combines everything in one nice interface.

Mage can really change the game for Data Scientists' and Analysts' workflows.

At my current company, Socure, Mage cannot really replace 100% of our Airflow use cases. We have a platform that supports Spark-based applications (Scala and Python) running on top of Kubernetes, and in my opinion Spark-based production workflows are not yet mature in Mage. Second, we have a lot of custom internal and external software integrations within the platform, which would be very challenging to achieve with Mage.

However, one ideal case where Mage can help is automating the workflows of our Data Science teams. We rely heavily on multi-cloud Jupyter Notebooks for experimentation workflows, which are not easy to integrate with Airflow. Here Mage can help by running experiments on EMR using Spark and by orchestrating experimentation pipelines.

For large-scale data companies, Mage can be a really good supplemental tool alongside Airflow.

Conclusion

Mage is a great tool with great features, but it will be some journey to reach the level where everyone can really vouch for Mage instead of Airflow. Currently, I think it has a lot of value, and it really depends on the company's use case whether it fits into the tech stack as a supplemental tool or as a complete replacement for Airflow.

New and small-scale data companies should consider Mage, as most of them have lean teams and less technical expertise or bandwidth to deal with current technical tools like Airflow. With Airflow you need to design your modules and pipelines yourself and apply software engineering practices like modularity and testability, whereas in Mage all of that is largely built in.

I am going to look further into Mage to understand it better, and I hope to share this in our company tech talk if it could be the right fit for the data teams.

Feedback for Mage team:

Keep up the good work. I do think there is a lot of value in using Mage, and that value will increase as it matures.