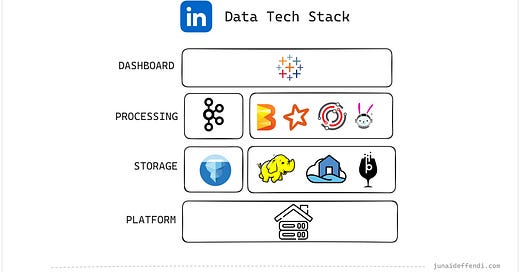

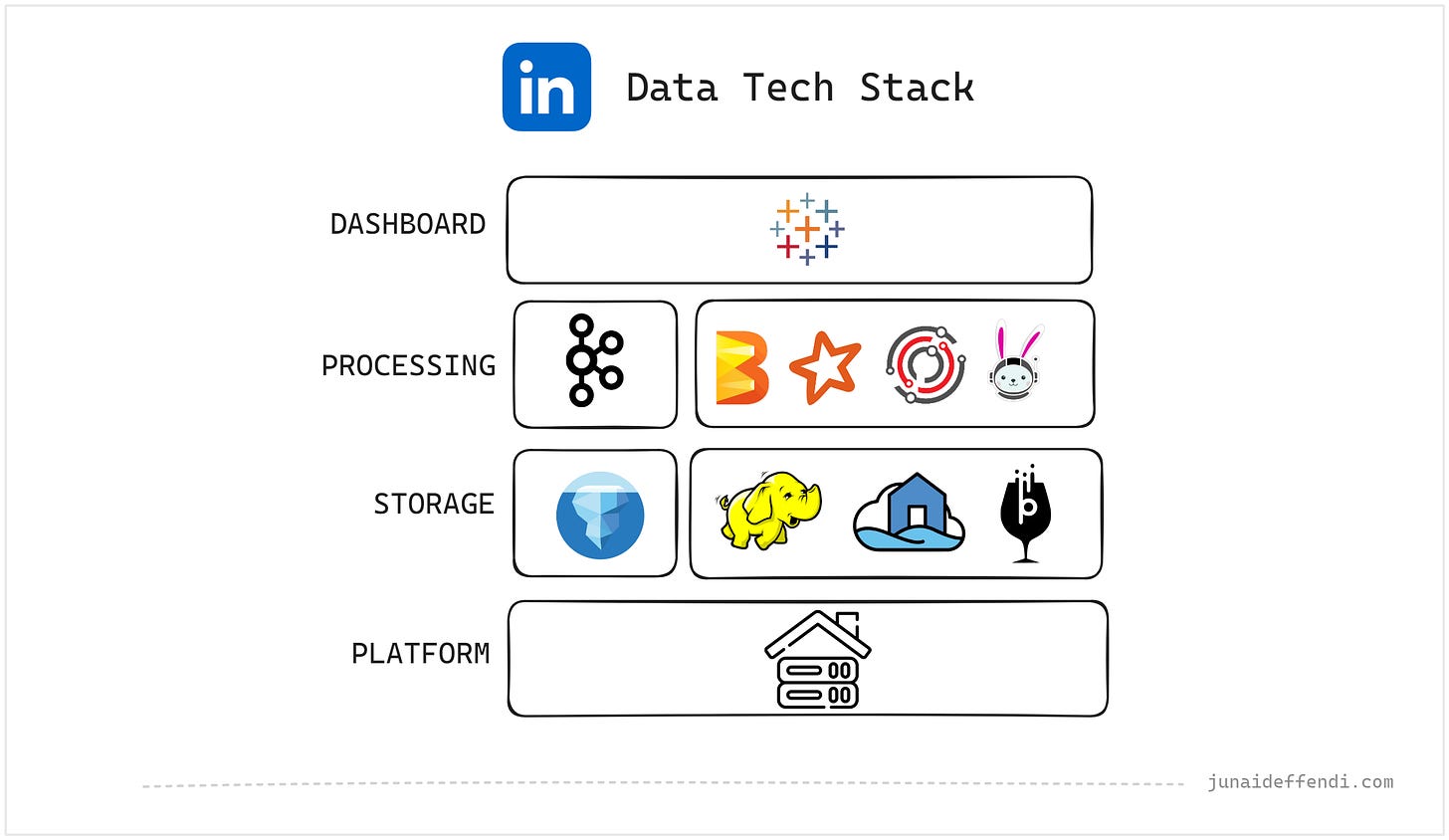

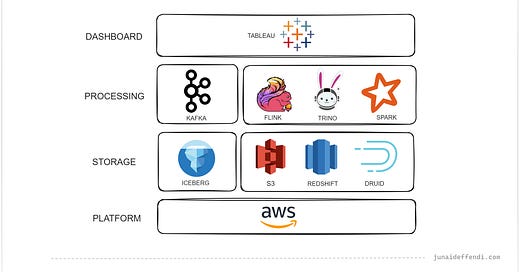

LinkedIn Data Tech Stack

Learn how LinkedIn handles trillions of events per day from a billion customers using Apache Beam and more.

LinkedIn is a leading tech company leveraging advanced tools for large-scale data processing. Over the years, their engineering teams have made significant contributions by open-sourcing several technologies, including well-known ones like Kafka, Pinot and Samza. Today, we'll explore their data tech stack and get an overview of how they handle massive data processing.

Content is based on multiple sources, including the LinkedIn blog, open source project websites and news articles; you will find links as you read through the article.

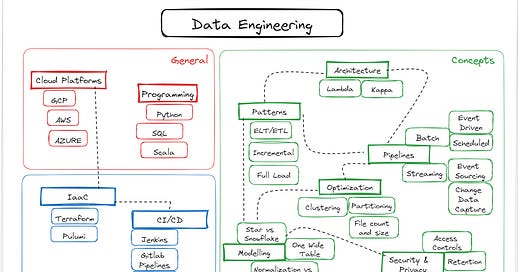

Platform

On Premise

LinkedIn has maintained its own data centers since its inception, with data centers across the US and one in Singapore.

LinkedIn's initial plan after being acquired by Microsoft was to move to Azure; however, the plan was paused in early 2022, as per this source. Since the migration was paused after a few years of work, it is likely that some services already run in Azure.

Storage

HDFS

HDFS has been a core part of LinkedIn's data infrastructure, holding petabytes of data on its Hadoop clusters. LinkedIn has built a lot of open source abstractions to deal with the challenges of massive scale; read their article about storage infrastructure.

Iceberg

LinkedIn uses the Iceberg open table format to bring ACID capabilities to the data lake, along with other benefits such as time travel and data compaction.
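To make time travel and compaction more concrete, here is a minimal toy model in plain Python. It is not the Iceberg API: `ToyTable`, `Snapshot` and their methods are hypothetical names invented for illustration. The only idea it borrows is that every commit produces a new immutable snapshot listing the data files that make up the table, so older snapshots stay readable and compaction can rewrite files without changing the rows.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Snapshot:
    """An immutable view of the table: an id plus the data files it points to."""
    snapshot_id: int
    data_files: tuple  # each "file" is a tuple of rows (toy stand-in for a Parquet file)


@dataclass
class ToyTable:
    """Hypothetical toy table, NOT Iceberg: commits only ever add new snapshots."""
    snapshots: list = field(default_factory=lambda: [Snapshot(0, ())])

    def _commit(self, data_files):
        # A commit is all-or-nothing: readers see either the old or the new snapshot.
        new = Snapshot(len(self.snapshots), tuple(data_files))
        self.snapshots.append(new)
        return new.snapshot_id

    def append(self, rows):
        # Write one new data file and reference it alongside the existing ones.
        current = self.snapshots[-1]
        return self._commit(current.data_files + (tuple(rows),))

    def compact(self):
        # Rewrite many small files into one; the rows are unchanged.
        merged = tuple(row for f in self.snapshots[-1].data_files for row in f)
        return self._commit((merged,) if merged else ())

    def scan(self, snapshot_id=None):
        # Time travel: read the table as of any earlier snapshot id.
        snap = self.snapshots[-1] if snapshot_id is None else self.snapshots[snapshot_id]
        return [row for f in snap.data_files for row in f]


table = ToyTable()
s1 = table.append([("alice", 1), ("bob", 2)])
s2 = table.append([("carol", 3)])
s3 = table.compact()

print(table.scan(s1))  # rows as of the first commit
print(table.scan())    # rows in the latest snapshot
```

In this sketch, reading `s1` after later commits still returns only the first two rows, and the compacted snapshot `s3` holds the same rows as `s2` in a single file. Real Iceberg tracks snapshots through metadata and manifest files on storage rather than in memory.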

OpenHouse

OpenHouse is another recent open source initiative from LinkedIn's data infrastructure teams. It is a control plane for managing Iceberg tables and includes a RESTful declarative catalog and a suite of data services for table maintenance.

Pinot

For low-latency, real-time analytics, LinkedIn uses Pinot, which was created at LinkedIn and later became an Apache project.

Processing

Kafka

Kafka is another popular and successful tool built at LinkedIn, which uses it to process trillions of events every day.

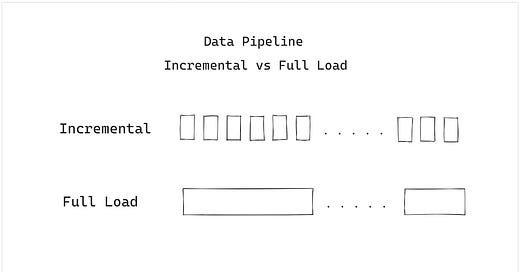

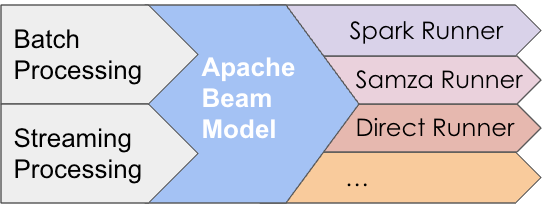

Beam

LinkedIn adopted Apache Beam in 2023 to unify its batch and streaming needs. Beam pipelines can run on distributed engines such as Spark, Samza and Flink.
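The idea of unifying batch and streaming can be sketched in plain Python. This is not the Beam API and not LinkedIn's pipeline: `count_views`, `run_batch` and `run_streaming` are hypothetical names, and the sample events are made up. The sketch only shows the principle that the business logic is written once and reused over a bounded dataset and over an unbounded stream split into fixed time windows.

```python
from collections import Counter
from itertools import groupby


def count_views(events):
    """Business logic written once: count events per member."""
    return dict(Counter(member for member, _ts in events))


def run_batch(events):
    """Bounded input: process the whole dataset in one pass."""
    return count_views(events)


def run_streaming(event_stream, window_seconds):
    """Unbounded input: apply the same logic to each fixed time window.

    Simplifying assumption: events arrive ordered by timestamp,
    which real streams do not guarantee.
    """
    windows = groupby(event_stream, key=lambda e: e[1] // window_seconds)
    for window_index, group in windows:
        yield window_index * window_seconds, count_views(group)


# Made-up (member, timestamp_in_seconds) events for illustration.
events = [("alice", 0), ("bob", 5), ("alice", 12), ("alice", 61), ("bob", 65)]

batch_result = run_batch(events)
stream_result = list(run_streaming(iter(events), window_seconds=60))

print(batch_result)
print(stream_result)
```

In Beam itself, the shared logic would be expressed as transforms over collections, and a runner such as Spark, Samza or Flink would handle distribution, windowing and out-of-order events.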

📖 Read article: Revolutionizing Real-Time Streaming Processing

Samza

Samza was created at LinkedIn and open sourced in 2013. It has long been used for streaming workflows, and more recently it is used in conjunction with the Apache Beam model, as described above.

Spark

LinkedIn uses Spark for batch processing workloads that deal with petabyte-scale data. It has also customized Spark's shuffle service with an in-house tool called Magnet.

Trino

Trino is used to query data sitting in HDFS using SQL. It serves two purposes: quick ad hoc analysis and queries that run as part of batch pipelines.

Dashboard

Tableau

LinkedIn uses Tableau to empower its analytics and sales teams.

Considering their wide range of in-house and open source tools, they may also have their own dashboarding tool alongside Tableau.

📖Recommended Reading: LinkedIn Data Infrastructure

💬 LinkedIn is pretty big and is most likely using a lot of other technologies that I could not mention. If you think I missed important ones, feel free to comment below.