Introducing Pooling in Convolutional Neural Networks

In the previous tutorial, we started with Convolutional Neural Networks. In this one, I will share the concept of pooling and how it works to improve a CNN. It also includes an upgraded version of the previous Python code with a maximum pooling function.

Previously we used stride for spatial reduction, but that kind of reduction ignores a lot of useful information in the patch. Pooling was introduced to overcome this. There are two common types of pooling, average and maximum, and both work in a very simple manner, as their names suggest: one takes the average of the neighboring pixels, while the other takes the maximum.
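
To make the two operations concrete, here is a minimal pure-Python sketch of pooling over a small image. The helper name `pool2d`, its parameters, and the sample 4x4 image are made up for illustration, and the sketch assumes a square window with no padding; it is not the TensorFlow implementation.

```python
def pool2d(image, size, stride, mode="max"):
    """Pool a 2D list of numbers with a square window and no padding."""
    rows, cols = len(image), len(image[0])
    # Pick the reduction: maximum of the window, or its arithmetic mean.
    reduce_fn = max if mode == "max" else (lambda vals: sum(vals) / len(vals))
    output = []
    for r in range(0, rows - size + 1, stride):
        out_row = []
        for c in range(0, cols - size + 1, stride):
            # Collect every pixel covered by the window whose top-left is (r, c).
            window = [image[r + i][c + j] for i in range(size) for j in range(size)]
            out_row.append(reduce_fn(window))
        output.append(out_row)
    return output


# Hypothetical 4x4 image, pooled with a 2x2 window and stride 2.
image = [
    [1, 3, 2, 4],
    [5, 7, 6, 8],
    [9, 2, 1, 0],
    [3, 4, 5, 6],
]
max_pooled = pool2d(image, size=2, stride=2, mode="max")
avg_pooled = pool2d(image, size=2, stride=2, mode="avg")
print(max_pooled)  # [[7, 8], [9, 6]]
print(avg_pooled)  # [[4.0, 5.0], [4.5, 3.0]]
```

Every pixel in a window contributes to the average, and every pixel is at least considered for the maximum, which is the difference from simply skipping pixels with a larger stride.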

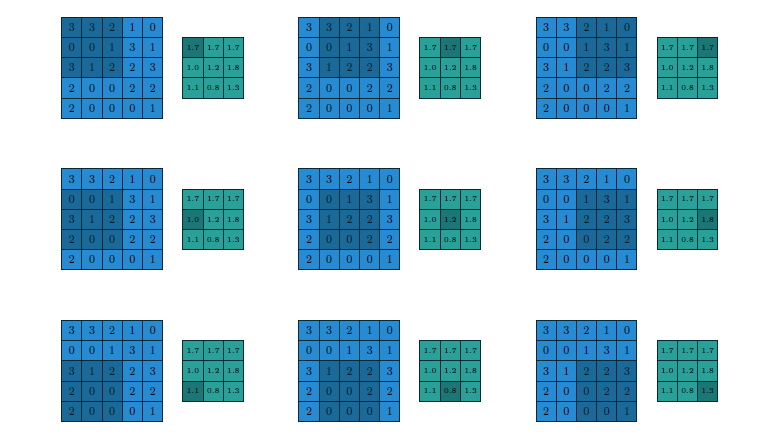

Below is the figure showing how average pooling works.

The image above is 5x5 pixels, and the patch is 3x3 with a stride of 1. You can see how moving the patch across the image converts it into a smaller output.
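
As a quick check of the sizes, the sketch below lists every position a 3x3 patch can take on a 5x5 image with stride 1. It assumes the patch must fit entirely inside the image (no padding), and the helper name `patch_positions` is hypothetical.

```python
def patch_positions(image_size, patch_size, stride):
    """Return the top-left (row, col) of every patch that fits inside the image."""
    starts = range(0, image_size - patch_size + 1, stride)
    return [(r, c) for r in starts for c in starts]


positions = patch_positions(image_size=5, patch_size=3, stride=1)
# Number of distinct starting rows equals the side length of the output.
output_side = len({r for r, _ in positions})
print(len(positions))  # 9 patches in total
print(output_side)     # 3, so the output is 3x3
```

Each patch position produces one output value, so nine positions give a 3x3 output.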

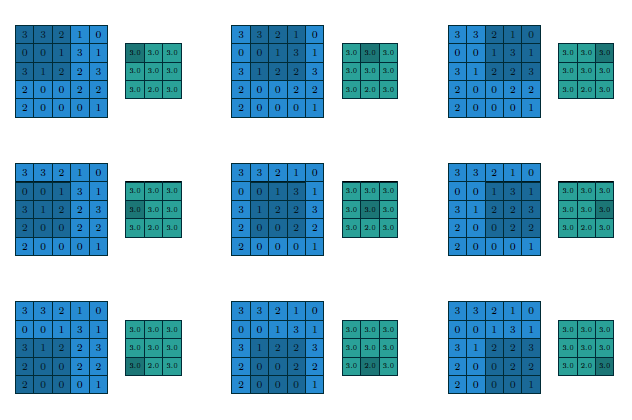

Below is maximum pooling; you can easily see how it generates the output.

Furthermore, there are two more complicated concepts, 1x1 convolutions and inception modules, that you may like to explore. There are also two types of padding: valid and same.
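
The practical difference between the two padding types is the output size. The sketch below uses the size rules that TensorFlow documents for its `'VALID'` and `'SAME'` options; the function name `output_size` is my own, and it only computes sizes along one dimension, it does not pad anything.

```python
import math


def output_size(input_size, window, stride, padding):
    """Output length along one dimension for 'VALID' or 'SAME' padding."""
    if padding == "VALID":
        # No padding: only windows that fit fully inside the input are used.
        return math.ceil((input_size - window + 1) / stride)
    if padding == "SAME":
        # Input is padded as needed, so the size depends only on the stride.
        return math.ceil(input_size / stride)
    raise ValueError("padding must be 'VALID' or 'SAME'")


print(output_size(5, 3, 1, "VALID"))  # 3
print(output_size(5, 3, 1, "SAME"))   # 5
```

With a stride of 1, same padding keeps the output the same size as the input, while valid padding shrinks it.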

To learn more about convolutional neural networks, I would recommend downloading the ultimate guide, which also shows the advanced mathematical modeling of CNNs.

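    # Assumes tf (TensorFlow) and the layer weights and biases are defined
    # as in the previous tutorial's code.
    def model(data):
        # First convolution: stride 1 with 'SAME' padding keeps the spatial size.
        conv = tf.nn.conv2d(data, layer1_weights, [1, 1, 1, 1], padding='SAME')
        hidden = tf.nn.relu(conv + layer1_biases)
        # 2x2 max pooling with stride 2 reduces the feature map height and width.
        hidden = tf.nn.max_pool(hidden, [1, 2, 2, 1], [1, 2, 2, 1], padding='SAME')
        # Second convolution followed by the same pooling step.
        conv = tf.nn.conv2d(hidden, layer2_weights, [1, 1, 1, 1], padding='SAME')
        hidden = tf.nn.relu(conv + layer2_biases)
        hidden = tf.nn.max_pool(hidden, [1, 2, 2, 1], [1, 2, 2, 1], padding='SAME')
        # Flatten the feature maps for the fully connected layers.
        shape = hidden.get_shape().as_list()
        reshape = tf.reshape(hidden, [shape[0], shape[1] * shape[2] * shape[3]])
        hidden = tf.nn.relu(tf.matmul(reshape, layer3_weights) + layer3_biases)
        return tf.matmul(hidden, layer4_weights) + layer4_biases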

Here you can see that we have decreased the stride of conv2d to 1 because we do not want any information to be ignored, while the max_pool function uses a 2x2 stride, which reduces the feature map size (spatial reduction). You can also try it with average pooling.
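
To see where the spatial reduction happens, the sketch below traces the feature map shape through the two convolution and pooling stages using the `'SAME'` size rule (output = ceil(input / stride)). The 28x28 input and the depth of 16 are assumptions chosen for illustration, as is the simplification that both convolutions use the same depth; substitute the values from your own code.

```python
import math


def same_out(size, stride):
    """Output size along one dimension with 'SAME' padding."""
    return math.ceil(size / stride)


def trace_model_shapes(image_size, depth):
    """Follow (height, width, depth) through conv -> pool -> conv -> pool."""
    shapes = []
    size = image_size
    for layer in (1, 2):
        size = same_out(size, 1)  # conv2d with stride 1 keeps the size
        shapes.append((f"conv{layer}", size, size, depth))
        size = same_out(size, 2)  # max_pool with stride 2 halves it
        shapes.append((f"pool{layer}", size, size, depth))
    flattened = size * size * depth  # input width of the first dense layer
    return shapes, flattened


# Hypothetical values: 28x28 images and 16 feature maps per convolution.
shapes, flattened = trace_model_shapes(image_size=28, depth=16)
for name, h, w, d in shapes:
    print(name, (h, w, d))
print("flattened:", flattened)
```

Under these assumptions only the pooling steps shrink the feature maps, which is why the first dimension of `layer3_weights` has to match the flattened size after the second pooling step.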

You might see a slight increase in accuracy after pooling, but that is not our target here. One more thing to add is that removing the ReLU from the convolutional layers also increased the accuracy in this scenario: I saw an increase from 90.8% to 92.2% when I removed the two ReLU functions.