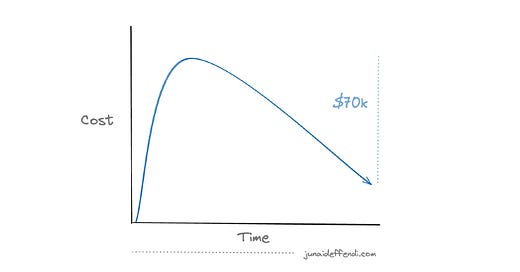

How I saved $70k a month in BigQuery

Learn the simple yet powerful optimization techniques that helped me reduce BigQuery spend by $70,000 a month.

When data engineering projects scale, optimization comes into play and becomes a challenging thing to solve. In this article, I will focus on the cost optimization part of one of the Data Engineering Pipeline that I worked as a sole Engineer.

💡For projects that are expected to scale in near future, cost should always be kept in mind from the initial design phases and could be implemented later.

Background

A little background, it was GCP hosted data pipelines leveraging Dataflow, BigQuery, Cloud Composer (Airflow), DBT for doing ELT on the data coming from streaming source PubSub. Read more about Event Driven Data Engineering.

There were couple of pipelines, some legacy, and some newly built by myself, e.g. one pipeline was producing transformed data in under 15 minutes for the end users to unlock some business use cases, we decided to revisit the cost later as development speed was preferred.

Some common items that helped me with the cost optimization:

Monitoring: I put together a cost monitoring dashboard with alerting right after we wrapped up the development of pipelines. This allowed us to see the cost patterns overtime.

Audit Logs: These logs were used to find the common theme around queries that I needed to find where the high spend is coming from.

Optimization Techniques

Following are the steps that I took to reduce the cost while some of the techniques improved performance as well:

Revisiting the Pipeline Schedules

Revisiting the actual requirement was a key, most of the time by default, the requirements are lets keep it in sync with the source without thinking of the cost. We started of with low latency 15 minutes pipeline but we quickly moved off the schedule as it was not really a clear use case for the business.

I split the pipeline based on load and updated the schedule.

Pipelines that were compute heavy were moved to daily schedule.

Pipelines that were light weight were moved to hourly than later to 4 hour schedule.

This was one of the biggest cost saving reason, we never needed that kind of latency for the end users or dashboards.

💡In my experience, real time or near real time genuine use case is very rare, most batch processes works fine.

Optimizing the Tables

Since when I built the pipeline, the data size was too small and I intentionally kept the optimization for later when we started to receive more. After several months the cost started to increase because table size grew close to a billion rows, and lot of queries were not able to scan data efficiently.

Two action items which I performed:

Flatten Data Model: Since data was coming from an event sourcing system, we were receiving a big blob of JSON and we kept as is initially, but after analyzing the downstream usage, we found out redundancies. It gave us the opportunity to produce a new flattened data model for highly consumed nested fields.

Partitioning & Clustering: I followed the best practices provided by BigQuery docs to implement column partitioning and clustering, for example high cardinality columns that were heavily used in filter were made part of clustering like `user_id` and low cardinality columns like `date` was added as partition. This speed up the queries.

Migrating Pipelines to Incremental

Some of the legacy pipelines were doing a full load, we moved to incremental approach. I kept the increments in sync with pipeline schedule with an overlap to handle late arriving data, while the duplicates were handled via the `UpSerts`.

Leveraging the Materialized Views

Audit logs helped in identifying the common adhoc queries among several users, I took the opportunity to move them into central pipeline and create pre computed views on top so users can access data quickly while we save cost.

💡Views are great abstractions on top of the table, makes it easy to change without touching the core table.

Precomputing Dashboard Queries

We were using Looker and most of the calculations were On Demand, we moved some of the high compute and non critical dashboards queries and built new pipelines to pre compute data which improved the dashboard performance. Users were fine with a bit stale results but it was quicker and cost efficient.

Training the Users

Lot of Data Analysts and Scientists were querying the tables and few things that I noticed after leveraging the audit logs were, each and every query had a room for improvement from the user side, e.g. avoiding the `select *`, using filters properly, limiting results, running and testing on subsets, leveraging temp tables.

Setting up time to train and share best practices with the team created an opportunity for everyone to participate in the cost saving journey.

Table optimization, incremental pipelines and materialized views steps not only saved cost, but also improved the performance making end users happy.

Conclusion

These are very simple and considered best practices, most of the time they should be part of the design document of pipelines, in case you are working in fast paced environment and you decided to revisit later, thats fine too.

These techniques can be applied to most of the data warehouses in the market today.

Automated monitoring and alerting makes life easier, I would encourage to have this from the start.

If you enjoyed this, you may enjoy a similar content by

→ Optimizing Your Data Infrastructure Costs .

I wish I had this article 2.5 years ago when I first started working with BigQuery. It would've prevented me from learning things the hard way and would've saved the company tens of thousands of $$ 🙄😅

Thank you so much for the article :) I was just curious when you said: "most of the calculations were On Demand", so why did you decide to use the on-demand model most of the time? Is there any obstacle with the BigQuery enterprise pricing model for your use cases?