Designing GenAI Chatbot for Business Intelligence

Sharing few approaches from experience working on GenAI chatbot leveraging reporting tool as the data source.

I have been working on GenAI chatbot to answer different types of business metrics questions. The goal is to make it accessible for everyone to find information instead of digging into dashboards.

The approach leverages reporting tool (Tableau) instead of data warehouse to answer the questions:

Tableau is what users look at today

Tableau has the required filtered and aggregated data

Tableau can provide dashboard images and links in the response

⭐ Applicable to reporting tools that has an api interface.

There are couple of options to consider:

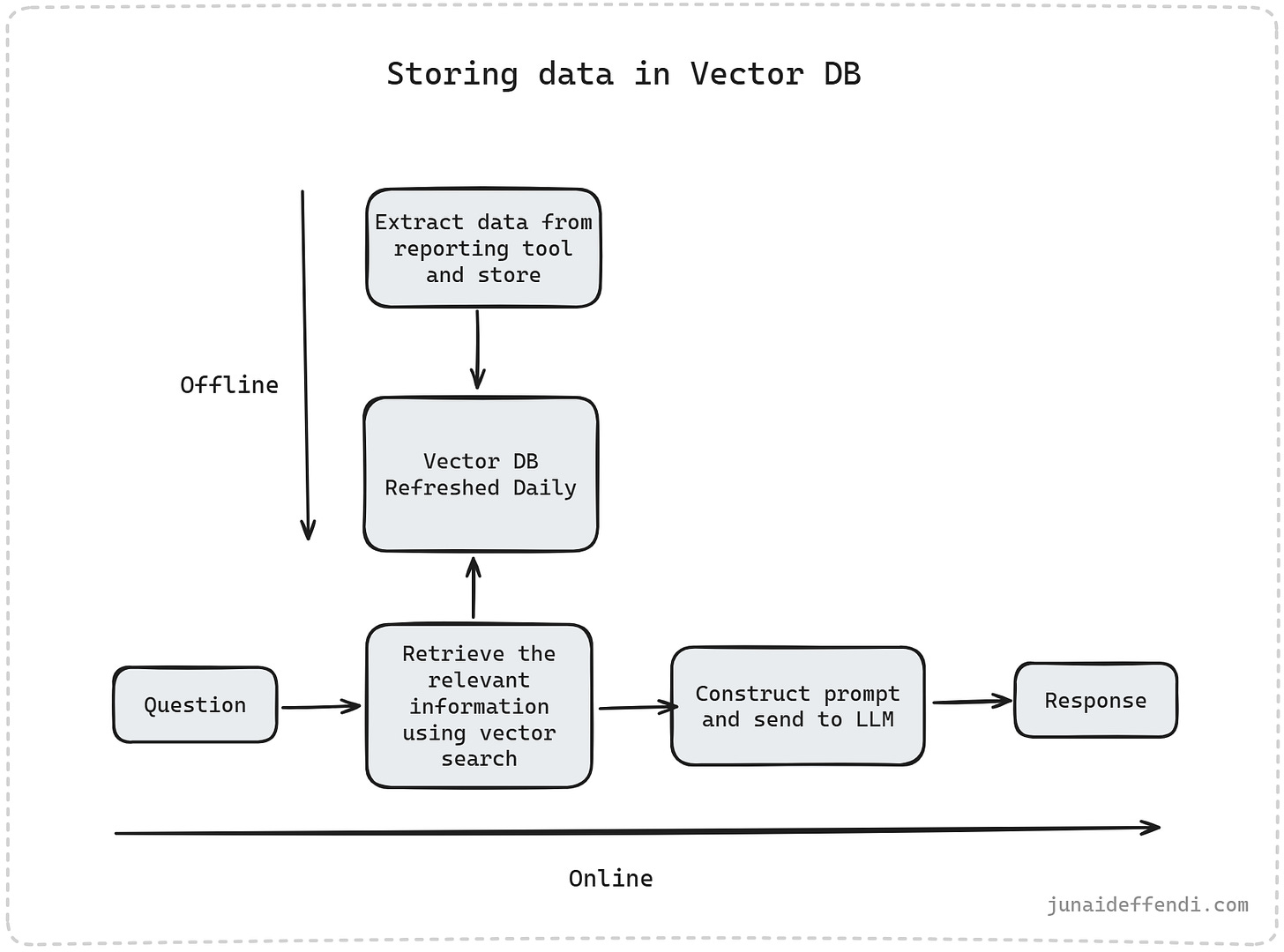

Storing data in Vector DB

This is also a static option as it builds Vector DB on periodic bases e.g. daily.

This option leverages the Vector DB by storing the information directly through an offline process.

When a question is submitted, it first find the relevant information from Vector DB then creates a prompt for LLM which then generates a response.

LLM might not produce accurate results depending on the quality of input data provided by the reporting tool.

💡We tried this approach, but the accuracy was suboptimal, primarily due to limitations of the Tableau API for VIEWS. It returns data as images, and the CSV option is limited to a few basic scenarios, leading to poor quality.

Building external data repository

This approach relies on LLM during both offline and online phases.

This is also a static option, updates on periodic basis e.g. daily.

During the offline process, it extracts data from api and store them in the repository while also using it to generate description through LLM which is stored in Vector DB.

When a question is submitted, it retrieves the list of relevant documents from Vector DB and submits those to LLM for a response.

This approach is simple yet powerful but could have limitations, e.g. the number of documents that can be submitted to LLM as part of prompt.

This approach requires exhaustive offline data extraction process to cover all scenarios, like get all data from all dashboards with all combination of filters.

💡We are currently using this approach which has been easiest with better accuracy although limited in terms of data coverage, as we extracted images just using default VIEWS.

We also configured it to return the Dashboard Image and Link in the response.

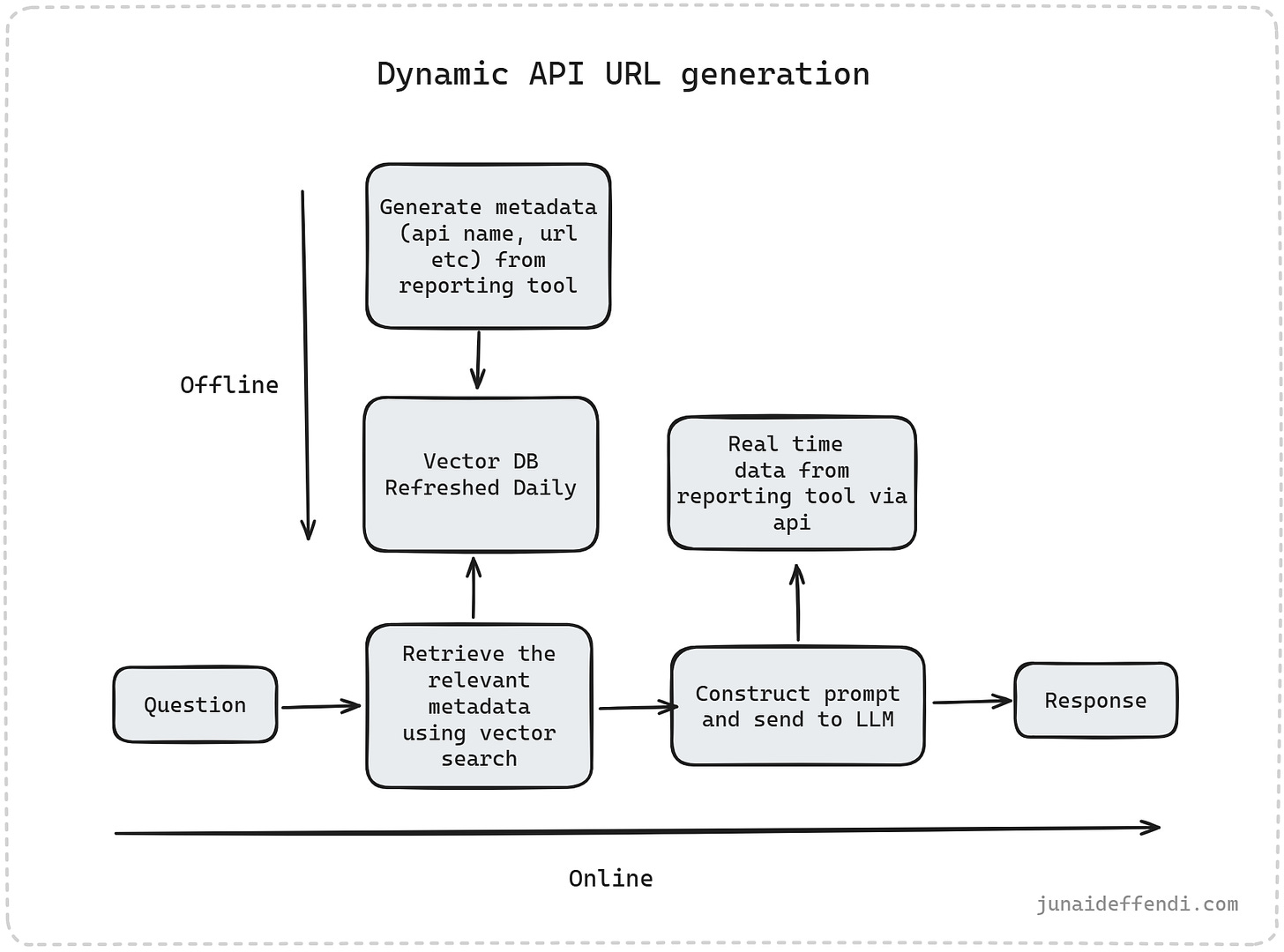

Dynamic API URL generation

This is real time approach which requires more advanced techniques.

First we build a RAG with all the relevant metadata information like existing API paths, endpoints and description in an offline process.

When a question is submitted, first it retrieves the metadata information to construct a prompt for LLM, e.g. a fully qualified url with parameters which is then used to fetch the data from reporting tool server.

Unlike the above two approaches, the offline process is much lightweight and simple due to its real time data extraction capability.

URL generated should be valid otherwise it would error out on the client side.

Since it requires real time client-server interaction, it could lead to network errors.

💡 We are yet to dive deep into this approach. It is complex considering error handling, api url generation accuracy, etc.

Real time is not required for this business metrics use case as the underlying data refreshes once a day.

However, if this works, it will improve data coverage and solve other limitations shared earlier.

⭐ We used latest Claude model by Anthropic via AWS Bedrock.

If you like to avoid the reporting tool route then other common option is to leverage data warehouse which will have similar options but involves more work e.g. access restriction, data preparation, metadata extraction etc. Data warehouse might be good for other use cases.

For dynamic SQL generation approach for the data warehouse, checkout Vanna AI.

💬 We are still very early and learning new things everyday, if you have suggestion or feedback please comment below.