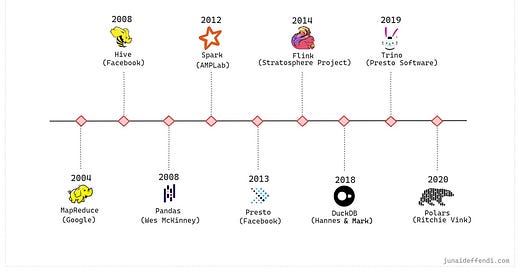

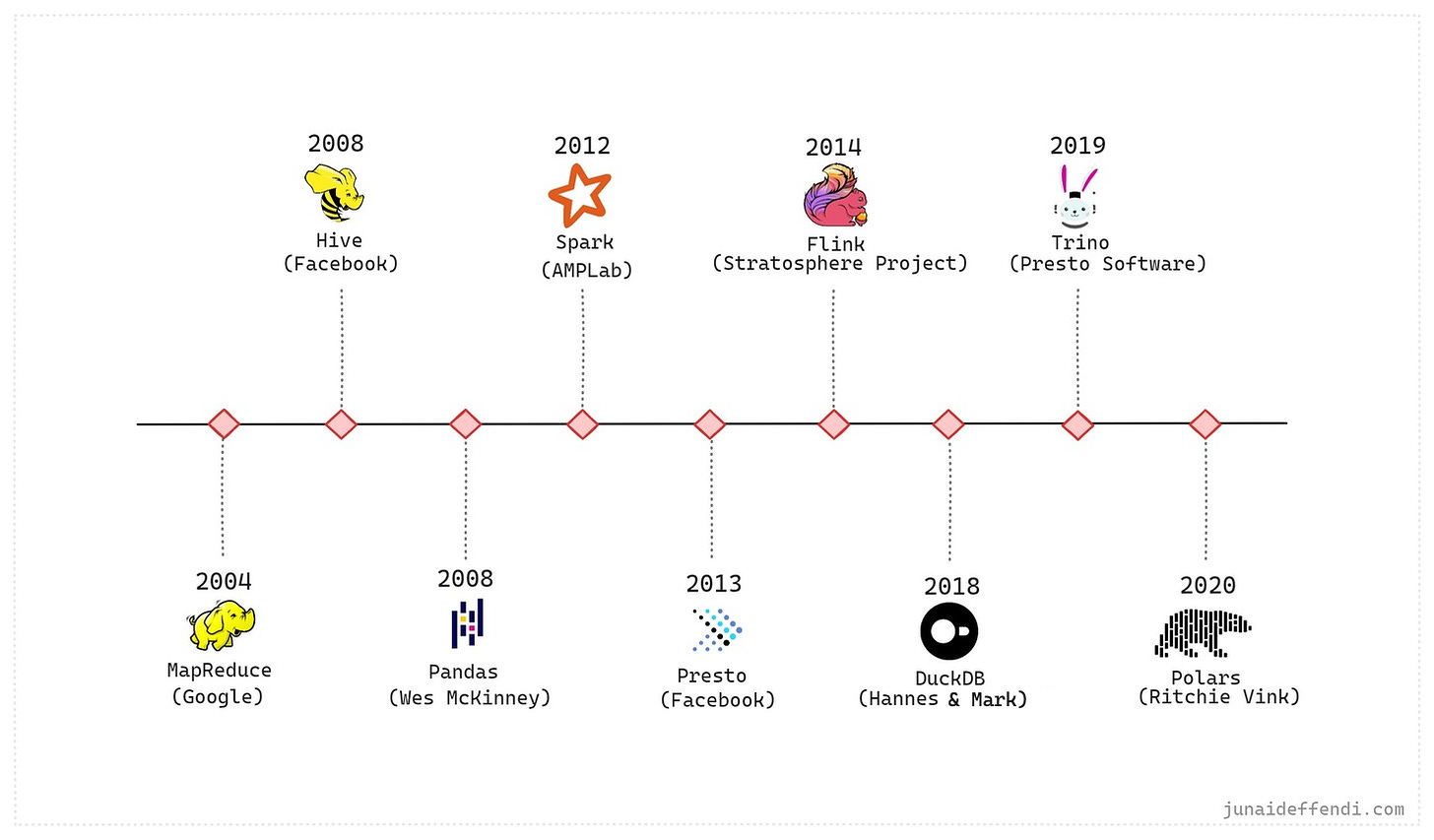

Data Processing in the 21st Century

Timeline of data processing technologies, from MapReduce to Polars.

Let's talk about open source data processing technologies of the 21st century, from distributed frameworks to single-node libraries, both mature and recent.

Before these technologies, the common approach to processing data was to glue together custom solutions, e.g. scripting and piping, as mentioned in the book Designing Data-Intensive Applications.

Now we have a lot of tools on our plate. Let's briefly discuss each one: what it solved and how it evolved. Some of the tools were part of the Hadoop ecosystem, some are direct replacements for others, while some are complementary to each other.

If I missed your favourite one, please let me know in the comments.

MapReduce

MapReduce was the first tool that built the foundation and changed the way we worked. It simplified a lot of the work that used to be done manually when processing large amounts of data, such as distributing work across machines and recovering from failures.

An open source implementation of MapReduce later became an integral part of the Hadoop ecosystem.

By 2014, Google, the original developer of MapReduce, was no longer using it.
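To make the programming model concrete, here is a minimal single-process sketch of the three phases (map, shuffle, reduce) using the classic word count example. The function names are my own for illustration and are not the Hadoop or Google API; a real framework would run these phases across many machines.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(document: str) -> Iterator[tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one input record."""
    for word in document.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Group emitted values by key, as the framework does between map and reduce."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Combine all values for one key into a single result."""
    return key, sum(values)


def word_count(documents: list[str]) -> dict[str, int]:
    """Run map, shuffle, and reduce in sequence on a list of documents."""
    mapped = (pair for doc in documents for pair in map_phase(doc))
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data is big", "data processing"]))
```

The developer only writes the map and reduce functions; the grouping step in the middle is what the framework takes care of.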

Hive

Hive was also the first of its kind: it allowed end users to run SQL-like queries (HiveQL) on data stored in a file system, e.g. HDFS. Before Hive, the only option was to write MapReduce jobs against a lower-level API, typically in Java, to process data in HDFS.

This was another game changer that helped Hadoop grow further.
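To show why a SQL layer was such a relief, here is a rough sketch of an aggregation expressed as a single declarative query. Note the assumptions: it uses `sqlite3` from the Python standard library purely as a stand-in, so this is not HiveQL and nothing here touches HDFS; the table, column names, and sample rows are made up for illustration.

```python
import sqlite3

# Hypothetical sample data: (user_name, page) view events.
PAGE_VIEWS = [
    ("alice", "/home"),
    ("bob", "/home"),
    ("alice", "/pricing"),
    ("carol", "/home"),
]


def views_per_page(rows):
    """Count views per page with one declarative SQL statement."""
    conn = sqlite3.connect(":memory:")  # in-memory stand-in, not Hive
    try:
        conn.execute("CREATE TABLE page_views (user_name TEXT, page TEXT)")
        conn.executemany("INSERT INTO page_views VALUES (?, ?)", rows)
        cursor = conn.execute(
            """
            SELECT page, COUNT(*) AS views
            FROM page_views
            GROUP BY page
            ORDER BY views DESC, page
            """
        )
        return cursor.fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for page, views in views_per_page(PAGE_VIEWS):
        print(page, views)
```

The `GROUP BY` says what result is wanted and leaves the how to the engine, which is roughly the shift Hive brought to users who previously had to hand-write jobs.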

Pandas

Pandas simplified data processing by introducing an easy-to-use library and bringing the concept of DataFrames to the Python world.

It mainly focuses on processing data that fits on a single machine; for big data, one has to either build a custom solution or use one of the distributed frameworks mentioned here.

It has played a great role so far in helping a lot of data teams, from Data Engineers to Data Scientists, with data manipulation.

Spark

Spark came as an upgrade to MapReduce, solving challenges that MapReduce faced and speeding up processing by leveraging in-memory computation.

Spark is a popular choice when dealing with massive amounts of data, and it is used by many companies of different sizes, e.g. Netflix, Airbnb, Socure.

Interested in Spark? Check out my Spark Article Collection.

Presto

Presto took the concept of querying files using SQL to the next level by building a better architecture and leveraging in-memory processing, replacing Hive for many workloads.

Presto is still used heavily in some form today, e.g. AWS Athena uses Presto as its query engine under the hood.

Flink

Flink was developed mainly to solve real-time processing needs, which were largely ignored by the other tools.

Real-time and stream processing gained popularity in the last decade, and many companies like LinkedIn and Uber now leverage Flink to process data.

Learn where Flink sits in the Pipeline Architecture.

DuckDB

DuckDB is a powerful single-node analytical database with enhanced SQL capabilities.

It has gained popularity recently, mainly because it is easy to set up and quick at processing small to medium-sized data on a single node.

It is a good alternative to Pandas; teams that are comfortable with SQL can easily consider it.

Trino

Trino is basically a fork of Presto, so it has similar powers, with additional features on top like enhanced SQL support, better performance optimizations, and new connectors.

It has been widely adopted recently by many tech companies, including Netflix and Stripe.

Polars

Polars is a Python library written in Rust. It came out in 2020 to solve the performance challenges faced by Pandas.

It is still new, but it is gaining popularity and the future looks bright.

A lot of comparisons have been made between the three single-node technologies: Pandas, Polars, and DuckDB.

💡 I always emphasize learning the technologies at least from the recent past. It is a great way to learn about tech evolution, which helps with future innovation.

The pattern seems to be that we are partially moving back to single node: single node → distributed → single node. E.g. DuckDB and Polars have been gaining popularity recently. This has become possible thanks to the massive memory that one can now get on a single machine, e.g. AWS offers instances with 24 TB of memory.

For further related reading, check out this Brief History Article; it covers related technologies that influenced the ones above.
