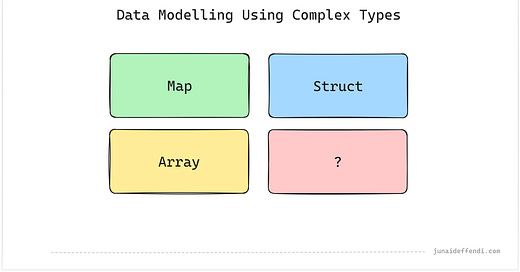

Data Modelling Using Complex Data Types

Complex data types like struct, array, map in modern warehouses are game changer, learn the useful aspects from a Data Engineer.

Today’s powerful Data Warehouses / Lakehouses provide tremendous useful features from time travel, acid properties to complex data types. With the recent rise of One Big Table data model, the complex types are gaining popularity as it makes easy to implement and maintain the data model.

Today, lets dive how One Big Table and Complex Data Types work in real world.

⭐ I have worked mostly on OBT Data Models for handling tens of Terrabytes and multi billion rows of data containing highly nested columns.

One Big Table

OBT is a data model that follows a denormalized approach, as the name suggest all data goes in one table, it can be either a flatten wide table or a nested table leveraging complex types. OBT and complex types goes well together, however complex types can also be used in other models.

Complex Types

Complex Data Types are nested types like Map, Array, Struct in Spark. Today, I will share from Spark perspective. There are many ways to store complex data in Spark, lets look into each one of them:

Map

A Map Type in Spark is like a Dict in Python or a HashMap in Java. Map is a strictly typed structure which requires the data type of the key value pair to be of a specific type across the column.

Map Column of type <String, String> named map_col:

{

key: value

}

{

"fname": "junaid"

}

-- querying a map

SELECT <column>.<key> FROM <TABLE>;

SELECT map_col.fname FROM <TABLE>;Struct

Struct is another popular complex data type that can store a strictly defined schema and types in one column.

Struct Column of schema/type <fname: String, lname: String> named struct_col:

{

"fname": "junaid"

"lname": "effendi"

}

-- querying a struct

SELECT <column>.<field> FROM <TABLE>

SELECT struct_col.fname FROM <TABLE>;Array

Array allows you to store multiple values within one cell of a table, saving space and number of rows in the table.

Array Column of type <String> named array_col:

[

"A",

"B"

]

-- exploding array into multiple rows

SELECT explode(<COLUMN>) FROM <TABLE>

SELECT explode(array_col) FROM <TABLE>JSON as String

Storing a JSON as string allows you to still query it while not worrying about the datatype or schema.

JSON Blob Column of type <String> named json_col:

'{

"fname": "junaid"

"lname": "effendi"

}'

-- extracting a key from json string column

SELECT <COLUMN>:<KEY> FROM <TABLE>

SELECT json_col:fname FROM <TABLE>Variant

Variant itself is not a Complex Data Type, but it converts the above types into semi structured format. Variant will be available in Spark 4.0, its currently in Public Release in Databricks and has been available in Snowflake.

JSON Blob Column of type <Variant> named variant_col:

parse_json(

'{

"fname": "junaid"

"lname": "effendi"

}'

)

-- extracting a key from variant column

SELECT <COLUMN>:<KEY> FROM <TABLE>

SELECT variant_col:fname FROM <TABLE>Querying Variant is similar to querying a JSON string, but its more performant and recommended.

Key Points

OBT is faster in terms of computation. Since all data is in one place there is no need to join hence no shuffle. However, OBT takes lot of storage space, but as we know

Storage is Cheaper than Compute.OBT is easier to maintain e.g. backfilling and governance.

OBT used with flatten approach may end up with hundreds or even thousands of columns:

Users may find it hard to follow.

Performance would be great as storage format is columnar.

OBT works well at the upstream level, downstream users may still create their own tables on top of it as per their needs.

Structured Data Types have benefits over Semi Structured Data Types:

Storage space savings as the data type is known. E.g. Date and Timestamp.

Faster queries as the key names are known. E.g. Struct. Query Optimizer will perform better.

Semi Structured Data Types comparison over Structured Data Types:

Provides more flexibility as Schema or Data Type is not required.

Degrades in performance due to unknown schema or types.

Map with

<String, String>type can be considered flexible as it can store basic types like Numeric as string. It auto converts them to string but this is not possible the other way around.Storing JSON blob as String is less performant than Storing it as Variant as JSON String require type conversion on read.

Struct fields are treated as columns, meaning same optimization and read performance, and support of schema evolution.

Comparison operations are not possible on Complex Data Types, e.g.

distinctdoes not work on Map.Delta Lake cannot compute statistics on Map and Array Types.

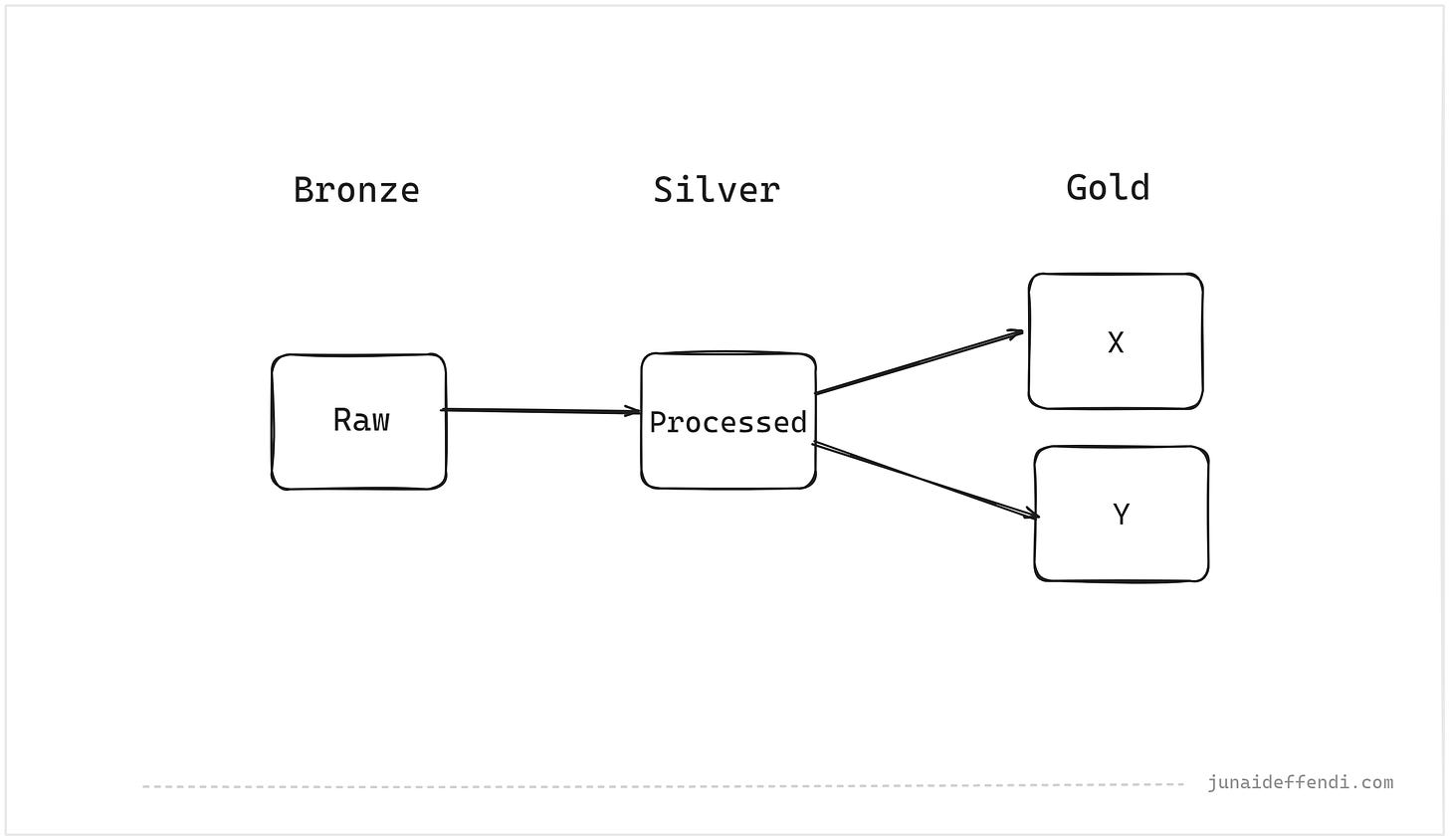

Real World Design

This is a pipeline design that I have been using today where I was responsible for Raw and Silver layers:

Raw table stores the source data as is in a String JSON format. (Variant not available as of now)

Storing in raw as is makes it easy to backfill and to refer data between raw and processed.

JSON over Structured Types mainly due to highly variable source data, this gives flexibility. Performance may degrade at the expense of easy to maintain, no schema evolution or schema registry required.

Processed table splits JSON into certain categories leveraging proper complex types like Map, Array and Struct.

Nested JSON blobs that rarely change can be split into separate columns in structured types. This makes querying easy and performant.

Array Types are not exploded into multiple rows as the downstream users can query subset and perform explode on their end directly, this is still performant as its in-memory computation, saves storage and additional data transfer over network. For bigger use case it might be worth to consider a Pre Exploded Table.

X and Y tables created by end users for their use case. E.g:

Pre Exploded Subset

Pre Aggregated Subset

ETC

💡Spark 4.0 will release cool features including the Variant Type, great news for Spark Fans.

💬 Let me know in the comments what would you consider when using the OBT model with Complex Data Types.

Talking about Spark: Worth to visit my Spark Series from the Past:

Thanks for the shoutout, Junaid.

Very good article friend! The other day I had a lot of headaches processing data like Struct in Spark, but I learned a lot!