A few years ago, when I started as a Data Engineer, batch processing was the dominant approach across the industry. In recent years, however, companies (B2C companies especially) have started moving toward an event-driven approach, because it gives them the opportunity to analyze data in real time and make key decisions based on user behavior.

For a Data Engineer, event-driven ETL comes with its own challenges as well as benefits. With data volumes growing quickly, data engineers are looking for better ways to ingest data in real time, and in an event-driven fashion in particular.

This article walks through a complete end-to-end event-driven solution, from ingesting data in real time using streaming technologies to modeling the event data for visualization and reporting. It is split into two parts: first the architecture, then the data modelling.

At my job at KHealth we use Google Cloud Platform (GCP), a fully managed platform that is easy to work with and comes with a lot of tools, so the architecture I share here is based on GCP.

Event-driven systems work as a source of truth for data. Events are easy to standardize across different applications, and therefore easy to consume or subscribe to as well.

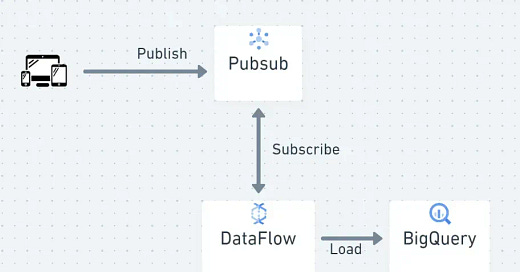

A simple architecture diagram in GCP may look like the one shown above.

Event Driven Data Architecture

Tracking users' behavior in a mobile or web app is now much easier thanks to an event-driven approach: you send data directly to a messaging service such as Kafka or PubSub and process it in real time using Apache Beam.

PubSub

PubSub (short for publish/subscribe) is a messaging service in GCP and, again, a fully managed one. A shared PubSub architecture across the company makes the data flow much easier to maintain and understand.

A custom wrapper can be built on top of the SDK and shared across teams so that everyone can use PubSub easily. A microservice architecture suits this scenario well: a subscriber can be created as a microservice that subscribes to a specific topic and moves the data into the required destination. DataFlow is an example of a managed microservice of this kind.
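As a rough illustration of such a shared wrapper, here is a minimal Python sketch. The class name `EventPublisher`, the envelope fields (`event_id`, `event_name`, `event_timestamp`, `data`) and the topic name are all assumptions made for this example, not part of any SDK. The actual send function is injected, so the sketch runs without GCP credentials; in a real setup it would delegate to the PubSub client library.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Any, Callable, Dict


class EventPublisher:
    """Hypothetical shared wrapper that gives every team the same event envelope."""

    def __init__(self, topic: str, send: Callable[[str, bytes], Any]) -> None:
        # `send` is injected so this sketch has no dependency on GCP.
        # In production it would hand the bytes to the PubSub client library.
        self.topic = topic
        self._send = send

    def publish(self, event_name: str, params: Dict[str, Any]) -> Dict[str, Any]:
        # Every event gets the same top-level fields; only `data` varies.
        envelope = {
            "event_id": str(uuid.uuid4()),
            "event_name": event_name,
            "event_timestamp": datetime.now(timezone.utc).isoformat(),
            "data": params,
        }
        self._send(self.topic, json.dumps(envelope).encode("utf-8"))
        return envelope


# Usage with an in-memory stand-in for the real send function.
sent = []
publisher = EventPublisher("user-events", lambda topic, data: sent.append((topic, data)))
publisher.publish("signup", {"first_name": "junaid", "last_name": "effendi"})
```

Keeping the envelope in one shared place is what makes the events easy to standardize: producers only decide the event name and its parameters.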

DataFlow

GCP offers DataFlow, which is based on Apache Beam. Its main purpose is to transform data in real time and load it into a destination; in our case we load it into the BigQuery data warehouse.
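The transform step itself is usually a small function applied to each message. The sketch below is plain Python with no Beam dependency, and the message layout (`event_name`, `event_timestamp`, `data`) and the function name `to_bigquery_row` are assumptions for illustration. In an Apache Beam pipeline, a function like this would typically be applied per element, for example with `beam.Map`.

```python
import json
from typing import Any, Dict


def to_bigquery_row(message: bytes) -> Dict[str, Any]:
    """Turn one raw message into a row dict for a nested BigQuery table."""
    event = json.loads(message.decode("utf-8"))
    return {
        "event_name": event["event_name"],
        "event_timestamp": event["event_timestamp"],
        # Parameters become repeated key/value records, so a new key in the
        # event does not require a new column in the destination table.
        "event_params": [
            {"key": key, "value": str(value)}
            for key, value in sorted(event.get("data", {}).items())
        ],
    }


raw = json.dumps(
    {
        "event_name": "signup",
        "event_timestamp": "2020-01-01T00:00:00+00:00",
        "data": {"first_name": "junaid", "last_name": "effendi"},
    }
).encode("utf-8")
row = to_bigquery_row(raw)
```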

Since these services are fully managed, it is easy to set up a PubSub to DataFlow to BigQuery pipeline.

BigQuery

BigQuery is a columnar data warehouse and one of the most powerful data processing tools on the market. It has recently gained popularity because it makes life easier for engineers, analysts and managers: there is no infrastructure to maintain, and it scales and optimizes queries automatically. We will talk about it more in the next section of the article.

Event Driven Data Modelling

Event-driven data modelling is quite different. One of the goals, for example, is to keep full track of users' behavior and journeys across the app, from paid marketing campaigns to their last event within the app.

Modelling heavily nested event data is easier than modelling relational tables, because cleaning duplicate data, joining multiple tables and extracting JSON into separate fields are not required to the same extent, and a few denormalized tables can get the job done. Unifying the event data is a good way to solve a lot of problems, especially since BigQuery is so powerful with nested and repeated structures. GCP itself follows and recommends this approach: for example, moving audit logs (JSON) into BigQuery using the sink functionality keeps the table heavily nested.

BigQuery comes with a STRUCT data type, a key-value data structure much like a dict in Python or a JSON object, and it can automatically detect and hold nested data. One of the biggest advantages of this modelling is that schema management becomes easier, because changes are usually made to events, which are nested structures, so they can be reflected seamlessly. Imagine how easy it becomes to add, update or remove a key-value pair or an event.

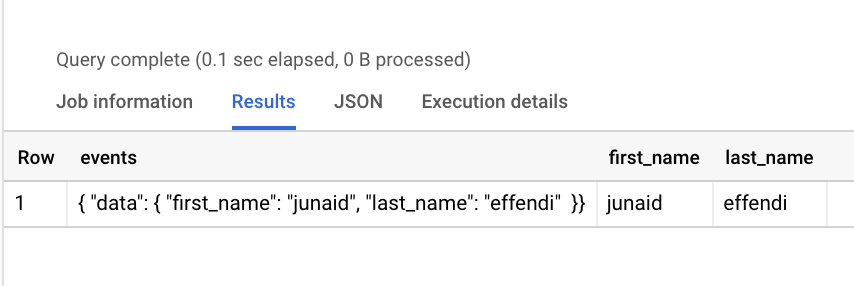

Here are some BigQuery JSON and struct functions. If you have a JSON string:

    SELECT JSON_EXTRACT_SCALAR(<column>, '$.key.key') FROM <table>

A JSON column events_param holding '{ "data": { "first_name": "junaid", "last_name": "effendi" }}' can be extracted like this:

    WITH events AS (
      SELECT
        '{ "data": { "first_name": "junaid", "last_name": "effendi" }}' AS events_param
    )
    SELECT
      events_param
      , JSON_EXTRACT_SCALAR(events_param, '$.data.first_name') AS first_name
      , JSON_EXTRACT_SCALAR(events_param, '$.data.last_name') AS last_name
    FROM events

If you have a proper struct, extraction is more efficient because it is a native data structure:

    (SELECT STRING_AGG(DISTINCT value) FROM UNNEST(event_params) WHERE key = 'name')

Used inside a full query:

    WITH events AS (
      SELECT
        [
          STRUCT<key STRING, value STRING>
          ('first_name', 'junaid')
          , ('last_name', 'effendi')
        ] AS event_params
    )
    SELECT
      event_params
      , (SELECT STRING_AGG(DISTINCT value) FROM UNNEST(event_params) WHERE key = 'first_name') AS first_name
      , (SELECT STRING_AGG(DISTINCT value) FROM UNNEST(event_params) WHERE key = 'last_name') AS last_name
    FROM events

Nested structures work well for base/ingestion layer tables. For datamarts and other common scenarios it is still better to flatten the data out, so you don't have to go back and forth repeating the same JSON extraction.
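To make the idea of flattening concrete, here is a small Python sketch of what happens to one nested record when it is turned into flat columns. The `flatten` helper and the underscore naming of the resulting columns are assumptions for this example; in a warehouse the same step would more likely be written once as SQL in the datamart layer.

```python
from typing import Any, Dict


def flatten(record: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Recursively turn nested dicts into a single level of column names."""
    flat: Dict[str, Any] = {}
    for key, value in record.items():
        column = f"{prefix}{key}"
        if isinstance(value, dict):
            # Descend into the nested object, carrying the parent name along.
            flat.update(flatten(value, prefix=f"{column}_"))
        else:
            flat[column] = value
    return flat


flat_row = flatten({"data": {"first_name": "junaid", "last_name": "effendi"}})
# flat_row == {"data_first_name": "junaid", "data_last_name": "effendi"}
```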

This was an overview of how event-driven systems can be a really good option, especially in GCP. There is one problem with the nested structure, though: data discovery becomes difficult. The current GCP data discovery tool, Data Catalog, works well on relational, unnested tables, but with nested data one does not know what is actually inside the nested structure. If someone asks which keys exist in a JSON column, Data Catalog has no answer. You have to query the table to find out, which can sometimes be costly.
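One possible workaround is to profile a sample of the JSON column and record which key paths appear and how often. The sketch below assumes the sampled rows have already been fetched as JSON strings; the function names `key_paths` and `profile_keys` are hypothetical, and this is not a Data Catalog feature.

```python
import json
from collections import Counter
from typing import Any, Iterable, Iterator


def key_paths(value: Any, prefix: str = "$") -> Iterator[str]:
    """Yield every key path found in a parsed JSON value."""
    if isinstance(value, dict):
        for key, child in value.items():
            path = f"{prefix}.{key}"
            yield path
            yield from key_paths(child, path)
    elif isinstance(value, list):
        # All array elements share one path, marked with [].
        for child in value:
            yield from key_paths(child, f"{prefix}[]")


def profile_keys(json_rows: Iterable[str]) -> Counter:
    """Count in how many sampled rows each key path occurs."""
    counts: Counter = Counter()
    for raw in json_rows:
        counts.update(set(key_paths(json.loads(raw))))
    return counts


sample = [
    '{ "data": { "first_name": "junaid", "last_name": "effendi" }}',
    '{ "data": { "first_name": "junaid" }}',
]
key_counts = profile_keys(sample)
```

Running this on a limited sample rather than the full table keeps the cost of the discovery query bounded, at the price of possibly missing rare keys.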