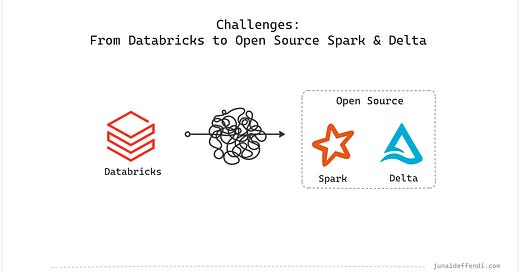

Challenges: From Databricks to Open Source Spark & Delta

Sharing the challenges I ran into, to save you hours when migrating from Databricks to open source.

Databricks is a powerful platform that helps you deploy Spark jobs easily and quickly. However, it is expensive, especially for streaming jobs, so you may want to consider an alternative.

In this article, I will focus on the following challenges I faced when moving our streaming jobs from Databricks to open source Spark and Delta while keeping Databricks as a querying platform for the unmanaged Delta tables.

Kinesis Connector

Delta Features

Spark & Delta Compatibility

Vacuum Job

Spark Optimization

⭐If you are interested in learning how to deploy a streaming Spark job with Delta on Kubernetes, check out this article:

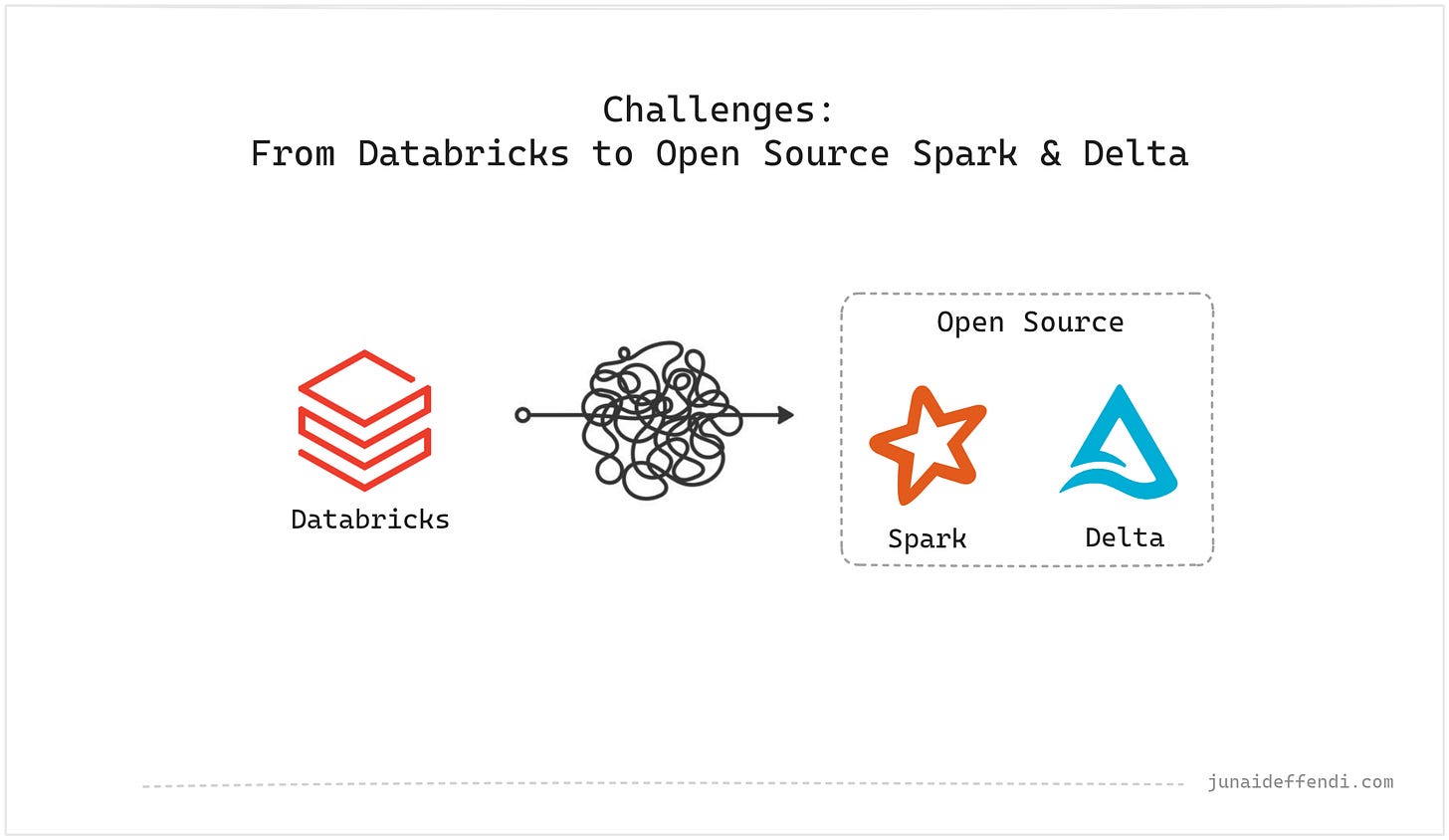

Architecture

Challenges

Let's dive into the key challenges and differences:

Kinesis Connector

Databricks provides a Kinesis connector with numerous configuration options, which makes it user-friendly. Moving to open source means finding a suitable replacement, such as the AWS Kinesis connector. However, open source Kinesis connectors for Spark expose far fewer options, which often forces trade-offs. One key example is the shards-per-task configuration.

💡The same may apply to other connectors, such as Kafka.
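To make the shards-per-task trade-off concrete, here is a back-of-the-envelope sketch. It assumes each Spark task reads a fixed number of shards. Databricks exposes this as the `shardsPerTask` option of its Kinesis source; the default of one shard per task used below for the open source side is an assumption, not the documented behaviour of any particular connector. The function names are hypothetical.

```python
import math


def tasks_needed(num_shards: int, shards_per_task: int = 1) -> int:
    """Spark tasks needed to read every shard in one micro-batch.

    Assumes each task reads `shards_per_task` shards. The default of 1 models a
    connector without a shards-per-task option (an assumption for illustration).
    """
    if num_shards < 1 or shards_per_task < 1:
        raise ValueError("num_shards and shards_per_task must be >= 1")
    return math.ceil(num_shards / shards_per_task)


def executors_needed(num_shards: int, shards_per_task: int, cores_per_executor: int) -> int:
    """Executors required so that all reading tasks can run at the same time."""
    if cores_per_executor < 1:
        raise ValueError("cores_per_executor must be >= 1")
    return math.ceil(tasks_needed(num_shards, shards_per_task) / cores_per_executor)


if __name__ == "__main__":
    # 40 shards and 4 cores per executor: 5 shards per task vs. 1 shard per task.
    print(executors_needed(40, 5, 4))
    print(executors_needed(40, 1, 4))
```

Under these assumptions, 40 shards read 5 per task need 8 tasks (2 four-core executors), while one shard per task needs 40 tasks (10 executors), so the cluster ends up sized by shard count rather than by data volume.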

Delta Features

New Delta features are released in Databricks before they reach open source, which can create a mismatch between the two. If an unmanaged Delta table is updated from Databricks, the Delta log can end up with properties that open source Delta cannot handle, and the pipeline will fail. For example, running OPTIMIZE on the unmanaged Delta table in Databricks added a new table property, which broke the streaming side because open source did not yet support that feature. This is what I faced initially when using 3.1.0:

Delta failed to recognize the row tracking property on table XYZ.

To prevent this, perform all WRITE operations through open source and use Databricks only for READ operations. To avoid accidental updates, control access to schemas and tables through Unity Catalog (UC).
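A cheap guard is to check which table features the Delta log declares before the open source job writes to it. The sketch below replays the `protocol` and `metaData` actions from the JSON commit files in `_delta_log` (part of the Delta transaction log format) and reports writer features that are missing from a list you maintain for your open source version. The `supported` set and the helper names are hypothetical, and Parquet checkpoints are not read, so treat this as a rough check rather than a full log reader.

```python
import json
import re
from pathlib import Path

# Commit files are named with a 20-digit zero-padded version, e.g. 00000000000000000000.json
COMMIT_FILE = re.compile(r"\d{20}\.json")


def read_protocol_and_properties(table_path):
    """Replay JSON commits in _delta_log; return the last protocol and table properties seen.

    Checkpoint (Parquet) files are ignored, so results are only reliable while the
    commits that set the protocol and metadata are still present as JSON.
    """
    log_dir = Path(table_path) / "_delta_log"
    protocol, properties = {}, {}
    # Zero-padded names mean lexical order equals version order.
    commits = sorted(p for p in log_dir.iterdir() if COMMIT_FILE.fullmatch(p.name))
    for commit in commits:
        with commit.open(encoding="utf-8") as f:
            for line in f:
                if not line.strip():
                    continue
                action = json.loads(line)
                if "protocol" in action:
                    protocol = action["protocol"]
                elif "metaData" in action:
                    properties = action["metaData"].get("configuration") or {}
    return protocol, properties


def unsupported_writer_features(table_path, supported):
    """Writer features declared on the table that are not in `supported`."""
    protocol, _ = read_protocol_and_properties(table_path)
    return sorted(set(protocol.get("writerFeatures", [])) - set(supported))
```

Running this as a pre-flight step (for example, failing the deployment if the returned list is non-empty) turns a streaming crash into an explicit, early error.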

Spark & Delta Compatibility

This is not a big challenge, but it is worth remembering: the Databricks Runtime bundles compatible versions of Spark and Delta, whereas with open source we need to make sure the two stay compatible with each other ourselves.

💡Check the compatibility matrix: https://docs.delta.io/latest/releases.html
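One way to keep the two in step is a fail-fast check at job startup. The sketch below hard-codes an excerpt of the Delta-to-Spark table from the release page above, matched on MAJOR.MINOR; the excerpt is transcribed by hand and may be out of date, so verify it against that page. The names are hypothetical.

```python
# Excerpt of the Delta Lake -> Apache Spark compatibility matrix, transcribed from
# https://docs.delta.io/latest/releases.html. Verify against that page before use.
DELTA_TO_SPARK = {
    "1.0": "3.1",
    "1.1": "3.2", "1.2": "3.2", "2.0": "3.2",
    "2.1": "3.3", "2.2": "3.3", "2.3": "3.3",
    "2.4": "3.4",
    "3.0": "3.5", "3.1": "3.5", "3.2": "3.5",
}


def major_minor(version: str) -> str:
    """Reduce a version string such as '3.1.0' to '3.1'."""
    parts = version.split(".")
    if len(parts) < 2:
        raise ValueError(f"expected at least MAJOR.MINOR, got {version!r}")
    return ".".join(parts[:2])


def is_compatible(delta_version: str, spark_version: str) -> bool:
    """True if the Spark MAJOR.MINOR matches what this Delta release was built for."""
    expected = DELTA_TO_SPARK.get(major_minor(delta_version))
    if expected is None:
        raise KeyError(f"Delta {delta_version} is not in this excerpt; check the release notes")
    return major_minor(spark_version) == expected
```

At startup you could pass in `pyspark.__version__` and `importlib.metadata.version("delta-spark")` and abort when `is_compatible` returns False, instead of discovering the mismatch through a runtime `ClassNotFoundException` or similar.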

Vacuum Job

Databricks handles the cleanup of Delta tables through automatic VACUUM, which is not the case in open source. We need to schedule a VACUUM batch job, just as we do for OPTIMIZE. This is recommended to keep storage costs down.

💡Learn more about Vacuum: https://docs.delta.io/0.4.0/delta-utility.html#vacuum
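A scheduled VACUUM job can be as small as the sketch below, which builds the path-based `VACUUM delta.`<path>` RETAIN <n> HOURS` statement for unmanaged tables and runs it through an existing SparkSession. It assumes the session already has Delta's SQL extension configured (`spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension`, `spark.sql.catalog.spark_catalog=org.apache.spark.sql.delta.catalog.DeltaCatalog`); the function names and the example table path are hypothetical.

```python
# Delta's default deleted-file retention (delta.deletedFileRetentionDuration) is 7 days.
DEFAULT_RETENTION_HOURS = 7 * 24


def vacuum_statement(table_path: str, retain_hours: int = DEFAULT_RETENTION_HOURS) -> str:
    """Build a path-based VACUUM statement for an unmanaged Delta table."""
    if retain_hours < DEFAULT_RETENTION_HOURS:
        # Roughly mirrors Delta's own retention check: a shorter window can delete
        # files that concurrent readers or a lagging stream still need.
        raise ValueError(f"retain_hours={retain_hours} is below the {DEFAULT_RETENTION_HOURS}h default")
    return f"VACUUM delta.`{table_path}` RETAIN {retain_hours} HOURS"


def run_vacuum(spark, table_paths, retain_hours: int = DEFAULT_RETENTION_HOURS) -> None:
    """Run VACUUM on each table using an existing, Delta-enabled SparkSession."""
    for path in table_paths:
        spark.sql(vacuum_statement(path, retain_hours))
```

For example, `run_vacuum(spark, ["s3a://my-bucket/events"])` (a hypothetical path) could run once a day as its own batch job, e.g. a Kubernetes CronJob next to the OPTIMIZE job, so it never competes with the streaming query for executors.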

Spark Optimization

With open source Spark, you need to optimize your WRITE and READ operations yourself. A good example is that streaming WRITE operations can lead to the small file problem, which makes READ and UPDATE operations very slow.

💡OPTIMIZE then becomes the bottleneck, as it has to compact a large number of small files.

The problem has a few solutions, from setting the right number of cores to performing an optimized write. In streaming, you need to find the balance between WRITE and READ performance.
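The sketch below shows why the file count explodes and one manual way to tame it: estimate files per day from the trigger interval, then coalesce each micro-batch to roughly `batch_size / target_file_size` partitions inside `foreachBatch`. This is a hand-rolled alternative, not Delta's optimized write feature. The 128 MiB target, the helper names, and the estimated batch size are assumptions; `txnAppId`/`txnVersion` are Delta's options for idempotent writes from `foreachBatch`.

```python
import math


def files_per_day(partitions_per_batch: int, trigger_seconds: int) -> int:
    """Rough file count: each micro-batch writes about one file per non-empty partition."""
    batches_per_day = math.ceil(86_400 / trigger_seconds)
    return partitions_per_batch * batches_per_day


def target_partitions(batch_bytes: int, target_file_bytes: int = 128 * 1024 * 1024) -> int:
    """Partitions needed so each written file is close to target_file_bytes."""
    return max(1, math.ceil(batch_bytes / target_file_bytes))


def make_batch_writer(table_path: str, estimated_batch_bytes: int, app_id: str):
    """Build a foreachBatch function that writes fewer, larger files per micro-batch."""
    n = target_partitions(estimated_batch_bytes)

    def write_batch(batch_df, epoch_id):
        # coalesce() only reduces partitions (no shuffle); repartition(n) could also
        # increase them, at the cost of a shuffle.
        (batch_df.coalesce(n)
            .write.format("delta")
            .option("txnAppId", app_id)       # with txnVersion, lets Delta skip a
            .option("txnVersion", epoch_id)   # batch it already committed on retry
            .mode("append")
            .save(table_path))

    return write_batch
```

For example, 8 partitions with a 10-second trigger give `files_per_day(8, 10)`, about 69,120 files a day for OPTIMIZE to compact. The writer plugs in as `df.writeStream.foreachBatch(make_batch_writer(path, size, "events-job")).option("checkpointLocation", ckpt).start()`; a longer trigger interval is the other lever, trading latency for fewer, larger files.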

If you are planning a similar transition, I hope this article helps.


💬Leave a comment to help others with more challenges and solutions.