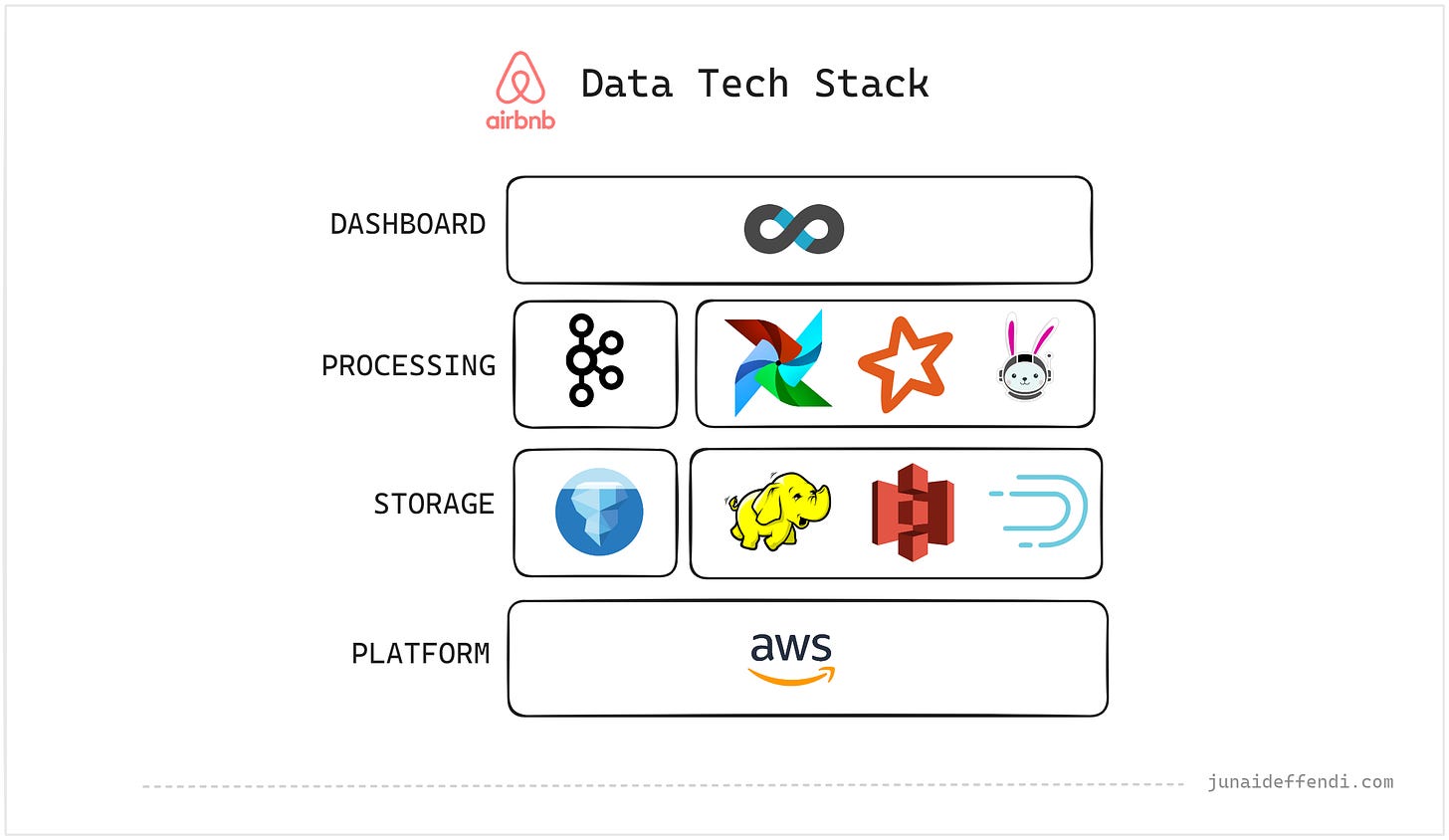

Airbnb Data Tech Stack

Learn about the Data Tech Stack used by Airbnb to process billions of data points every day.

Airbnb is another big tech company that deals with a massive volume of data. As per this article, Airbnb's data ingestion processes more than 35 billion events per day. Airbnb's data stack is built on open source solutions such as Kafka and Airflow, which we will cover in detail in a bit. Today, let's dive into the tech stack from a Data Engineering perspective.

Airbnb has played a vital role in defining the Data Engineering role and has contributed several successful projects to open source.

Content is based on multiple sources, including the Airbnb blog, open source project websites, etc.

Let's go over each component at a high level:

Platform

AWS

Airbnb uses AWS as its cloud platform and relies on many AWS services, from front end to back end and from online to offline.

Storage

HDFS

HDFS has been a core part of Airbnb's data infrastructure, holding tens of petabytes of data in Hadoop clusters running on AWS EC2 instances. HDFS provides the storage, with Hive support on top.

Iceberg

Airbnb has recently started moving from the traditional Hive format to Iceberg, taking its data platform to the next level with open table format features such as ACID capabilities, time travel, and data compaction.
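To make the "time travel" idea concrete, here is a toy sketch in plain Python. It is not the Iceberg API and says nothing about how Airbnb uses it; `ToyTable`, `commit`, and `read` are hypothetical names invented for illustration. It only shows the core idea that every write produces a new immutable snapshot, so older versions of the table stay readable.

```python
from dataclasses import dataclass, field


@dataclass
class ToyTable:
    """Toy model of a snapshot-based table (hypothetical, not Iceberg's API)."""

    snapshots: list = field(default_factory=list)

    def commit(self, rows):
        """Store a new immutable snapshot of the full table and return its id."""
        self.snapshots.append(tuple(rows))
        return len(self.snapshots) - 1

    def read(self, snapshot_id=None):
        """Read the latest snapshot, or an older one when snapshot_id is given."""
        if snapshot_id is None:
            snapshot_id = len(self.snapshots) - 1
        return list(self.snapshots[snapshot_id])


table = ToyTable()
v0 = table.commit([("listing_1", 120)])
v1 = table.commit([("listing_1", 135), ("listing_2", 90)])

latest_rows = table.read()      # current state of the table
old_rows = table.read(v0)       # "time travel" back to the first commit
print(latest_rows)
print(old_rows)
```

A real table format tracks snapshots through metadata and manifest files rather than copying rows, but the reader-facing behavior is similar: a query can target a specific earlier snapshot.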

S3

S3 has been widely used alongside HDFS as a storage solution. It is now a central part of the modernization effort as Airbnb moves away from HDFS. S3 and Iceberg work together to provide a seamless Lakehouse architecture.

Druid

Airbnb uses Druid for real-time analytical use cases. It connects to multiple sources to provide real-time insights. Read more about how Airbnb leverages Druid.

Processing

Kafka

Airbnb's upstream architecture is event driven, and to handle millions of events per second, Airbnb deploys large clusters of open source Kafka.
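Daily totals and per-second rates are easy to conflate, so a quick back-of-the-envelope conversion helps. The sketch below takes the 35 billion events per day figure quoted in the introduction and converts it to an average per-second rate. It assumes traffic is spread evenly across the day, which real traffic is not; peak rates are not given in the sources used here and would be higher than this average.

```python
# Figure quoted in the introduction: more than 35 billion events per day.
events_per_day = 35_000_000_000
seconds_per_day = 24 * 60 * 60  # 86,400

# Average rate, assuming a perfectly even spread over the day (a simplification).
avg_per_second = events_per_day / seconds_per_day

print(f"{avg_per_second:,.0f} events per second on average")
# → 405,093 events per second on average
```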

Airflow

Airflow is heavily used at Airbnb for batch pipeline orchestration. Since Airflow was created at Airbnb, the company has been using and evolving the orchestrator to fit its needs while also giving back to the community.
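At its core, batch orchestration means running tasks in an order that respects their dependencies. The sketch below illustrates that idea with Python's standard library `graphlib` module rather than Airflow itself. The task names are hypothetical, and this is not how an Airflow DAG is declared; it only shows the dependency-ordering step an orchestrator performs before scheduling work.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_events": set(),
    "clean_events": {"extract_events"},
    "aggregate_bookings": {"clean_events"},
    "aggregate_searches": {"clean_events"},
    "publish_dashboard": {"aggregate_bookings", "aggregate_searches"},
}


def run_order(dag):
    """Return one valid execution order in which every task follows its dependencies."""
    return list(TopologicalSorter(dag).static_order())


order = run_order(pipeline)
print(order)
```

The two aggregation tasks have no dependency on each other, so an orchestrator is free to run them in parallel. A real scheduler adds retries, scheduling intervals, and monitoring on top of this ordering.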

Spark

Airbnb uses Spark for both real-time and batch processing workloads: Spark Streaming with Kafka, and Spark batch jobs orchestrated with Airflow.

Trino

Trino is used as the SQL layer on top of the new Lakehouse that Airbnb has been working towards. This gives data consumers the ability to write SQL that reads large-scale data directly from S3.

💡Airpal (Presto) may still be used today for querying legacy data, but as per the recent Trino Summit, Trino is the interactive compute engine for ad hoc analysis.

Dashboard

Superset

Airbnb uses the open source tool Superset (created within Airbnb) for dashboards and visualizations. All internal data stores are integrated with Superset to provide a seamless experience.

💡Airbnb has several in-house tools, e.g. Metis for data management and Minerva for metrics.

💬Airbnb seems to have many moving pieces, and it's likely that multiple teams use different solutions. Let me know in the comments if I missed anything.