5 Real-Time Pipeline Architectures on the Cloud

Streaming data pipeline architectures from my experience working at multiple companies, leveraging AWS, GCP and open source services.

Real-time, or streaming, data processing has been on the rise in recent years, and companies have approached it in various ways. Change Data Capture and Event Sourcing pipelines were commonly built on the Lambda architecture. More recently, the Kappa design approach has become dominant, and teams are leveraging it to keep their systems simple and easy to maintain while keeping one source of truth for various data assets with real-time decision-making capability.

Batch processes are still important and will not go away anytime soon; they are mostly becoming downstream processes of the streaming pipeline.

In this article, I will share a few different architectures that you can consider when building your streaming infrastructure. I will keep the scope of this article very high level.

👉 This article will focus on the architectures that I have mostly worked on.

Components

For streaming pipelines, we need a certain set of tools and technologies. Let's first look at those from a general perspective.

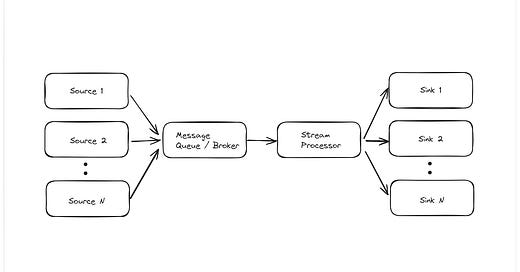

A typical pipeline has the following structure:

Source(s) -> Message Queue/Broker -> Stream Processor -> Sink(s)
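To make the template concrete, here is a minimal in-memory sketch of the four stages. Everything in it is an assumption for illustration: the `source`, `process` and `run_pipeline` names are hypothetical, a `deque` stands in for the queue/broker and a plain list stands in for the sink. It is not how any of the services below are implemented.

```python
from collections import deque


def source():
    """Producer: yields raw events (hypothetical click events)."""
    yield {"user": "a", "action": "click"}
    yield {"user": "b", "action": "view"}
    yield {"user": "a", "action": "click"}


def process(event):
    """Stream processor: a simple per-event transformation."""
    return {**event, "is_click": event["action"] == "click"}


def run_pipeline():
    broker = deque()  # stands in for the message queue/broker
    sink = []         # stands in for the storage layer

    # Source -> Message Queue/Broker
    for event in source():
        broker.append(event)

    # Message Queue/Broker -> Stream Processor -> Sink
    while broker:
        sink.append(process(broker.popleft()))
    return sink


result = run_pipeline()
```

In a real deployment each stage runs as its own service and the stages run concurrently; the sketch only shows the direction in which the data flows.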

Source:

A service or application that produces the data (aka producer). It can produce data through event sourcing or change data capture. Example:

Event Sourcing: Mobile or Web Apps

CDC: Databases (AWS RDS)

Message Queue/Broker:

To handle messages coming from the producer, a message handler is needed, which can be a queue or a broker. Example:

Queue: AWS SQS, RabbitMQ, GCP PubSub

Broker: Apache Kafka, AWS Kinesis

💡Message queues typically remove a message once a consumer has processed it, while log-based brokers keep the data on disk for a configured retention period. This lets users replay messages and build repeatable, idempotent processing at scale, similar to batch processes.

Since brokers persist data on disk, they can also be considered a storage layer or database.
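The difference between the two can be sketched with two toy classes. `SimpleQueue` and `SimpleLog` are hypothetical names used only for this illustration and do not mirror the API of SQS, Kafka or any other service. The point is that a consumed queue message is gone, while a log can be read again from any offset.

```python
from collections import deque


class SimpleQueue:
    """Queue semantics: a message is gone once it has been consumed."""

    def __init__(self):
        self._messages = deque()

    def publish(self, message):
        self._messages.append(message)

    def consume(self):
        return self._messages.popleft() if self._messages else None


class SimpleLog:
    """Log semantics: records stay in place; consumers track an offset."""

    def __init__(self):
        self._records = []

    def publish(self, message):
        self._records.append(message)

    def read_from(self, offset):
        return self._records[offset:]


queue, log = SimpleQueue(), SimpleLog()
for msg in ["m1", "m2", "m3"]:
    queue.publish(msg)
    log.publish(msg)

first_pass_queue = [queue.consume() for _ in range(3)]
second_pass_queue = queue.consume()  # nothing left to replay

first_pass_log = log.read_from(0)
replayed_log = log.read_from(0)      # the same records can be read again
```

Being able to re-read from an earlier offset is what makes it possible to rerun a streaming job over the same input, much like rerunning a batch job.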

Stream Processor:

A stream processor reads messages from the queue/broker, depending on the configuration, and performs transformations, aggregations etc. Example:

Apache Spark (Structured Streaming), Apache Flink, Apache Beam

Sink:

A data storage layer that persists the data produced by the stream processor in the format and structure that downstream consumers expect. Example:

Object Stores (AWS S3), Data Lake Formats (Apache Iceberg), Data Warehouse (Snowflake), Message Broker (Apache Kafka)

Typically these downstream consumers are batch processes. However, another common pattern is a streaming pipeline becoming the producer for another streaming pipeline.

Architecture

Let's look at some architectures built by plugging different technologies into the above pipeline template.

There is no right or wrong choice; it depends on the use case, resources, current platform, cost etc.

The following architectures can solve the two common data engineering pipeline patterns: Change Data Capture and Event Sourcing.

Pipeline #1

A common approach from the days when log-based message brokers were not that popular, though it is still used today. It leverages managed services from AWS.

Application: Data source that sends messages

AWS SNS: Publish and Subscribe service, fans out messages to each subscriber

AWS SQS: Message queue that buffers messages for consumers (standard queues offer best-effort ordering; strict FIFO ordering requires a FIFO queue)

Microservice: Custom service that reads the messages from queue, running on AWS infra e.g. EKS

AWS S3: Object store for storing data

💡When using a queue, it's important to handle failed messages through a Dead Letter Queue, otherwise messages could be lost.
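The dead letter pattern can be sketched as follows. This is a simplified in-memory illustration, not the SQS API: `MAX_RECEIVES`, `handle` and `drain` are hypothetical names, and the retry limit of 3 is an assumed value. A message that keeps failing is moved to a separate queue instead of being dropped or retried forever.

```python
from collections import deque

MAX_RECEIVES = 3  # assumed retry limit before a message is dead-lettered


def handle(message):
    """Hypothetical handler: fails for payloads without a user."""
    if "user" not in message["body"]:
        raise ValueError("missing user")
    return message["body"]["user"]


def drain(queue, dead_letter_queue):
    processed = []
    while queue:
        message = queue.popleft()
        message["receives"] += 1
        try:
            processed.append(handle(message))
        except ValueError:
            if message["receives"] >= MAX_RECEIVES:
                dead_letter_queue.append(message)  # park it for inspection
            else:
                queue.append(message)              # put it back for a retry
    return processed


queue = deque([
    {"body": {"user": "a"}, "receives": 0},
    {"body": {}, "receives": 0},
    {"body": {"user": "b"}, "receives": 0},
])
dlq = deque()
processed = drain(queue, dlq)
```

The malformed message ends up in `dlq` after three attempts, where it can be inspected and replayed once the bug or bad payload is fixed, while the healthy messages are processed normally.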

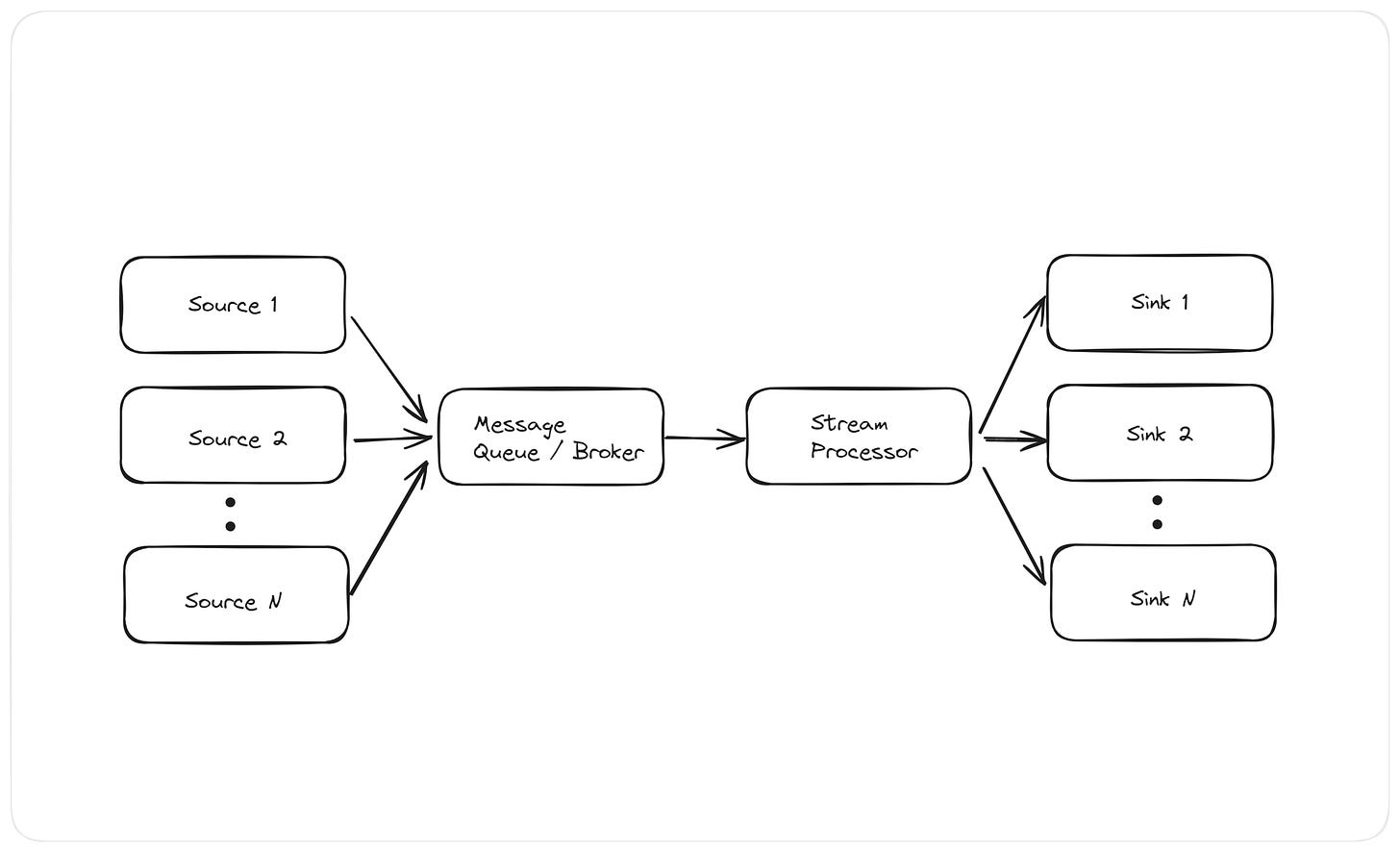

Pipeline #2

Another approach leveraging AWS managed services that is easy to deploy and maintain. Spark Structured Streaming and Delta Lake can run on the Databricks managed service or on AWS infra e.g. EMR and S3.

Application: Data source that sends messages

AWS Kinesis: Log based message broker for handling data at scale

Apache Spark (Structured Streaming): Stream Processing engine that processes data in micro batches

Delta Lake: Open Table Format supporting ACID transactions on files stored in object stores, e.g. S3.
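The micro batch idea can be illustrated in plain Python. This is a sketch of the concept only and does not use Spark: `micro_batches` is a hypothetical helper, and it groups events by a fixed count for simplicity, whereas real engines typically form batches based on a trigger.

```python
from collections import Counter
from itertools import islice


def micro_batches(stream, batch_size):
    """Group an iterator of events into lists of at most batch_size."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


events = ["click", "view", "click", "click", "view"]
running_counts = Counter()
snapshots = []

for batch in micro_batches(events, batch_size=2):
    running_counts.update(batch)            # state carried across batches
    snapshots.append(dict(running_counts))  # result after each micro batch
```

Each entry in `snapshots` is the aggregate as of one micro batch, which is roughly what gets written to the sink after every batch in this style of processing.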

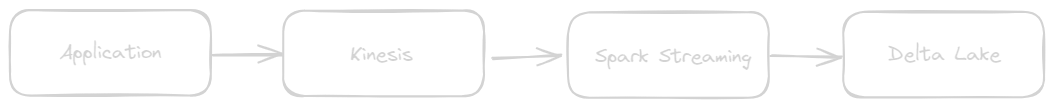

Pipeline #3

A pipeline design specifically for Change Data Capture that uses database logs to capture changes. Read the detailed post on this: Real Time CDC Pipeline

AWS RDS: Relational Database Service, can spin up multiple types of databases e.g. MySQL, Postgres etc.

AWS DMS: Database Migration Service, migrates data from one system to another and can continuously replicate ongoing changes

AWS S3: Object store for storing data

💡Database logs are also used in data recovery and database monitoring, e.g. binlog for MySQL.
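To show what consuming a change log looks like downstream, here is a minimal sketch that replays change events onto an in-memory copy of a table. The event shape (`op`, `id`, `data`) and the `apply_change` function are assumptions made for this example; they are not the record format that DMS or the MySQL binlog actually produces.

```python
def apply_change(table, change):
    """Apply a single change event to an in-memory copy of the table."""
    op, key = change["op"], change["id"]
    if op in ("insert", "update"):
        # Merge the changed columns into the existing row, if any.
        table[key] = {**table.get(key, {}), **change["data"]}
    elif op == "delete":
        table.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")
    return table


# Hypothetical change events, in the order they were committed.
change_log = [
    {"op": "insert", "id": 1, "data": {"name": "Ann", "plan": "free"}},
    {"op": "insert", "id": 2, "data": {"name": "Bob", "plan": "free"}},
    {"op": "update", "id": 1, "data": {"plan": "pro"}},
    {"op": "delete", "id": 2},
]

replica = {}
for change in change_log:
    apply_change(replica, change)
```

Replaying the events in commit order reproduces the current state of the source table, which is why ordering matters so much in CDC pipelines.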

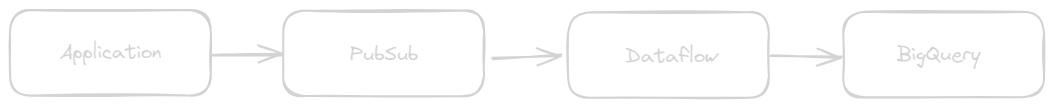

Pipeline #4

A fully managed Google Cloud Platform pipeline that is very easy to build and manage. No infrastructure management is required; a couple of clicks in the UI can build the pipeline. Related reading: Event Driven Pipeline

Application: Data source that sends messages

GCP PubSub: Message queue service

GCP Dataflow: Managed Apache Beam service, a unified data processing engine for processing large amounts of data in real time

GCP BigQuery: Data Warehouse for storing data

👉 Migrated from an open source Kafka and custom microservices design to this GCP managed one at my previous company, KHealth.

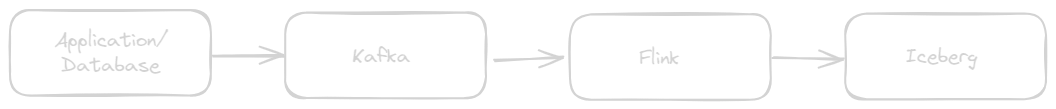

Pipeline #5

I have not used this exact pipeline, but it is a very common industry pattern, which is why I thought it deserves to be on this list. Companies like Netflix and Adobe leverage these open source frameworks to build real-time pipelines.

Application/Database: Data source that sends messages (connecting to a database requires additional setup e.g. Kafka Connect or Apache NiFi)

Apache Kafka: Open Source Log based Streaming platform with high throughput and low latency

Apache Flink: Unified data processing engine with advanced configurable features

Apache Iceberg: Open Table Format supporting ACID transactions on files stored in object stores, e.g. S3 and GCS. (Similar to Delta)

💡AWS offers managed services for both Kafka (Amazon MSK) and Flink (Amazon Managed Service for Apache Flink).

Most of the pipeline architectures shared above are ones that I have worked with in my career. There are many alternatives to each component, and you can mix and match them to fit your use case. The right choice depends heavily on what platform you are using and on your engineering culture's attitude towards OSS.

Leave a comment or message me if you are interested in understanding more about each one in detail.