Orchestrating Data Built Tools jobs with Airflow looks pretty straight forward, but in reality it comes with multiple different approaches dealing with different design patterns to solve a complex issue. DBT Cloud has its own scheduler and many teams that have less technical folks are happy with that in doing basic schedules without the need of external dependencies, but when the dependency management gets complicated you still have to use a tool that can solve those challenges smoothly, and the most popular one is the Airflow.

In this article, the goal is to give some approaches with their pros and cons when deciding to schedule DBT jobs via Airflow. With my experience more on the DBT Core (Open Source), this article will mainly be evolved around it. However, a similar pattern can be implemented using the DBT Cloud using the DBT APIs. Also, this article is best to read if you have a basic understanding of both DBT and Airflow.

At KHealth, we self hosted DBT on our Kubernetes Cluster and migrated our models from Airflow BQOperator to DBT KubernetesPodOperator, however, one of the biggest challenge we faced at the time of migration was the design of Airflow DAG for the DBT Jobs, in simple words identifying the relationship between DBT DAG and models with Airflow DAG and Tasks.

There are multiple ways to design, let’s first understand the common terminologies in DBT and Airflow.

DBT has DAG consisting of one or more Models

Airflow has DAG consisting of one or more Tasks

Let’s assume we have DBT with this basic model with the following directory structure

models/

example/

jobA.sql

jobB.sql

jobC.sql

schema.ymlWhere the lineage looks like jobA -> jobB -> jobC

Also, consider each job has its own test defined in schema.yml.

Running locally or manually would be very simple.

dbt run -m example

dbt test -m exampleAlso, assume that we have an external dependency of a python script which depends on jobA. Let’s try to schedule that into multiple ways using Airflow.

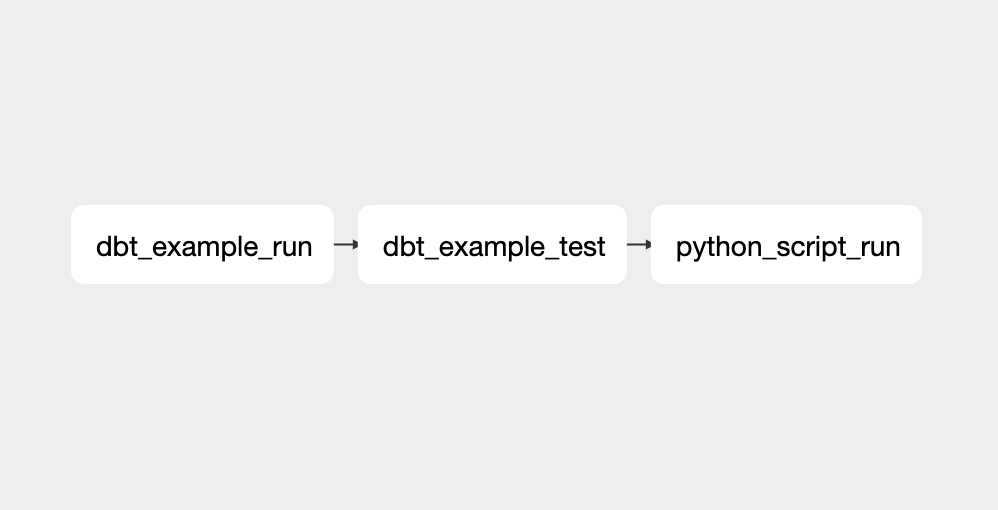

One Airflow Task per DBT DAG

This is the simplest approach and easiest in terms of scheduling the DAG. In our case, three jobs will become one Airflow task with its three tests combined into one test task, it will trigger the python script once the whole example model is completed.

Pros

Easy to schedule, especially for non tech folks if they contribute to shared Airflow.

Making the most of DBT by running the multiple models, everything or per tag with one single command, just like you do from your local machine.

Cons

Lack of observability and visibility, in order to find which model failed in Airflow we will have to debug via logs.

Partial rerunnability, this approach does not allow to rerun partial dags, it’s either full rerun or no run.

External dependencies, no functionality to trigger external jobs like python script after a certain model is completed. Can only run once the whole dag is completed.

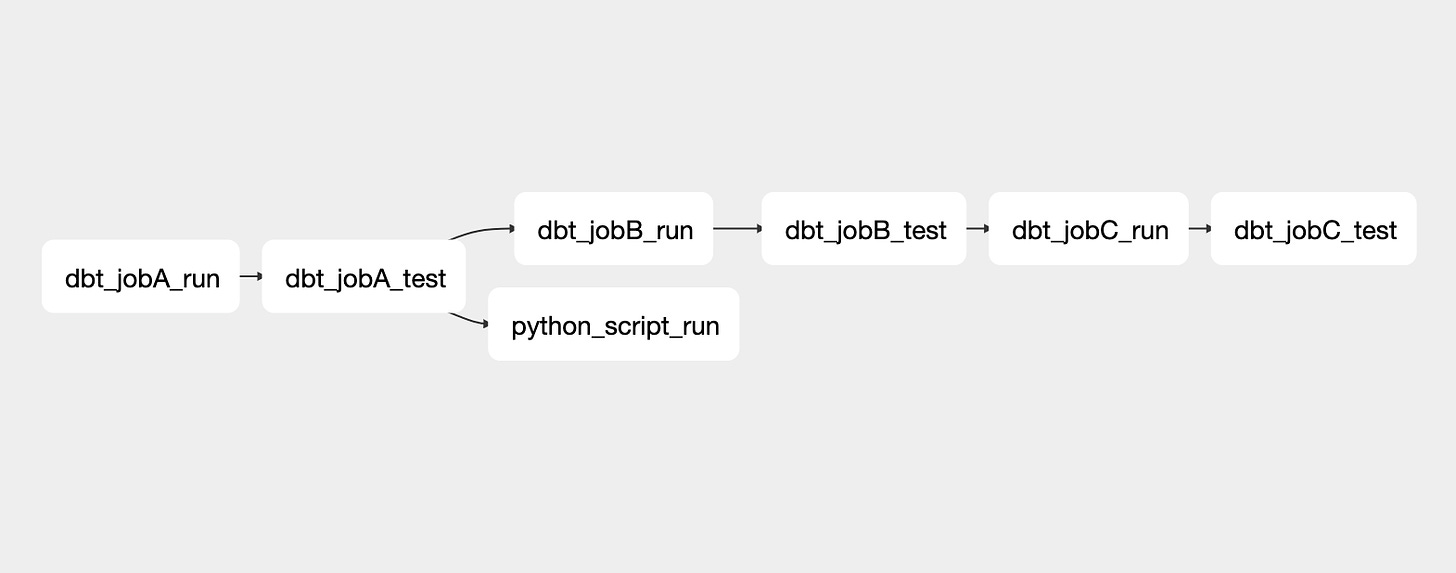

One Airflow Task per DBT Model

The straightforward way is to just split the DBT model per task on Airflow, three jobs will become three tasks with their three tests and the lineage you see in DBT would be exactly the same for Airflow.

Pros

Detailed observability and visibility in terms of failure, logging and alerting on each step.

Partial rerunniability, easy to run portion with the power of Airflow

External dependencies, easy to trigger external jobs like python scripts right after a specific model or a test.

Cons

Not utilizing the power of DBT

DBT will rebuild all the shared source/base tables

Not using the + dbt function to run upstream or downstream parts

Lot more work needs to be done on Airflow, like knowing the name of models, the structure and dependencies, this could be automated by going through the compiled configuration file but still a lot of work required by Engineering.

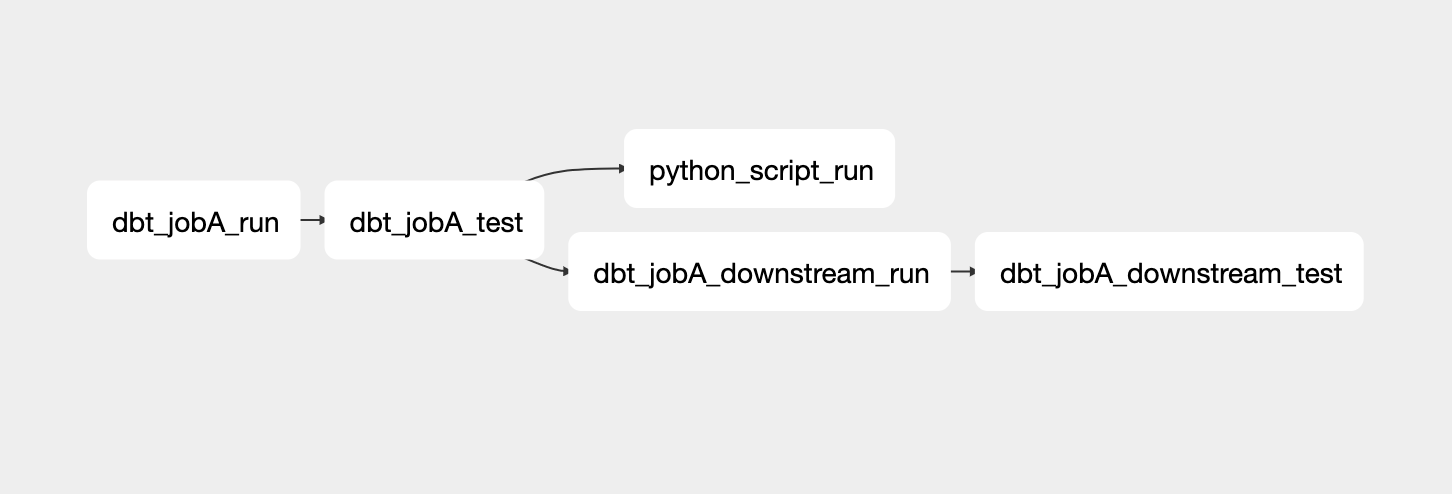

Hybrid Approach

This one is my favourite which gets the best from both worlds, however, comes with some inconsistencies if initially planned improperly. The main idea is to start with the first approach and move onto the second if some standards are met.

Standards or you may say Rules can be defined as:

If a DBT DAG has many big models meaning it’s expensive to recompute, it should be split into multiple tasks, not one to one, it could be splitting five models into two tasks and using the DBT downstream/upstream functionality. Giving more visibility and rerunnability with saving the cost of recompute.

If a DBT model has some external dependency, it should be split into multiple tasks, just split the required models and their tests into separate tasks, thus allowing it to trigger external jobs right after it completes.

DAG should look like this:

In this case, we had to split into jobA and job downstream (B and C, runs using +) because of the dependency of python script.

If you try to understand, the Hybrid basically answered the two concerns from the starting approaches.

Approach 1: Why wait for the whole DAG to complete before triggering the python script job.

Approach 2: Why run jobB and jobC separately if we can use the power of DBT

Now imagine if there are hundreds of models, the level to scale and maintain the existing DAGs with the first or second approach will get so complex.

As mentioned earlier, the hybrid approach comes with some possible cons, like hard to manage, following the standards and difficult to understand from the Airflow view etc. But it still gives the best from both of the worlds.

I hope this article was helpful in understanding the relationship between DBT and Airflow. Feedback is greatly appreciated.